What is content moderation?

Content moderation is the process of monitoring and controlling user-generated content to ensure it aligns with community guidelines, legal regulations and platform standards. It includes reviewing and managing posts, comments, videos, images and other content submitted by users rather than by the platform itself.

Content moderation aims to create a positive online environment where users can engage confidently and platforms can maintain their reputation. Moderation combines human moderators and automated tools. Human moderators evaluate content based on guidelines, while automated tools flag potentially problematic content for review.

Content moderation is now crucial in addressing issues like misinformation, cyberbullying and online harassment. To prevent the spread of harmful narratives and false information, social media platforms and websites must prioritize efficient content moderation.

Let's look at how businesses moderate content to support healthy online interactions and a better customer experience.

Popular content moderation techniques

Content moderation techniques vary to suit the needs of different online platforms and communities. Some common techniques include:

Pre-moderation: Human moderators review and approve content before it’s published, so only approved content is visible to users. This approach gives platforms the most control over content quality, but it can slow down publishing.

Post-moderation: Content is published immediately and reviewed by moderators afterward, typically from a queue that user flags or automated filters help prioritize. This keeps publishing fast but means inappropriate content may be visible until it’s reviewed.

Reactive moderation: Moderators act only after users report or complain about content, removing or restricting inappropriate posts once they're flagged. Because this approach relies on the community to spot problems, it scales well but can leave harmful content visible until someone reports it.

Hybrid moderation: Some platforms combine AI algorithms and human moderators to balance efficiency and accuracy. For instance, AI can handle real-time scanning, while human moderators tackle complex or nuanced cases.

Self-moderation: Users share responsibility for moderation. They can report inappropriate content and vote on user-generated content (UGC), fostering a sense of responsibility and community engagement.

Shadow moderation: Also called shadow banning, this approach quietly limits the visibility of rule-breaking content or accounts without notifying the poster. It can reduce harm without provoking confrontation, but it raises transparency concerns.
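To make the hybrid approach more concrete, here is a minimal Python sketch of confidence-based routing. It assumes a hypothetical classifier that outputs the probability that a post violates guidelines: high-confidence violations are removed automatically, low scores are published, and everything in between goes to a human moderator. The thresholds (0.9 and 0.3) and all names here are illustrative assumptions, not values used by any particular platform.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cutoffs; a real system would tune these on labeled data.
    remove_at: float = 0.9
    publish_below: float = 0.3

    def __post_init__(self) -> None:
        if not 0.0 <= self.publish_below <= self.remove_at <= 1.0:
            raise ValueError("need 0 <= publish_below <= remove_at <= 1")


def route(violation_score: float, t: Thresholds = Thresholds()) -> Decision:
    """Route a post using a (hypothetical) model's probability of violation."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("score must be a probability in [0, 1]")
    if violation_score >= t.remove_at:
        return Decision.REMOVE        # confident violation: act automatically
    if violation_score < t.publish_below:
        return Decision.PUBLISH       # confidently acceptable: publish right away
    return Decision.HUMAN_REVIEW      # uncertain: a human moderator decides


if __name__ == "__main__":
    for score in (0.05, 0.5, 0.95):
        print(score, route(score).value)
```

Widening the gap between the two thresholds sends more posts to human reviewers, trading moderator workload for accuracy on borderline cases.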

With so many moderation techniques available, you can choose the one, or the combination, that best aligns with your business goals and encourages meaningful interactions on your platform.

How content moderation benefits different business workflows

Content moderation is essential for businesses. It benefits many different workflows and helps ensure a positive digital customer experience. Let’s look at the key advantages.

Safeguard brand reputation

In the bustling world of e-commerce and social media, a brand's reputation is everything. Imagine the chaos that could ensue if offensive or harmful content were left unchecked. It would tarnish a brand's image and credibility.

While you can't control opinions, you can moderate what's posted. With a team of moderators, you can keep offensive content off your site, protecting your audience from negativity and preserving your brand image.

Take this scenario, for example. A popular fashion retailer proudly promotes body positivity and inclusivity. However, without proper content moderation, the retailer's social media channels become a breeding ground for body-shaming comments and offensive content.

Customers start to notice these hurtful remarks and share their concerns, causing a ripple effect of negative sentiment.

Realizing the urgency, the retailer swiftly removes offensive comments, blocks harmful users and actively encourages a supportive online community to restore a positive atmosphere and show its commitment to inclusivity.

Ensure compliance

Content moderation is a reliable ally for businesses navigating a complex landscape of regional regulations and legal requirements.

By adhering to guidelines on prohibited content, privacy and data protection, platforms can avoid legal troubles that could otherwise spell disaster for their operations.

For example, in 2017, YouTube faced a major controversy when brand advertisements were found appearing alongside extremist and inappropriate content on the platform.

Many well-known brands, unaware of where their ads were being displayed, were associated with content that went against their values and guidelines.

This lack of content moderation not only damaged the reputation of these brands but also raised concerns about ad placements on platforms like YouTube.

Advertisers pulled their campaigns, and YouTube had to take swift action to improve its content moderation practices. This incident highlighted the significant impact that inadequate content oversight and compliance can have on a brand's image and partnerships.

Reduce legal liabilities

Content moderation plays a pivotal role in mitigating legal liabilities for platforms. By carefully monitoring and removing content that violates regulations and legal requirements, platforms can avoid potential legal disputes and maintain their integrity.

For example, consider a video-sharing platform with a vast and diverse user base. Its moderation system scans uploaded videos for copyrighted material, flagging potential issues before they escalate.

By promptly addressing copyright violations, the platform prevents legal disputes with content creators and copyright holders. This preserves its standing as a responsible and law-abiding digital entity.
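The copyright scenario above can be sketched with simple fingerprint matching: the platform keeps a registry of fingerprints for works submitted by rights holders and checks each upload against it. This minimal Python example uses exact SHA-256 hashes, which only catch byte-identical re-uploads; production systems generally rely on perceptual audio and video fingerprints that survive re-encoding and cropping. The registry and function names are hypothetical.

```python
from __future__ import annotations

import hashlib

# Hypothetical registry of SHA-256 digests for works submitted by rights holders.
KNOWN_COPYRIGHTED: set[str] = set()


def fingerprint(data: bytes) -> str:
    """Exact-match fingerprint; any change to the bytes produces a different digest."""
    return hashlib.sha256(data).hexdigest()


def fingerprint_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a large video file in 1 MiB chunks instead of loading it all into memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def register_work(data: bytes) -> None:
    """Add a rights holder's file to the registry."""
    KNOWN_COPYRIGHTED.add(fingerprint(data))


def is_known_copyrighted(upload: bytes) -> bool:
    """Flag an upload whose fingerprint matches a registered work."""
    return fingerprint(upload) in KNOWN_COPYRIGHTED
```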

Improve advertising opportunities

For businesses and advertisers, content moderation creates a virtuous cycle. Brands seek platforms with robust content quality standards so their campaigns appear in a safe environment. By moderating content firmly, platforms become fertile ground for advertisers promoting their products and services.

Imagine your favorite lifestyle magazine website. It's not just a place to read – it's a source of ideas, inspiration and ads. When high-quality content is paired with relevant ads, it benefits both advertisers and readers. Advertisers can reach an engaged audience and readers receive ads that resonate with their interests.

Content moderation is critical for maintaining high-quality content and attracting advertisers. When the magazine site ensures that the content is clean and free from irrelevant material, it becomes an appealing platform for advertisers to promote their products to an interested and engaged audience.


Given these benefits, businesses should make content moderation a priority. Many are already using AI to streamline the process, which reduces the workload on human moderators and lets them concentrate on more intricate cases.

Future of content moderation with AI

AI technology is revolutionizing content moderation. Let's take a look at how AI will continue to impact and transform this field:

Greater scale: Advanced AI algorithms can scan and process large volumes of diverse user-generated content, allowing platforms to moderate it efficiently in real time.

Multilingual support: AI-driven moderation can review content in many languages, helping make online spaces more inclusive and accessible to users regardless of the language they speak.

Detect evolving threats: AI helps detect and combat emerging threats such as deepfakes, allowing platforms to address malicious trends proactively and keep users safer online.

Best practices for content moderation in 2023 and beyond

To ensure a safe and inclusive digital environment in 2023 and beyond, it’s important to implement robust content moderation practices. Follow these guidelines for success.

Transparent community guidelines

Transparency is crucial for fostering a positive and accountable community. It allows users to understand the rules and expectations.

Pro Tip: Encourage a positive environment by offering incentives and rewards for valuable contributions.

Respect for freedom of speech

To ensure safe content, platforms need to strike a balance between freedom of expression and maintaining a healthy environment. This means promoting constructive discussions while strictly prohibiting hate speech and harmful content.

Pro Tip: Set clear community guidelines to promote open and respectful discussions.

Proactive moderation

Automate your scanning process and use keyword filtering to detect and contain harmful content. By taking a proactive approach to moderation, you can prevent issues from getting worse and ensure the integrity of your platform.

Pro Tip: Keep your systems up to date to stay ahead of emerging trends.
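Keyword filtering, mentioned under proactive moderation, can be sketched in a few lines of Python. This minimal example assumes a hypothetical, hand-maintained blocklist and matches whole words case-insensitively, so a blocked term embedded in a longer, harmless word is not flagged. Keyword lists on their own miss deliberate misspellings and context, which is why they are usually paired with AI classifiers.

```python
from __future__ import annotations

import re
from typing import Iterable

# Hypothetical placeholder terms; a real list would be curated and updated regularly.
BLOCKLIST = {"scam", "spamlink", "badword"}


def build_pattern(terms: Iterable[str]) -> re.Pattern[str]:
    """Compile one case-insensitive regex that matches any term as a whole word."""
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    if not escaped:
        raise ValueError("blocklist must not be empty")
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


PATTERN = build_pattern(BLOCKLIST)


def flag_terms(text: str) -> list[str]:
    """Return blocked terms found in text, lowercased, in order of appearance."""
    return [m.group(0).lower() for m in PATTERN.finditer(text)]


def should_hold_for_review(text: str) -> bool:
    """Hold a post for moderator review if it contains any blocked term."""
    return bool(flag_terms(text))
```

Rebuilding `PATTERN` whenever the blocklist changes is one simple way to act on the tip about keeping systems up to date.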

User reporting mechanism

Give users an easy way to flag inappropriate content, and respond to their reports promptly to maintain trust.

Pro Tip: Encourage users to make use of the reporting system, and review reported content quickly so they stay confident in your content moderation.
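One common way to keep a reporting mechanism manageable is to count distinct reporters per item and escalate it to moderators once a threshold is reached, so a single user filing repeated reports cannot flood the queue. The Python sketch below assumes a hypothetical default threshold of three distinct reporters; the class and method names are illustrative only.

```python
from __future__ import annotations

from collections import defaultdict


class ReportQueue:
    """Collects user reports and escalates content once enough distinct users flag it."""

    def __init__(self, escalation_threshold: int = 3) -> None:
        if escalation_threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.escalation_threshold = escalation_threshold
        # content_id -> ids of users who reported it (repeat reports are ignored)
        self._reporters: dict[str, set[str]] = defaultdict(set)
        # content ids waiting for a moderator, in the order they were escalated
        self.review_queue: list[str] = []

    def report(self, content_id: str, user_id: str) -> bool:
        """Record a report; return True only if this report triggered escalation."""
        reporters = self._reporters[content_id]
        if user_id in reporters:
            return False  # same user reporting again does not count twice
        reporters.add(user_id)
        if len(reporters) == self.escalation_threshold:
            self.review_queue.append(content_id)
            return True
        return False
```

Using `==` rather than `>=` means each item is escalated exactly once, no matter how many further reports arrive.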

Monitor bias in AI models

Regularly monitor AI algorithms for biases to ensure fair content moderation and avoid discriminatory outcomes. Respect diversity by implementing measures to address any biases that may arise.

Pro Tip: Collaborate with AI experts to enhance and optimize your moderation algorithms for more accurate and unbiased results.
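A simple starting point for bias monitoring is to compare error rates across groups on a human-labeled audit sample. The Python sketch below computes each group's false positive rate, the share of acceptable posts the model wrongly flagged; a large gap between groups, such as posts written in different languages or dialects, is a signal to investigate. The record format and grouping are assumptions for illustration, and a full audit would look at other metrics (for example, missed violations) as well.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditRecord:
    group: str                # hypothetical grouping, e.g. language or dialect
    flagged: bool             # did the model flag the post?
    actually_violating: bool  # ground-truth label from human reviewers


def false_positive_rates(records: list[AuditRecord]) -> dict[str, float]:
    """Per group: share of non-violating posts that the model flagged anyway."""
    benign: dict[str, int] = defaultdict(int)
    wrongly_flagged: dict[str, int] = defaultdict(int)
    for r in records:
        if not r.actually_violating:
            benign[r.group] += 1
            if r.flagged:
                wrongly_flagged[r.group] += 1
    return {g: wrongly_flagged[g] / n for g, n in benign.items()}


def max_gap(rates: dict[str, float]) -> float:
    """Largest difference in false positive rate between any two groups."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```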

How Sprinklr can help you with your content moderation efforts

You can enhance your content moderation with Sprinklr to create a safer and more engaging online experience for your brand and audience. You can also ensure compliance with global brand standards and proactively moderate content before publishing it.

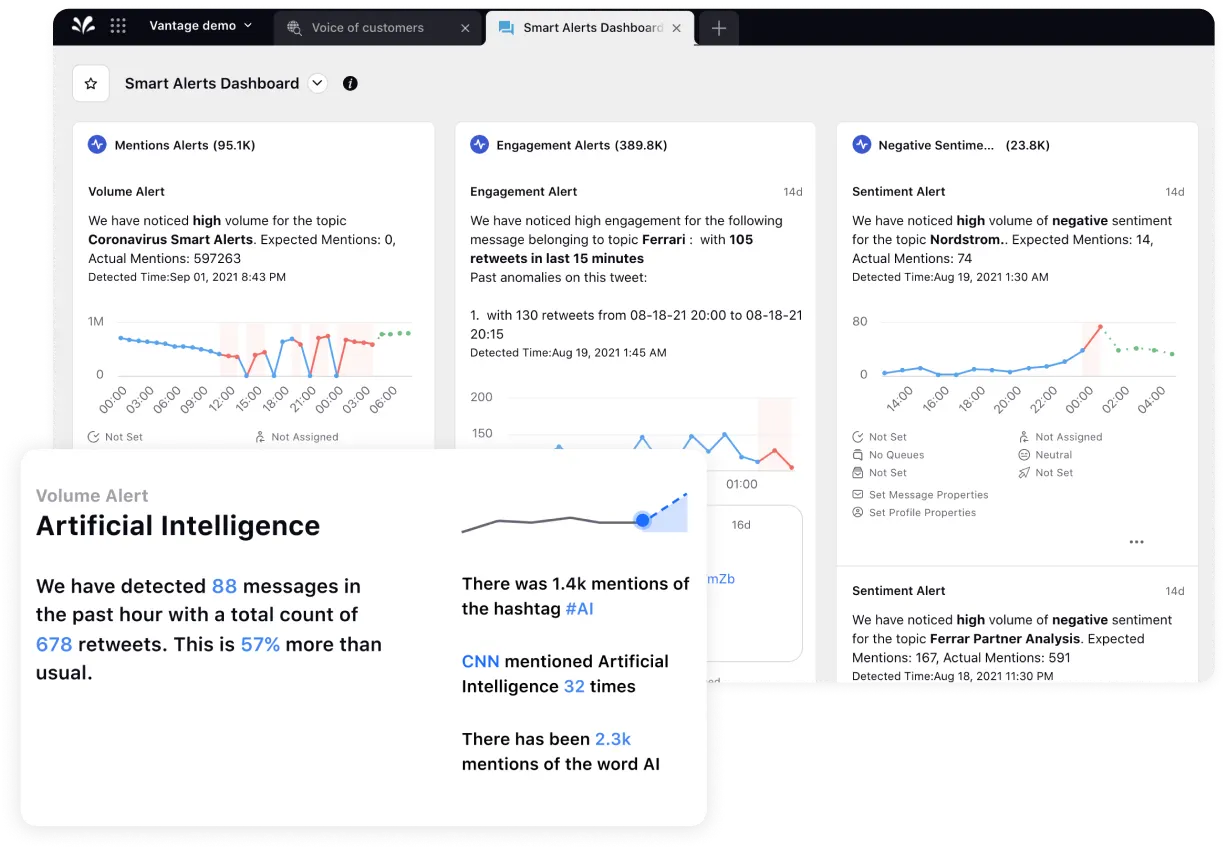

Sprinklr's AI-powered listening tool helps you quickly identify opportunities and challenges on social media and avert crises before they escalate.

What’s more, its social media automation capabilities allow you to manage your social media assets from a single place so your content stays top-notch and your brand image stays pristine.

And that’s not all – its Smart Compliance feature makes it super-easy to filter and curate UGC by flagging spam and inappropriate content in no time. This way, your life will be a whole lot easier as you can leave these mind-numbing tasks to the power of advanced AI.

Frequently Asked Questions

AI-powered proactive moderation helps platforms in quickly detecting and addressing emerging threats. With automated scanning and keyword filtering, platforms can effectively stay ahead of malicious content creators.
