Bulk Prediction


Note: Before you start with bulk prediction, make sure you are familiar with Creating a Smart FAQ Model.

Overview

The bulk prediction feature generates predictions for multiple queries at once on your Smart FAQ models. Instead of submitting queries one by one, you upload them in a single file and receive the predictions for all of them in a single output file, which saves time and effort when handling a large volume of queries.

Configure bulk prediction

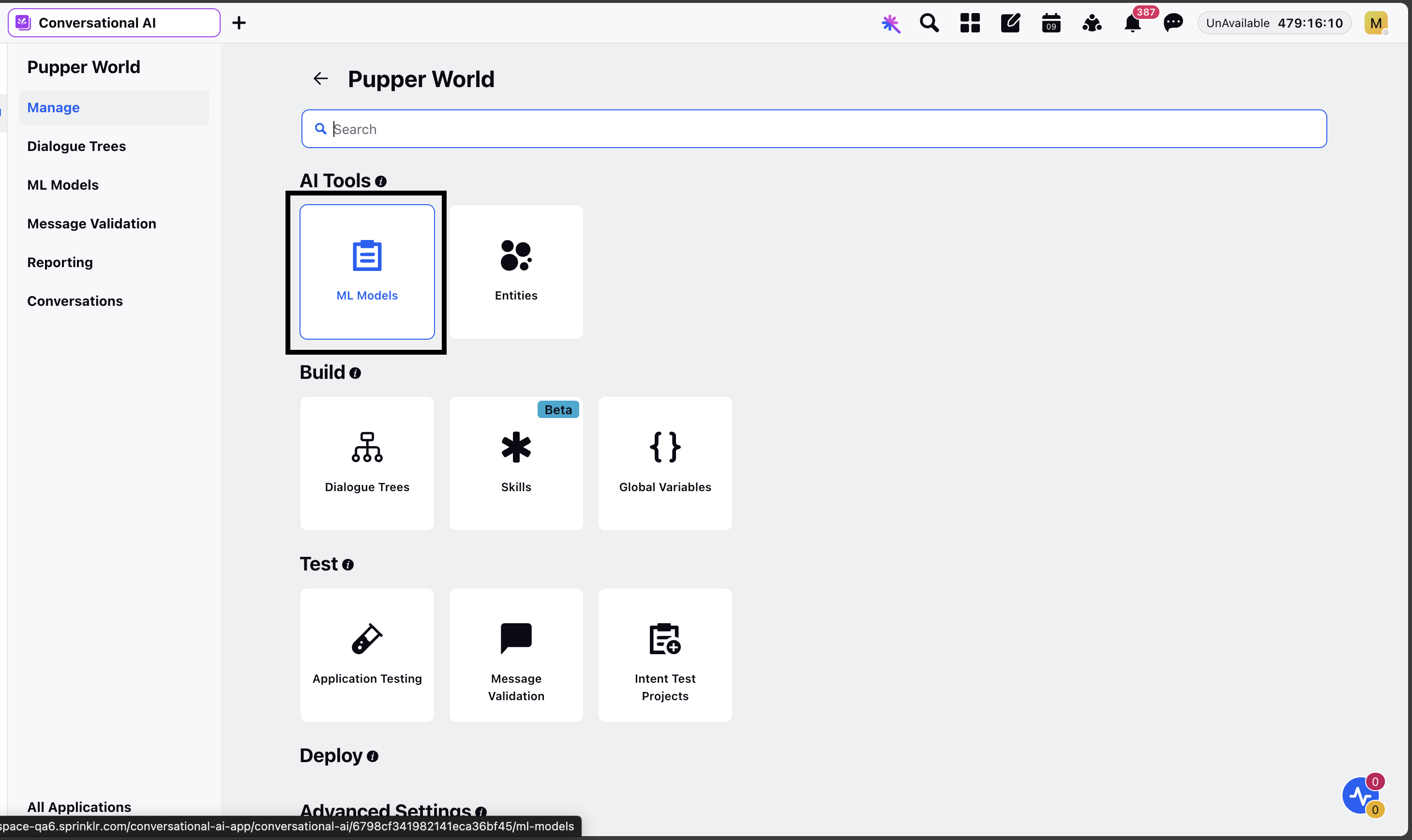

In your conversational AI Smart FAQ application, click ML Models under AI tools.

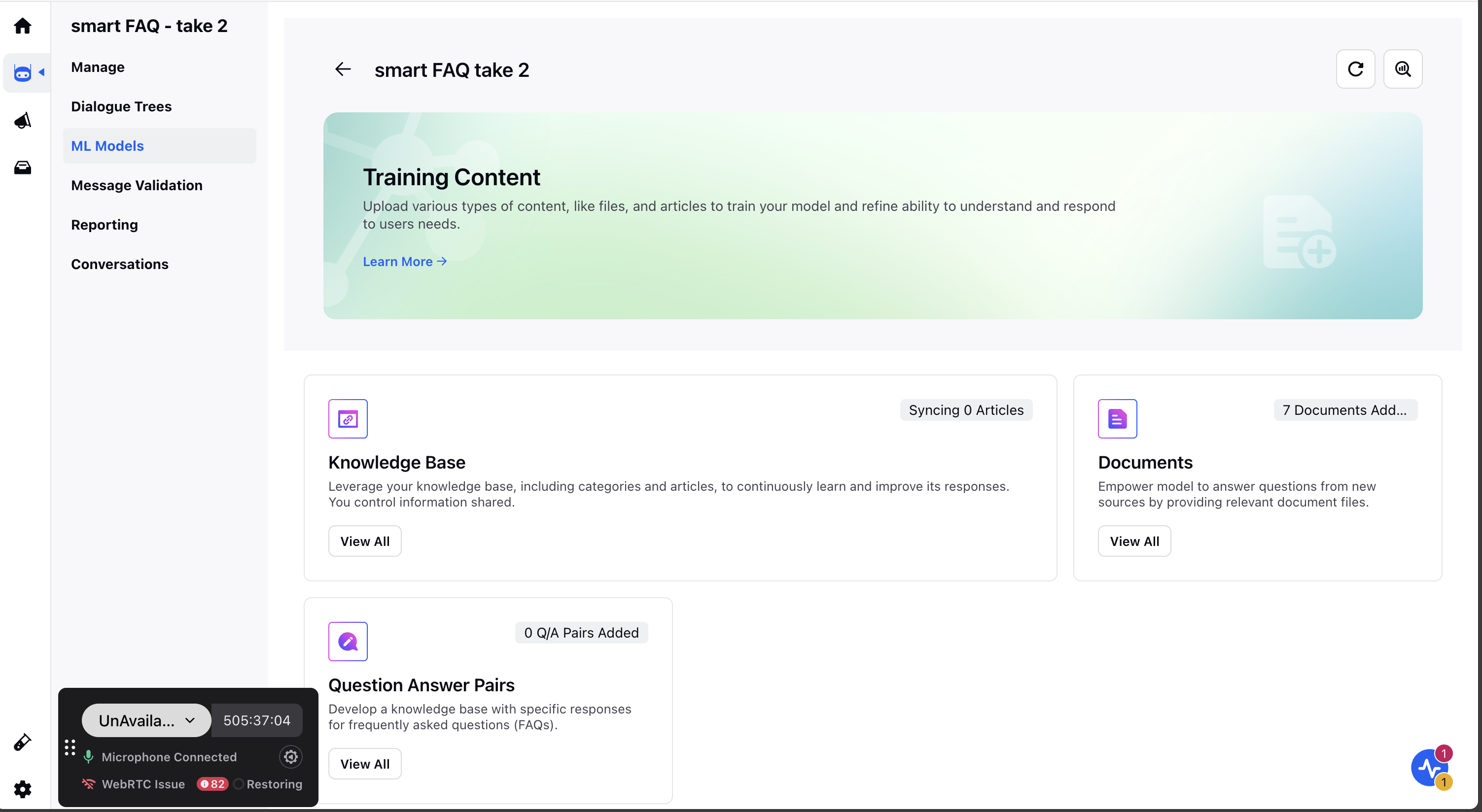

In the ML Models window, click the application for which you want to download predictions. A Content Sourcing page opens.

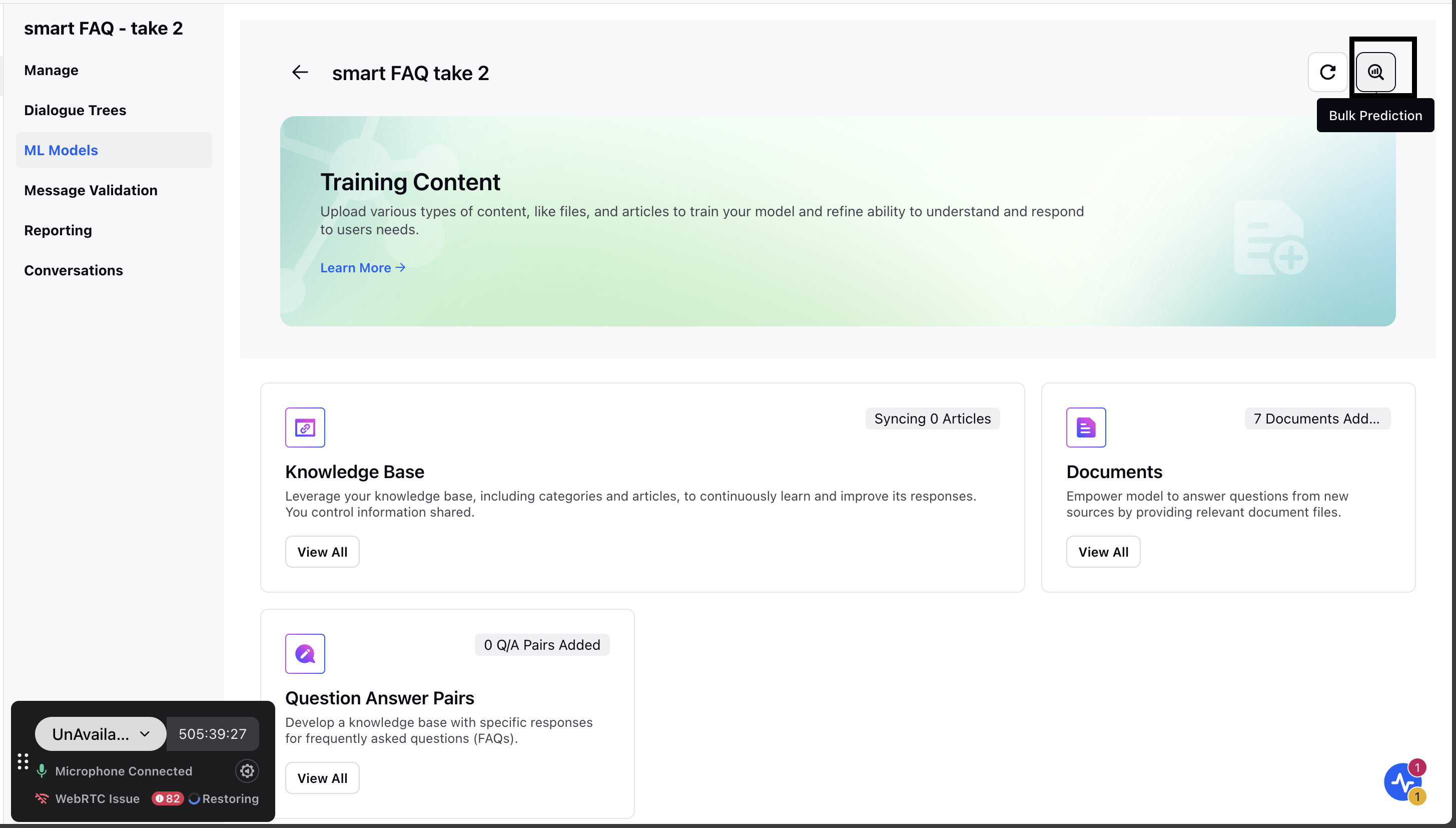

Navigate to Content Sources: Click the Bulk Prediction icon in the top-right corner of the Content Sources page.

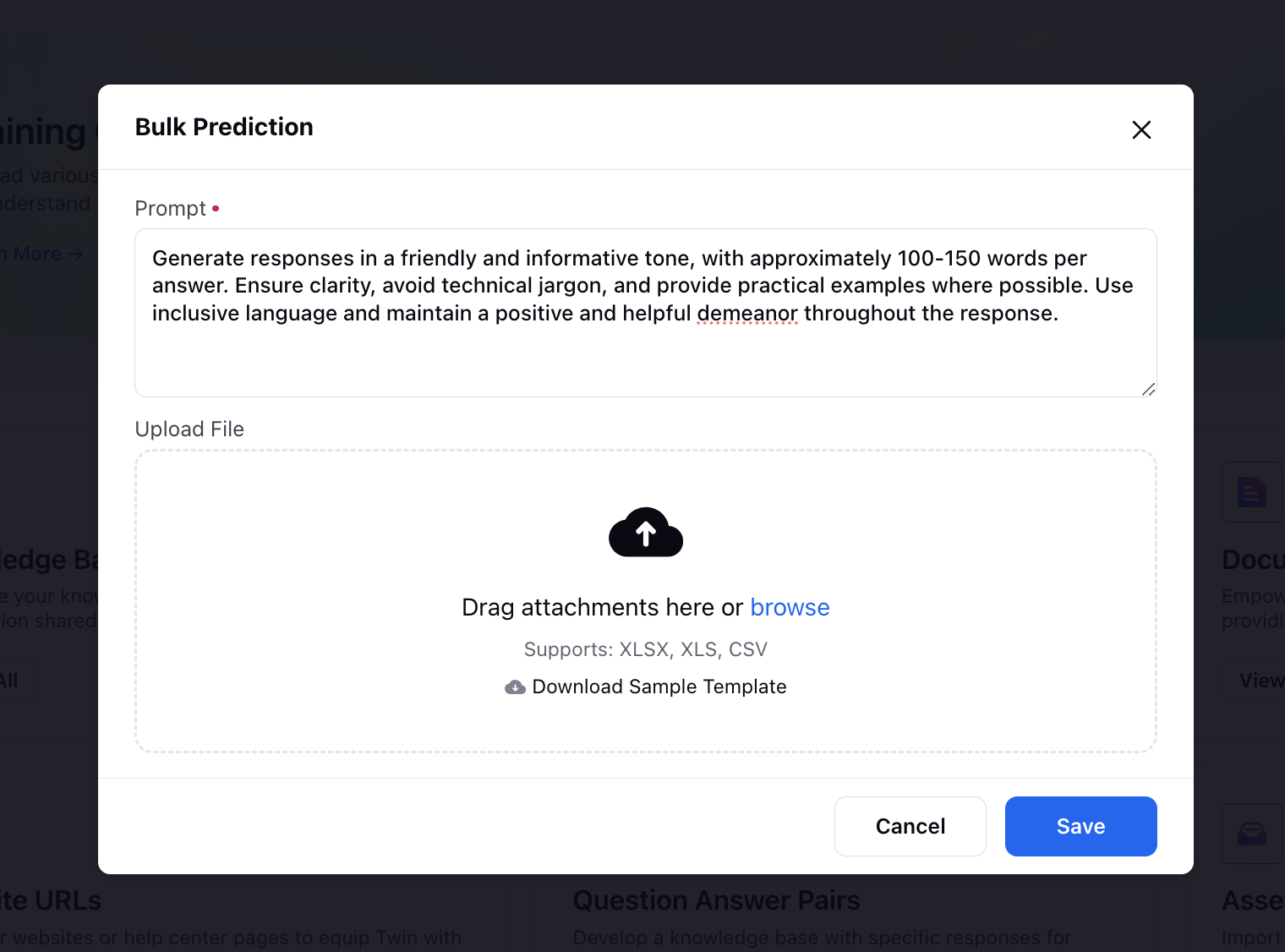

Add a Prompt: Enter a prompt that guides the model in generating answers, for example the desired tone, the word count, and any other requirements.

Upload Queries: Upload an Excel file containing all your queries. You can also download a sample file to see the required format.

Save and Download: Click Save. You will receive a platform notification with the link to download a file with predictions for all your queries.
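
Before filling in the query file, it can help to tidy the list of queries so that blank or repeated entries do not produce wasted predictions. The following is a minimal sketch of that preparation step, not part of the platform: `clean_queries` is a hypothetical helper, and the cleaned list still has to be placed into the layout shown in the downloadable sample file.

```python
def clean_queries(raw_queries):
    """Trim whitespace, drop blanks, and remove case-insensitive duplicates.

    Hypothetical helper for preparing queries; the first occurrence of each
    query is kept and the original order is preserved.
    """
    seen = set()
    cleaned = []
    for query in raw_queries:
        text = " ".join(query.split())  # collapse runs of whitespace
        key = text.casefold()           # compare without regard to case
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


queries = clean_queries([
    "How do I reset my password? ",
    "",
    "how do I reset my password?",
    "What are your   support hours?",
])
print(queries)
```

Copy the cleaned queries into the sample file's format before uploading, since the required column layout is defined by that sample and not by this sketch.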

Output

The output Excel file contains the following columns:

DOCUMENT_CONTENT: The question or prompt given by the user.

Answer: The final answer that the user wants to publish.

Answer with Citation: The output answer together with citations of its sources.

Sources: The sources that were fetched.

Context: The chunks that were fetched from the knowledge base (KB).

Question: The reworded question.

Raw Response: The API response.
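
Once the output file is downloaded, a quick check can confirm that all listed columns are present and flag queries that came back without an answer. The sketch below is one possible way to do this and rests on two assumptions: the output workbook has been exported to CSV, and its header row uses the column names exactly as listed above. The functions `missing_columns` and `rows_without_answer` are hypothetical helpers, not platform features.

```python
import csv
import io

# Column names as listed in this article. The exact header spelling in a real
# output file is an assumption; check it against your own download.
EXPECTED_COLUMNS = [
    "DOCUMENT_CONTENT", "Answer", "Answer with Citation",
    "Sources", "Context", "Question", "Raw Response",
]


def missing_columns(header):
    """Return the expected columns that do not appear in the header."""
    present = set(header)
    return [col for col in EXPECTED_COLUMNS if col not in present]


def rows_without_answer(csv_text):
    """Return the queries whose Answer cell is empty."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = missing_columns(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {missing}")
    return [
        row["DOCUMENT_CONTENT"]
        for row in reader
        if not (row["Answer"] or "").strip()
    ]


# Made-up sample data standing in for a CSV export of the output file.
sample = (
    "DOCUMENT_CONTENT,Answer,Answer with Citation,Sources,Context,Question,Raw Response\n"
    "How do I reset my password?,Use the reset link.,Use the reset link. [1],"
    "faq.pdf,chunk text,How can I reset my password?,{}\n"
    "What are your support hours?,,,,,,\n"
)
print(rows_without_answer(sample))
```

Queries flagged this way are candidates for a revised prompt or for additional content sources before the answers are published.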