Automated Testing of Bulk Cases


Before you begin:

To prepare for Automated Quality Assurance (QA) testing, ensure you have created a conversation that requires testing. Before adding a test case, take note of the case number associated with the conversation.

Note: To enable Automated QA, please contact tickets@sprinklr.com and provide the Partner ID and Name. If you are unsure of your Partner ID and Name, please work with your Success Manager.

Overview:

Automated testing for Conversational AI assesses the performance of the bot across diverse input scenarios by executing a range of test cases. Automating the testing process improves efficiency and reliability and speeds up the deployment of Conversational AI.

The framework presents the user replies from the chosen cases to the bot sequentially, enabling an evaluation of the bot's functionality. This method not only determines whether the bot is operating correctly but also identifies potential bottlenecks and performance issues that may affect its overall effectiveness. The outcomes are then categorized as "Passed" if the bot performs as expected or "Failed" if issues arise.
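To make the replay idea concrete, here is a minimal conceptual sketch in Python. It is not Sprinklr's implementation or API. The names `replay_case`, `CaseResult`, and `sample_bot` are hypothetical, and the pass criterion (an exact match between the bot's reply and the reply recorded in the case) is an assumption made only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical type for illustration: (user reply, expected bot reply).
Turn = Tuple[str, str]


@dataclass
class CaseResult:
    case_number: str
    status: str       # "Passed" or "Failed"
    failed_turn: int  # index of the first mismatching turn, or -1 if none


def replay_case(case_number: str, turns: List[Turn],
                bot: Callable[[str], str]) -> CaseResult:
    """Send each user reply to the bot in order and compare the responses.

    Assumption: a turn passes only when the bot's reply matches the
    expected reply exactly (ignoring surrounding whitespace).
    """
    for index, (user_reply, expected) in enumerate(turns):
        actual = bot(user_reply)
        if actual.strip() != expected.strip():
            # Stop at the first unexpected reply and mark the case as failed.
            return CaseResult(case_number, "Failed", index)
    return CaseResult(case_number, "Passed", -1)


def sample_bot(message: str) -> str:
    """Stand-in bot with canned replies, used only to exercise the sketch."""
    replies = {
        "hi": "Hello! How can I help?",
        "track my order": "Please share your order ID.",
    }
    return replies.get(message.lower(), "Sorry, I did not understand.")


if __name__ == "__main__":
    # Two made-up cases: the second contains a reply the bot cannot handle.
    cases = {
        "1001": [("hi", "Hello! How can I help?"),
                 ("track my order", "Please share your order ID.")],
        "1002": [("hi", "Hello! How can I help?"),
                 ("refund", "Your refund has been started.")],
    }
    for number, turns in cases.items():
        result = replay_case(number, turns, sample_bot)
        print(result.case_number, result.status, result.failed_turn)
```

Recording the index of the first failing turn mirrors the debugging step later in this article, where you walk through the conversation to find where the bot went wrong.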

To Implement Automated QA

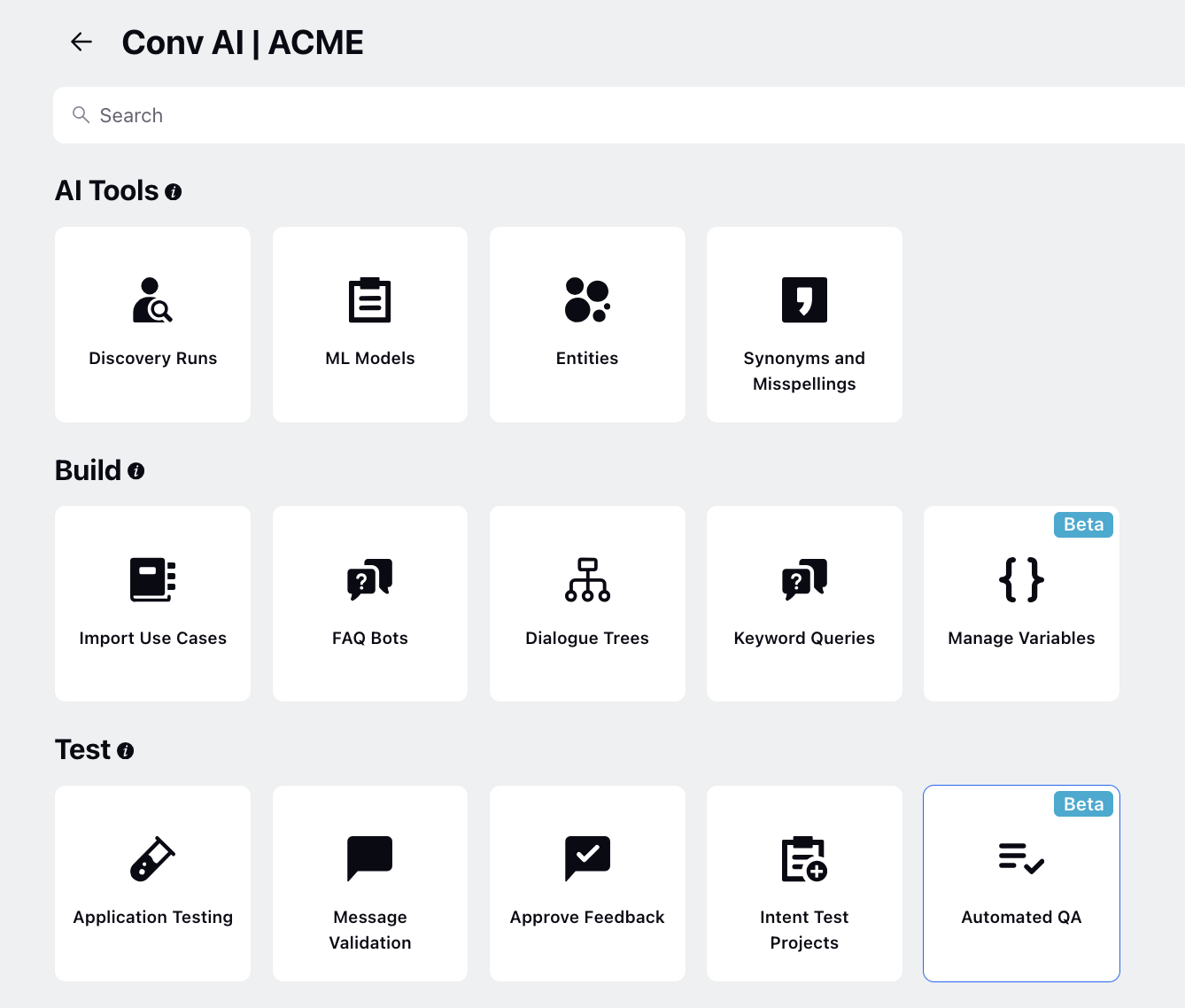

Open your Conversational AI application and select Automated QA within Test.

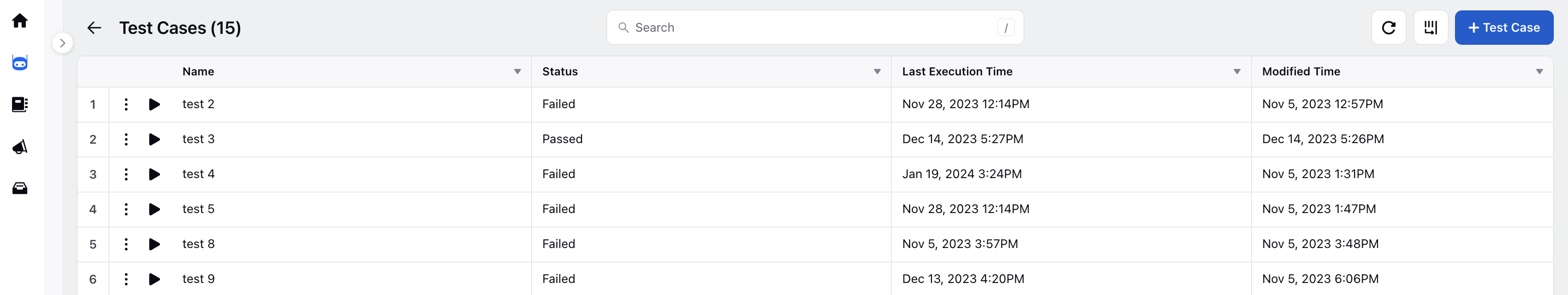

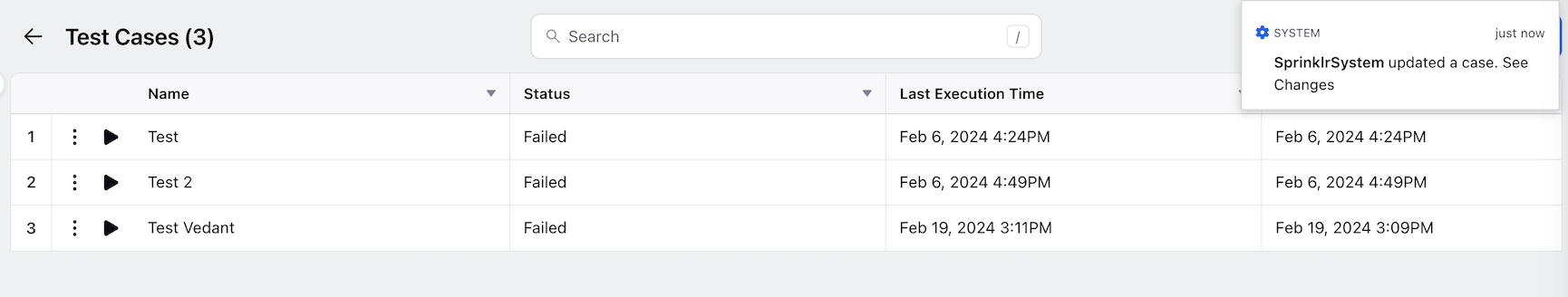

On the Test Cases window, click Test Case in the top right corner.

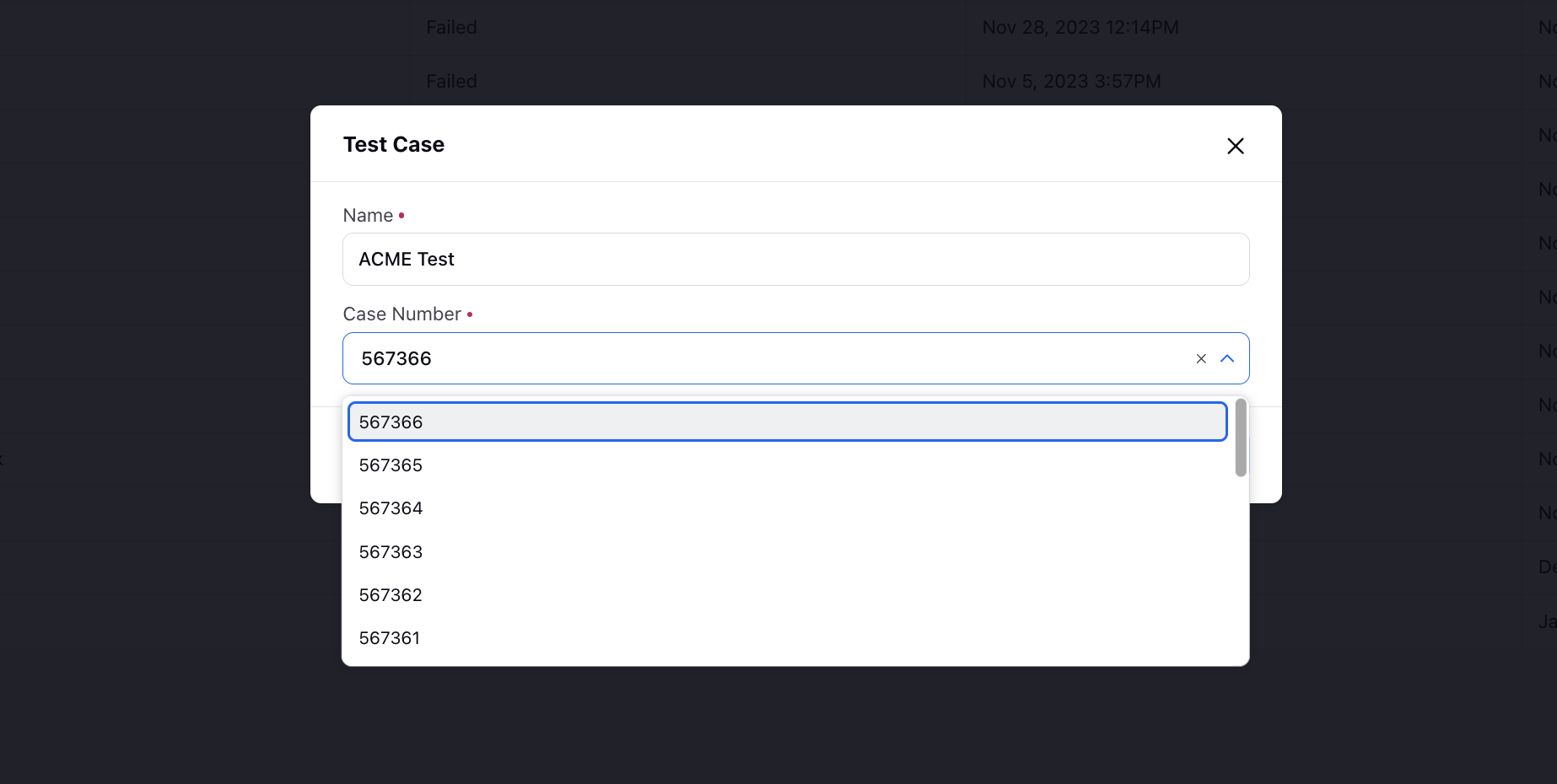

On the Test Case pop-up window, assign a name to the test you are setting up. Additionally, choose the specific case number(s) that you want the bot to run on. Click the Save button to store the test configuration.

Click on the play icon alongside the created test case to initiate the execution of the automated test.

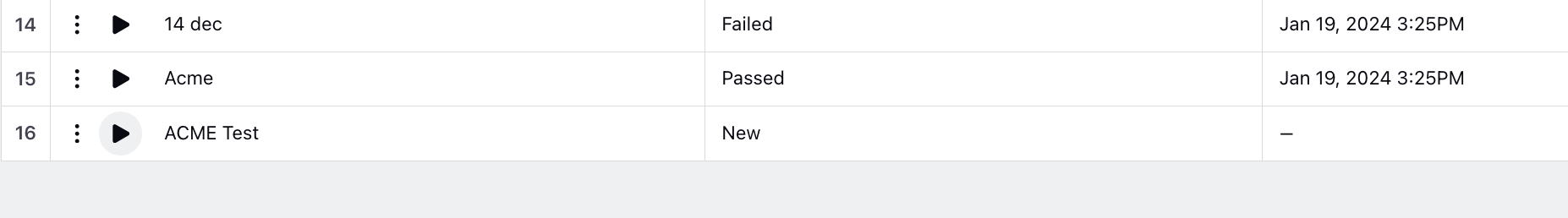

If the bot performs as expected during the test, the status will be marked as Passed. Conversely, if any issues arise or the bot fails to function correctly, the status will be marked as Failed.

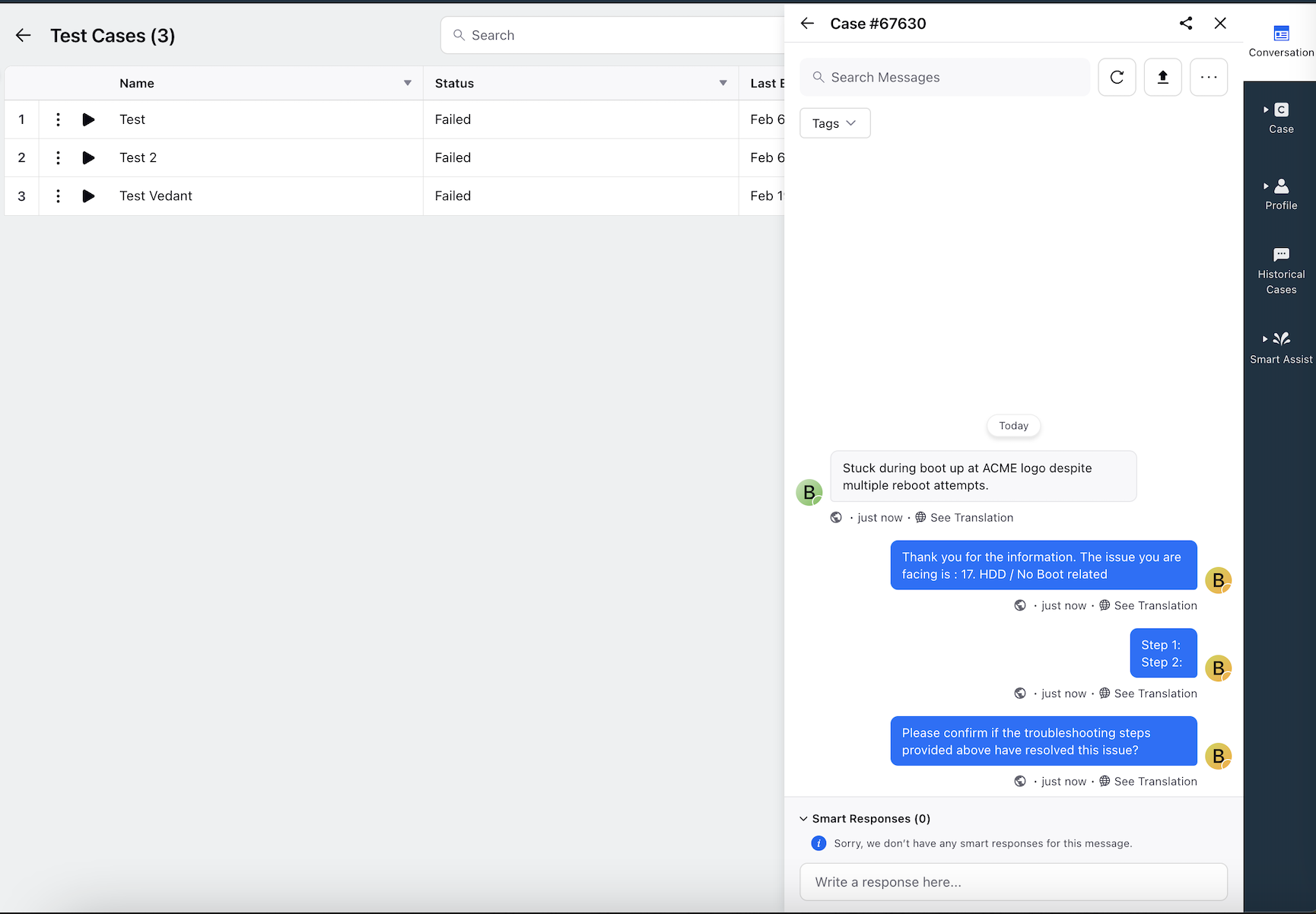

Additionally, a new case will be created. Click on the platform notification that you receive to open the Case Third Pane.

Here, you can walk through the conversation and debug any issues.

By following these steps, you can use Automated QA to assess your Conversational AI and verify that it meets the expected standards.