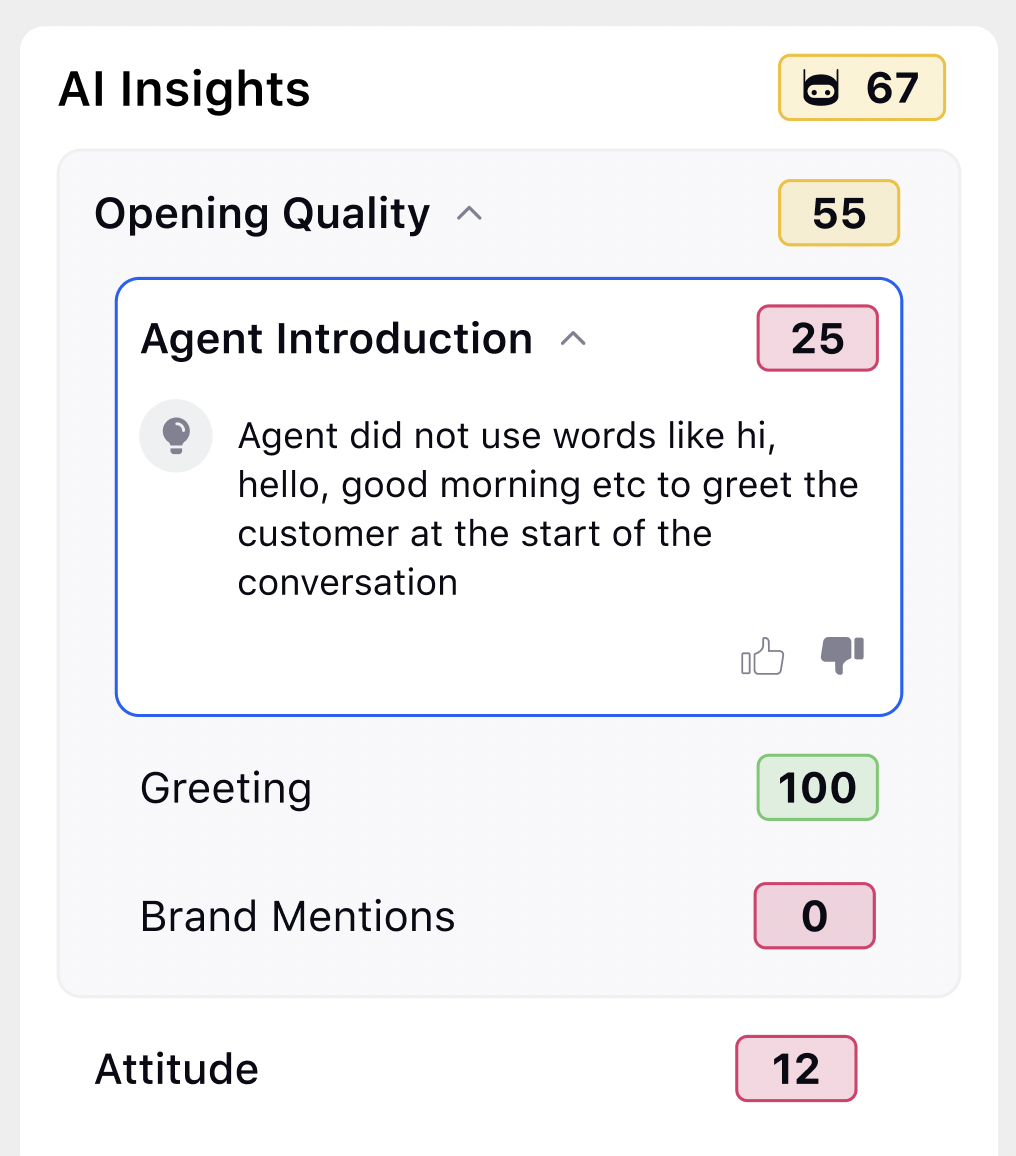

Feedback on AI Scoring in AI Insights

You can provide feedback on AI scoring to improve its accuracy. For each parameter, you can enter the expected score, report incorrect evidence detected by the AI, and flag evidence the AI should have identified but missed. This feedback is used to train the AI so that its scoring becomes more precise and better reflects actual performance.

To provide feedback on AI scoring, permission must be granted at the checklist level. For details on permissions, refer to Permissions on Record Manager.

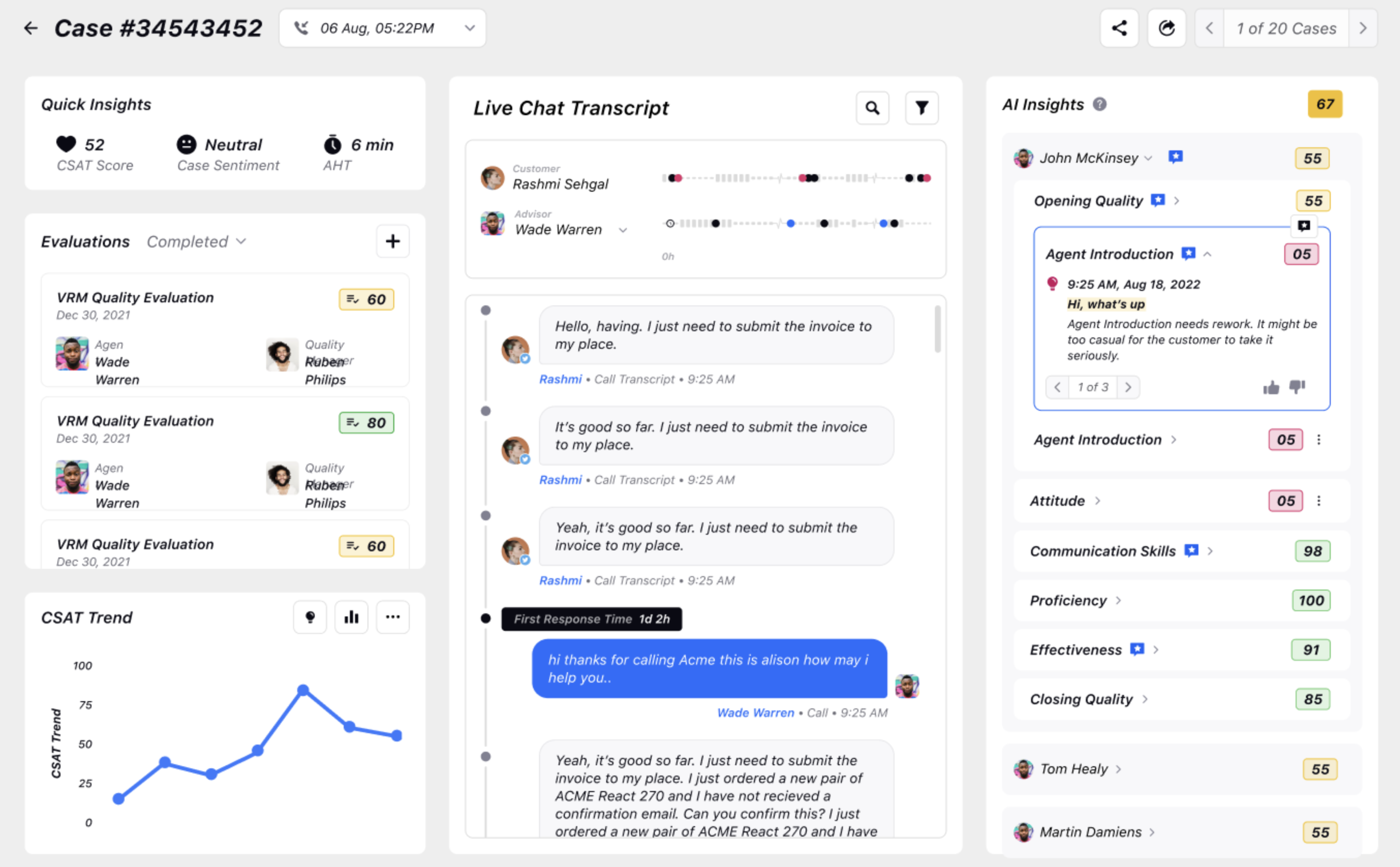

Providing Feedback on AI Scoring

To improve the accuracy of AI scoring, follow these steps to provide detailed feedback:

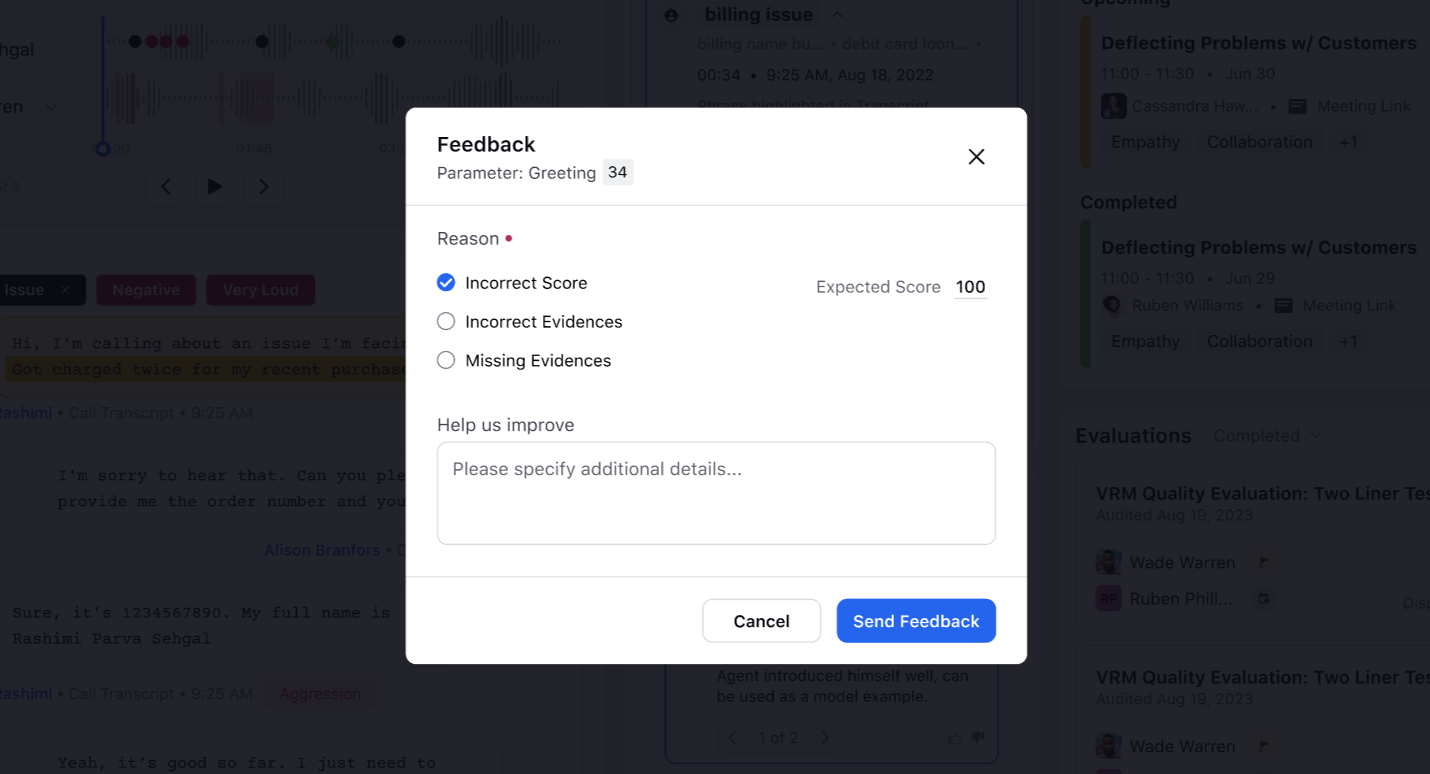

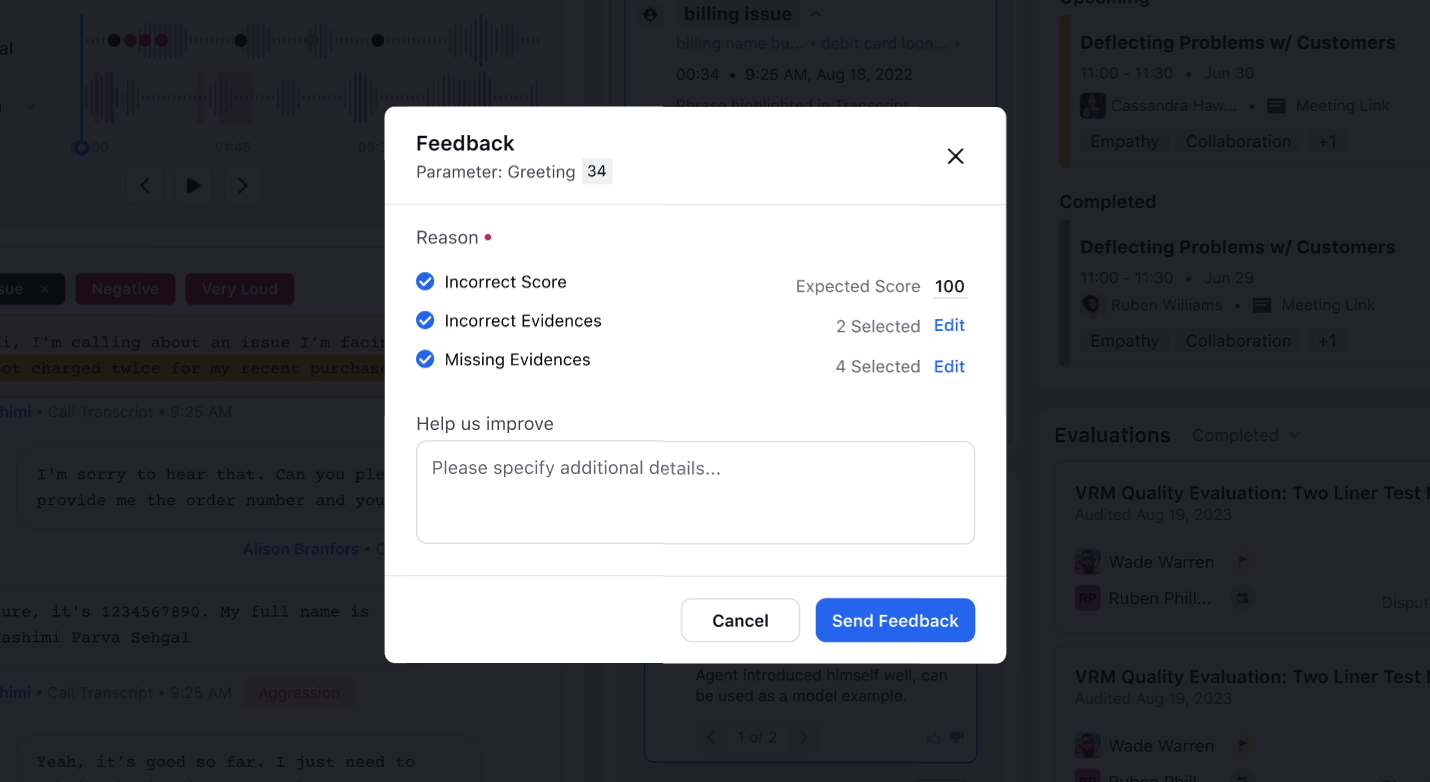

If you find the AI score inaccurate, select the thumbs-down icon to start the feedback process.

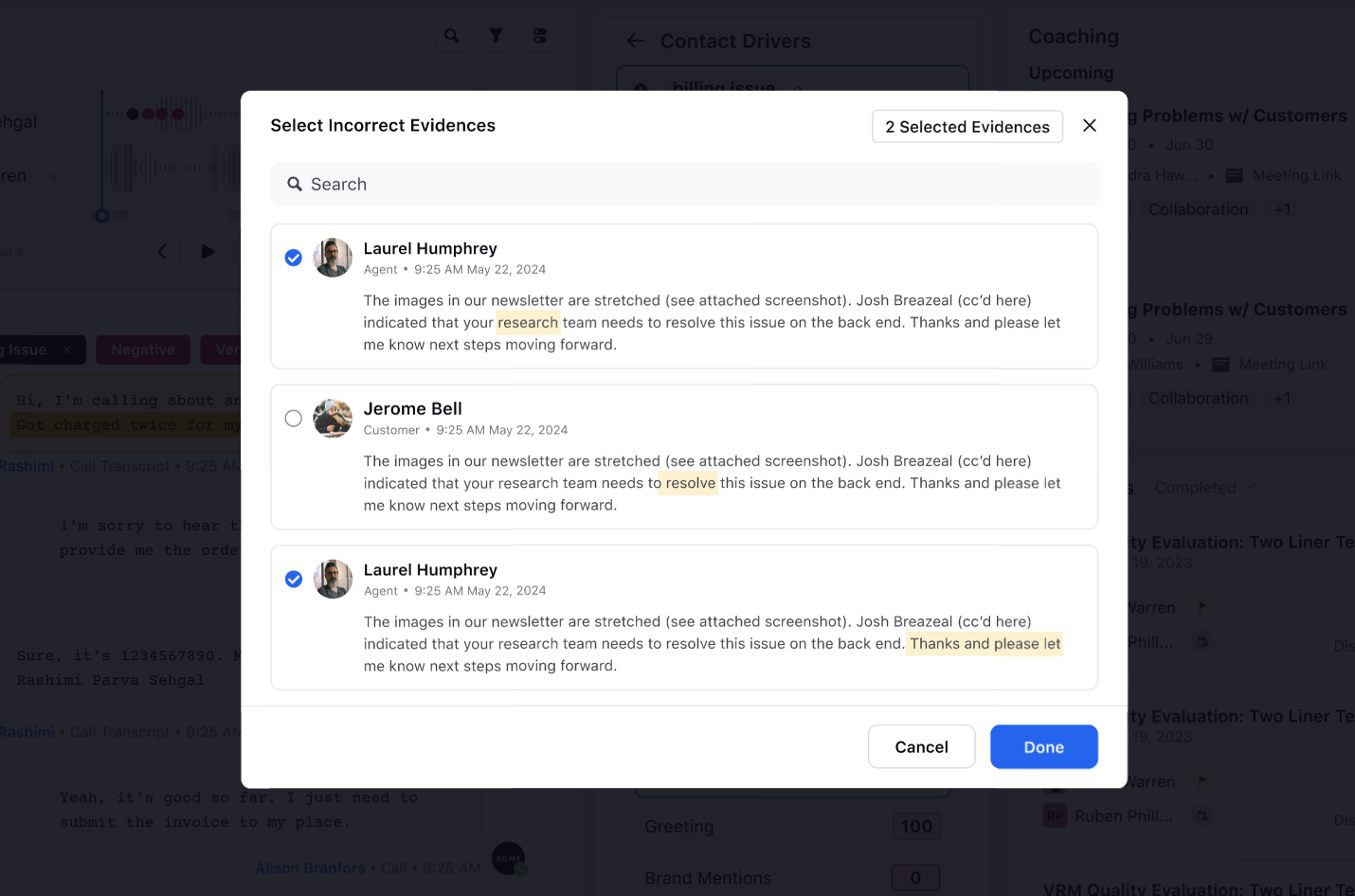

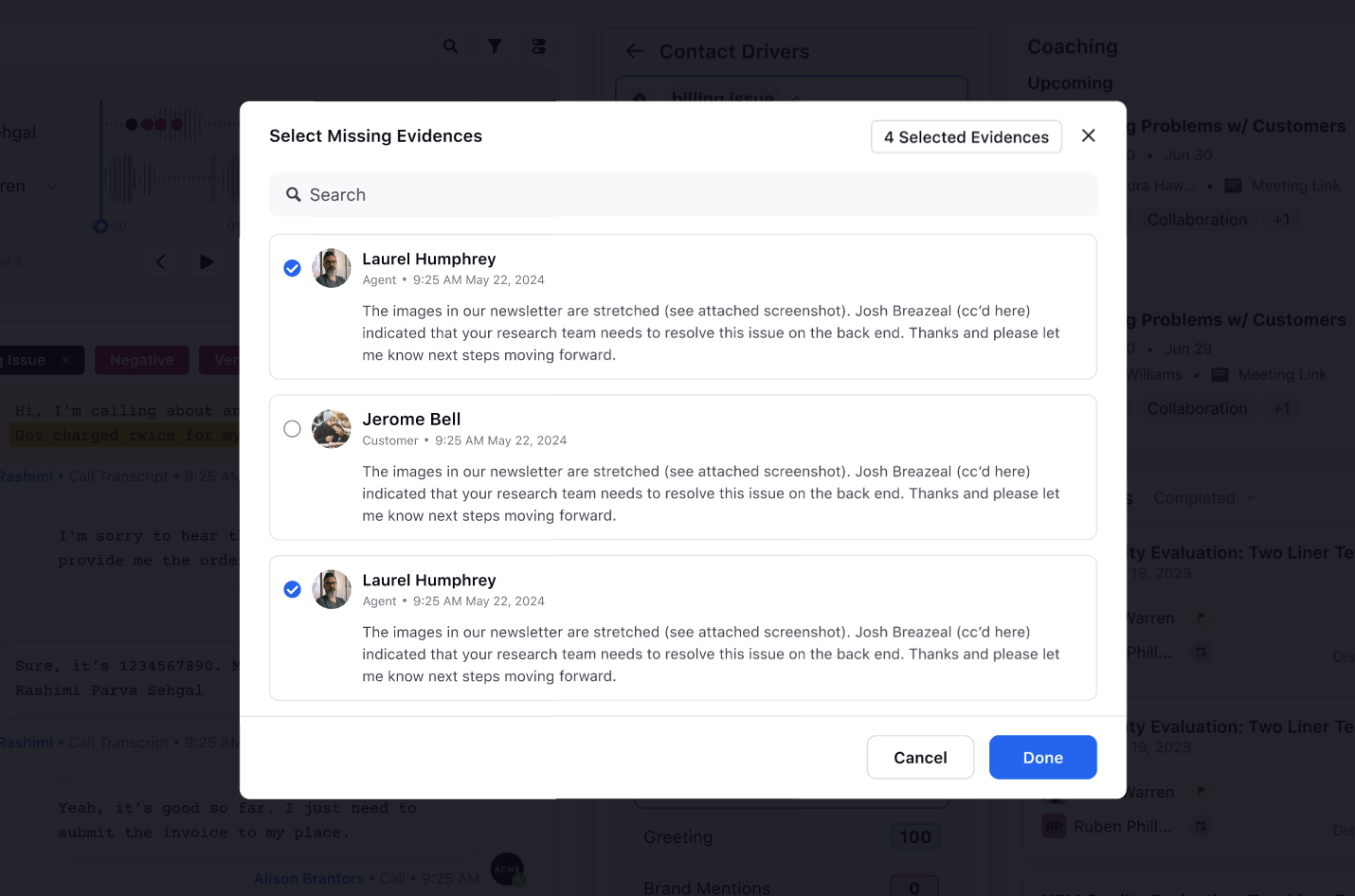

Choose a reason for your feedback:

Incorrect Score: Indicate that the score assigned by the AI is incorrect and enter the score you believe should be assigned. For single-select list parameters, the NA option always appears in the dropdown. For parameters where the score is entered manually, NA is not available.

Incorrect Evidences: Review the list of evidence provided by the AI and select every item that is incorrect.

Missing Evidences: Identify and select all evidence that the AI missed but that should have been included.

Once the evidence is selected, you can edit it so that it accurately reflects the conversation or case details.

Provide any additional comments in the Help us improve comment box to clarify your feedback, such as contextual information or specific details about why the AI's scoring was inaccurate. The comment box can be made mandatory or optional for each checklist item using the Mark Feedback Comment Mandatory checkbox in Record Manager.

Click the Send Feedback button to submit your feedback. This feedback is used to train and improve the AI, leading to more accurate scoring in the future.
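As a rough illustration of what a feedback entry contains, the sketch below models the fields described in the steps above and checks the two rules stated there: NA is offered only for single-select parameters, and the comment may be configured as mandatory. The class name, field names, and the `validation_errors` helper are hypothetical and are not part of any Sprinklr API; a single reason per entry is also an assumption.

```python
from dataclasses import dataclass, field
from typing import List, Union

NA = "NA"  # placeholder for the NA dropdown option (hypothetical constant)


@dataclass
class ScoreFeedback:
    """Hypothetical record of one negative feedback entry."""
    reason: str  # "Incorrect Score", "Incorrect Evidences", or "Missing Evidences"
    expected_score: Union[float, str, None] = None
    incorrect_evidences: List[str] = field(default_factory=list)
    missing_evidences: List[str] = field(default_factory=list)
    comment: str = ""


def validation_errors(fb: ScoreFeedback, single_select: bool,
                      comment_mandatory: bool) -> List[str]:
    """Return the problems that would block submitting this entry."""
    errors = []
    if fb.reason == "Incorrect Score":
        if fb.expected_score is None:
            errors.append("expected score is required")
        elif fb.expected_score == NA and not single_select:
            # NA appears only in the single-select dropdown.
            errors.append("NA is only offered for single-select parameters")
    if comment_mandatory and not fb.comment.strip():
        # Mirrors the Mark Feedback Comment Mandatory setting.
        errors.append("comment is required")
    return errors
```

For example, an entry with reason Incorrect Score and an expected score of NA passes for a single-select parameter but would be rejected for a manually entered score.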

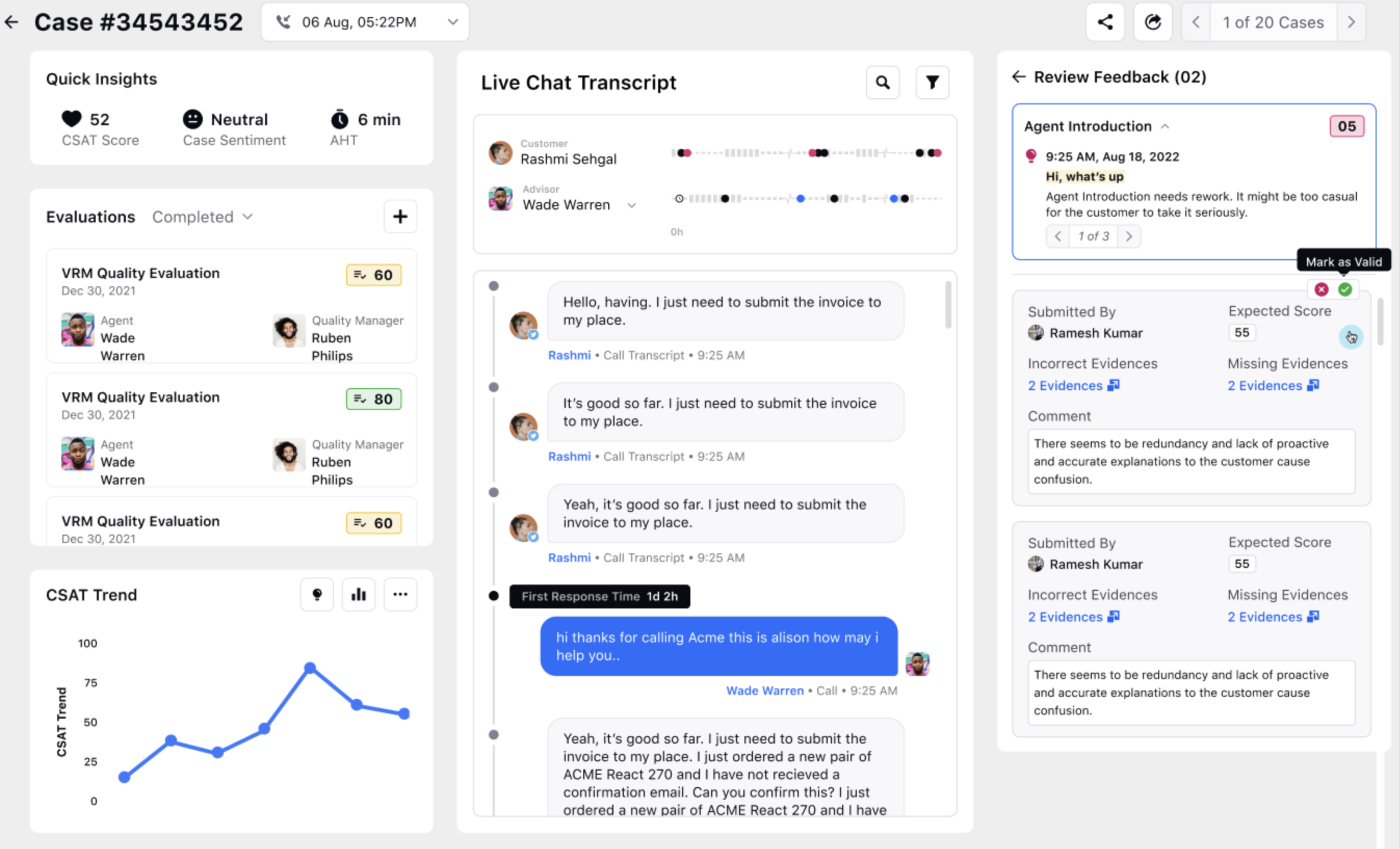

Feedback Review Flow

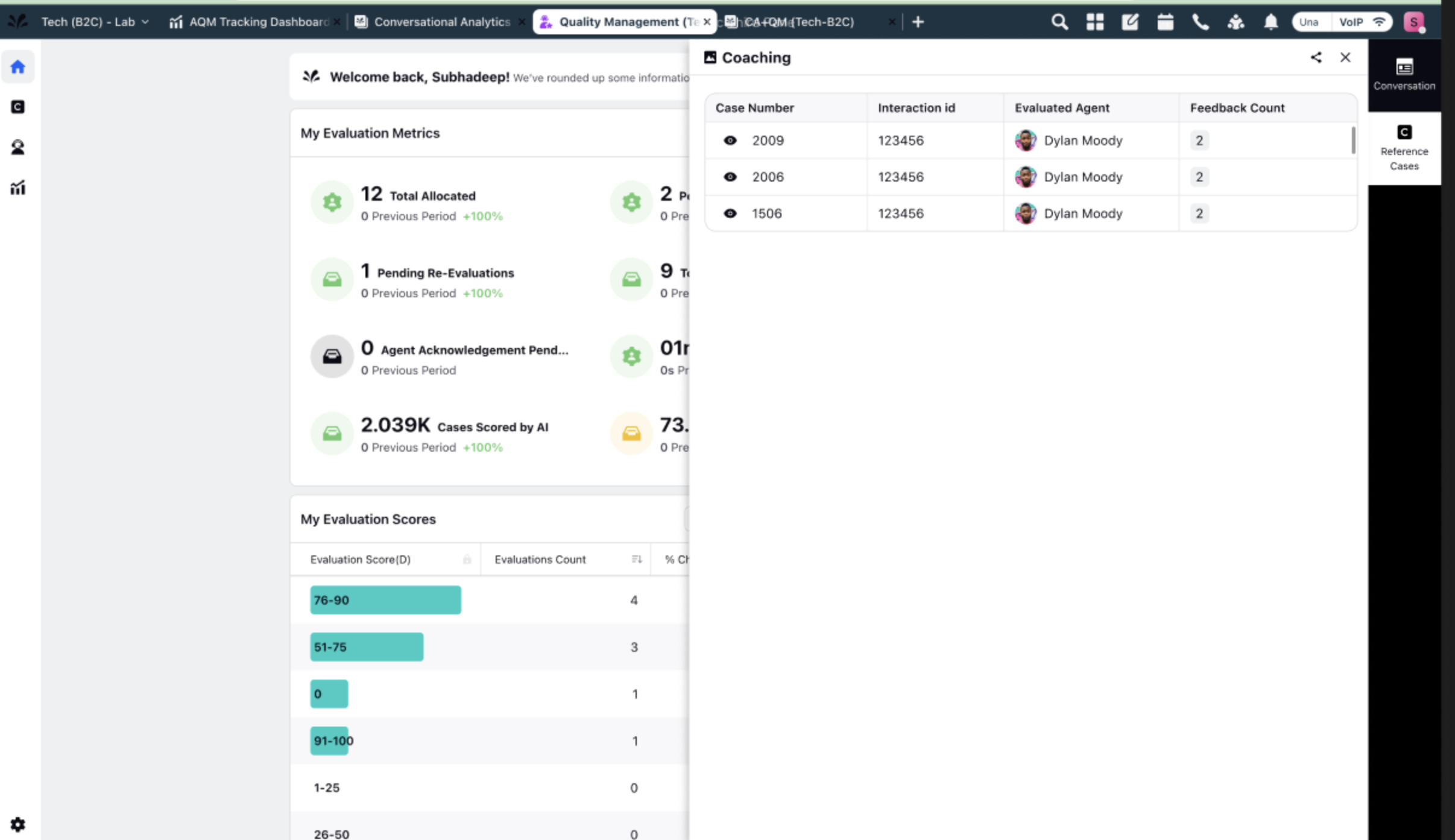

To maintain the quality and relevance of feedback used for process improvements, Quality Managers can mark each feedback entry as valid or invalid. This gatekeeping step helps ensure that only accurate and valuable feedback contributes to refining the AI's scoring.

This enables teams to tailor the feedback process according to the criticality of specific evaluation parameters, promoting more consistent and meaningful input.

Feedback Approval Flow

In a checklist, you can mark feedback provided by others as valid or invalid. Follow these steps to approve or reject feedback.

You can open feedback for review in either of two ways:

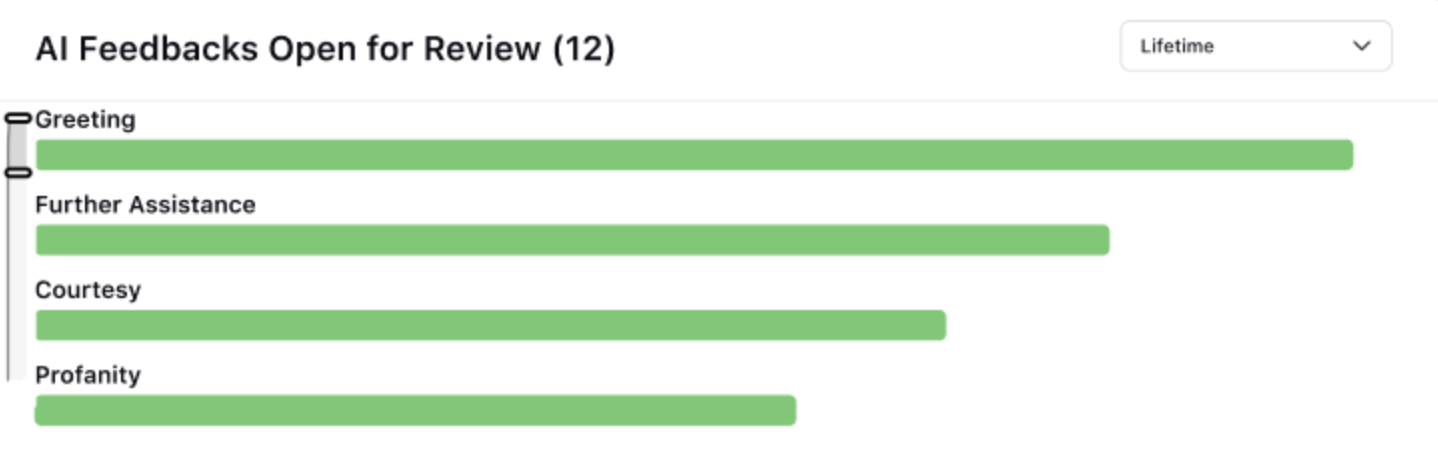

On the QM page, click the AI Feedbacks Open for Review widget.

The AI Feedbacks Open for Review widget shows an itemized distribution of the feedback entries that still need to be marked as valid or invalid.

Click any feedback entry.

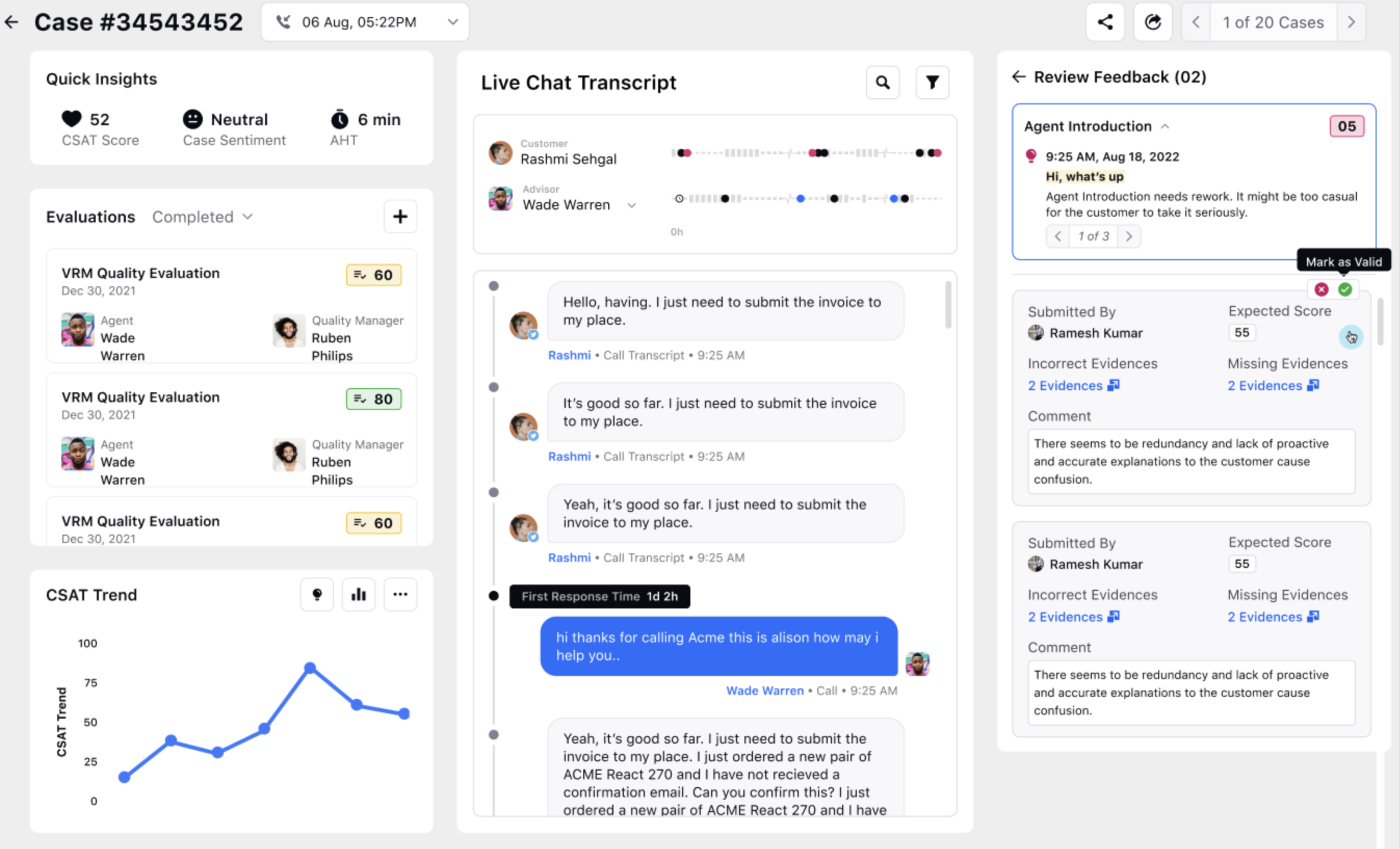

A virality pane opens, showing the Reference Cases tab.

Click the case whose feedback you want to see.

The case screen opens with the Review Feedback window in the top-right corner. The Review Feedback window lists all feedback shared on this case.

OR

On the Case Analytics page, click the CTA tooltip that appears under the Review Feedback window in the top-right corner of the screen.

The Review Feedback window opens, listing all feedback.

Select the tick for each feedback entry you consider valid and the cross for each entry you consider invalid. Each entry can be marked individually.

Feedback marked as valid is removed from the Review Feedback window. Only feedback marked as invalid continues to be displayed there.

Behavior on Re-running the Audit

If Re-run on the Same Checklist:

Both scores and insights are compared at the parameter level.

If the parameter score and the insights are the same, the feedback is retained.

If the parameter score or any of the insights differ, the feedback is deleted for that parameter.

The check on insights is made at the individual insight level, not just on the count of insights.

Scores alone are not the criterion for retaining feedback, because users can provide feedback that concerns insights only.

If the Parameter is Deleted from the Checklist Builder:

Feedback is deleted for the deleted parameter.

If Re-run on a Different Checklist:

All feedback records are deleted.
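The retention rules above can be sketched as a small function. This is a minimal illustration under stated assumptions, not the product's implementation: `ParameterResult`, `feedback_to_keep`, and the dictionary shapes are hypothetical, and insights are represented by hypothetical string identifiers.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class ParameterResult:
    """AI output for one checklist parameter (hypothetical shape)."""
    score: Optional[float]
    insights: FrozenSet[str]  # individual insight identifiers, not a count


def feedback_to_keep(old_checklist: str, new_checklist: str,
                     old_results: Dict[str, ParameterResult],
                     new_results: Dict[str, ParameterResult],
                     feedback: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Return the feedback that survives a re-run, keyed by parameter."""
    # Re-run on a different checklist: all feedback records are deleted.
    if old_checklist != new_checklist:
        return {}
    kept = {}
    for parameter, entries in feedback.items():
        new = new_results.get(parameter)
        if new is None:
            # Parameter was deleted from the checklist: drop its feedback.
            continue
        # Dataclass equality compares the score and the exact set of
        # insights, so a changed insight with the same count still differs.
        if old_results.get(parameter) == new:
            kept[parameter] = entries
    return kept
```

Comparing sets of insight identifiers rather than their counts reflects the rule that the check is made per insight.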

Reporting on Feedback

Refer to the following table for the details on dimensions and metrics for Automated Scoring Feedback.

Dimension/Metrics | Description |

AI Score Feedback Type | Whether the feedback provided is positive or negative. |

AI Score Feedback Reason | The feedback reason selected by the user providing the negative feedback. |

AI Score Feedback Provided by | The name of the user who provided the feedback. |

AI Score feedback provided on | The date and time at which the user provided the feedback. |

Incorrect Evidences | Insights selected in the feedback that are incorrectly detected. |

Missing Evidence | Messages selected in the feedback where an insight should have been detected. |

Comment | Comment entered by the user in the feedback. |

Incorrect Score | Expected Score of the checklist item on which feedback is provided. |

Case Number | The case number. |

Checklist Item Feedback Count | The total number of feedback entries provided on the checklist item. |

Checklist Item | The checklist item on which feedback is provided. |

Checklist Item Score | The AI score on the checklist item. |

Checklist Item Variance | The difference between the feedback score and the original score for the checklist item. |

Feedback Interaction Id | The interaction ID of the case interaction on which the feedback is provided. |

Workspace | Indicates the workspace to which the case is associated. |

Account | The name of the social network account. When used with case details, this will display the name of the "Brand Account" through which the first message associated to |

Social Network | Provides the name of the social network associated with the interaction. |

Case Level Custom Fields | <The custom fields associated with the case on which feedback is given>. |

Feedback Validation Status | Indicates whether the feedback has been reviewed and marked as valid, invalid, or is still under review. If the approval workflow is not enabled, all feedback is marked as valid by default. Positive feedback is always considered valid. |

Feedback Reviewed by | The user who marked the feedback as valid or invalid. For auto-approved feedback, this shows Sprinklr System. |

Feedback Reviewed at | The date and time at which the user marked the feedback as valid or invalid. For auto-approved feedback, this shows the time at which the feedback was provided. |
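Two of the fields in the table are derived rather than entered, and a short sketch may make their rules easier to follow. The code below is illustrative only: the function names, the `Review` class, and the status strings are hypothetical. It also assumes that positive feedback is treated like auto-approved feedback for the reviewer fields, and that Checklist Item Variance is the feedback score minus the AI score; the source does not state the sign convention.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Review:
    """Hypothetical holder for the three review-related report fields."""
    status: str                      # "Valid", "Invalid", or "Under Review"
    reviewed_by: Optional[str]
    reviewed_at: Optional[datetime]


def initial_review(feedback_type: str, provided_at: datetime,
                   approval_workflow_enabled: bool) -> Review:
    """Review fields at the moment the feedback is submitted."""
    # Positive feedback is always valid; without the approval workflow,
    # all feedback is valid by default (auto approval).
    if feedback_type == "Positive" or not approval_workflow_enabled:
        return Review("Valid", "Sprinklr System", provided_at)
    # Otherwise the entry waits for a reviewer's decision.
    return Review("Under Review", None, None)


def checklist_item_variance(feedback_score: float, ai_score: float) -> float:
    """Assumed sign convention: feedback score minus original AI score."""
    return feedback_score - ai_score
```

Under these assumptions, negative feedback submitted with the approval workflow enabled starts as under review with no reviewer recorded, while the same feedback with the workflow disabled is immediately valid with Sprinklr System as the reviewer.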