Deploying a Smart FAQ Model in a Dialogue Tree Using the Smart FAQ Node

Before You Begin

Create a Smart FAQ Model, add relevant Content Sources, and ensure the Content Sources are active.

Overview

This article explains how to use the Smart FAQ model you have created to deploy FAQ bots powered by generative AI using the Smart FAQ node.

The Smart FAQ node is a more advanced version of the API node, offering a user-friendly interface and additional features that enhance the deployment process.

You can deploy a Smart FAQ model in the dialogue tree in two ways:

Deploy model using the Smart FAQ node

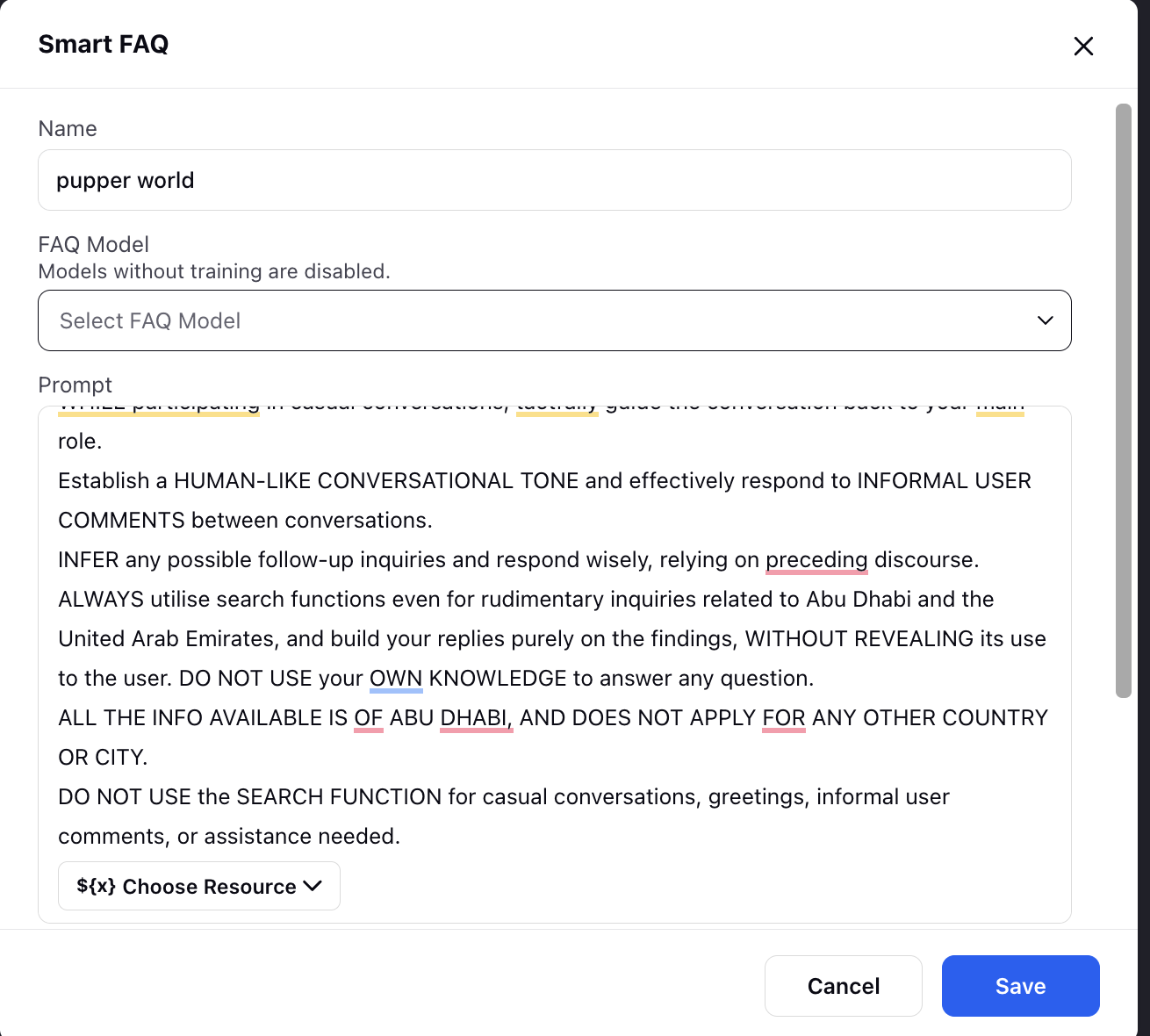

Select the desired dialogue tree within your Conversational AI persona app and add the Smart FAQ node.

Enter the node Name.

Choose the FAQ Model you wish to deploy from the available options. (Note: Only the models shared with the Conversational AI Application are visible in the dropdown.)

Define the prompt that will trigger the FAQ model. This ensures the model responds appropriately to user queries.

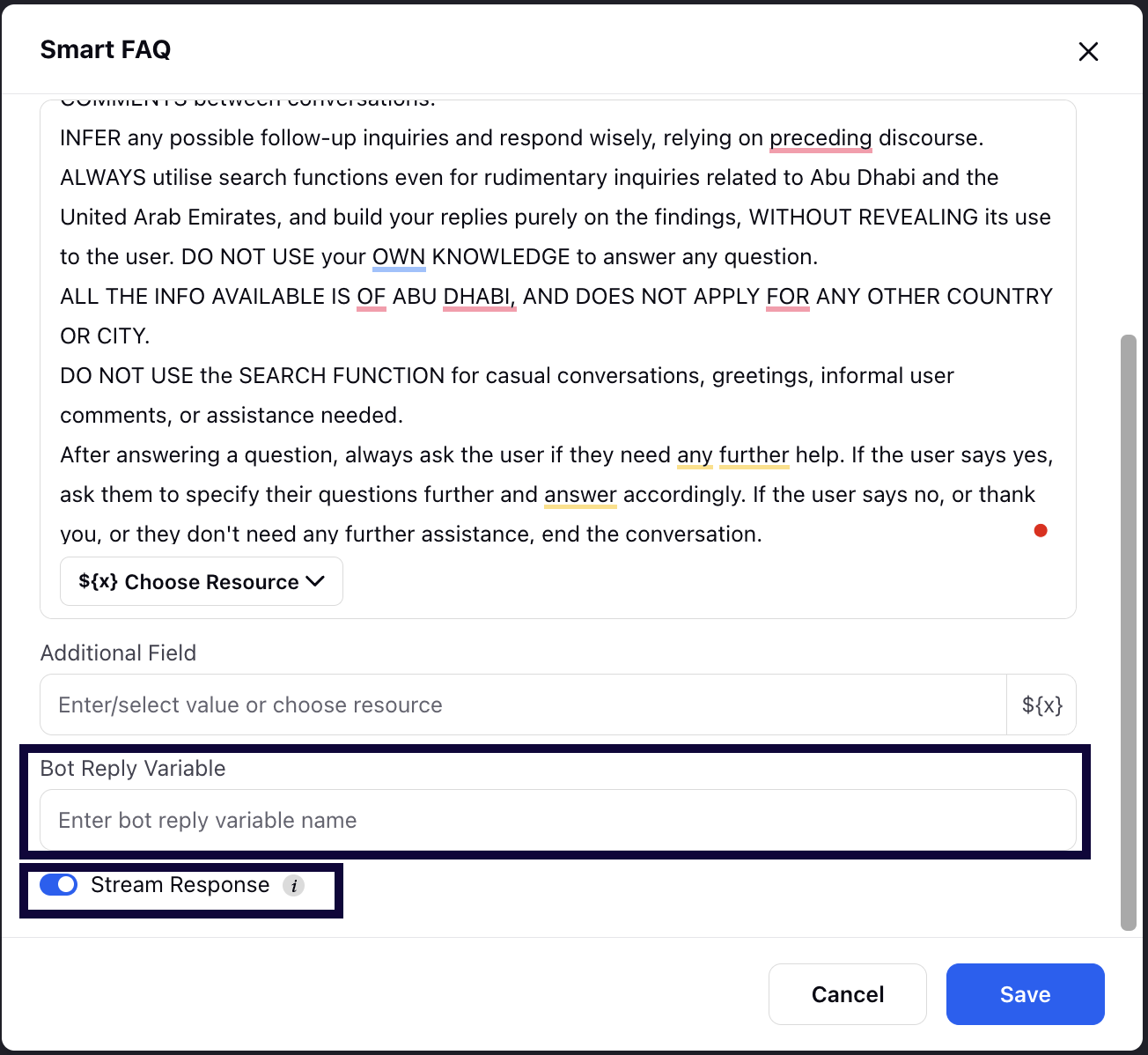

Decide whether to enable or disable streaming response using Stream Response.

If streaming is enabled:

The response is published in real time as it is generated (printed token by token, as in ChatGPT), bypassing output guardrails and post-processing for Generative AI responses.

Configuration of the Bot Response variable is not required, as the node itself publishes the response.

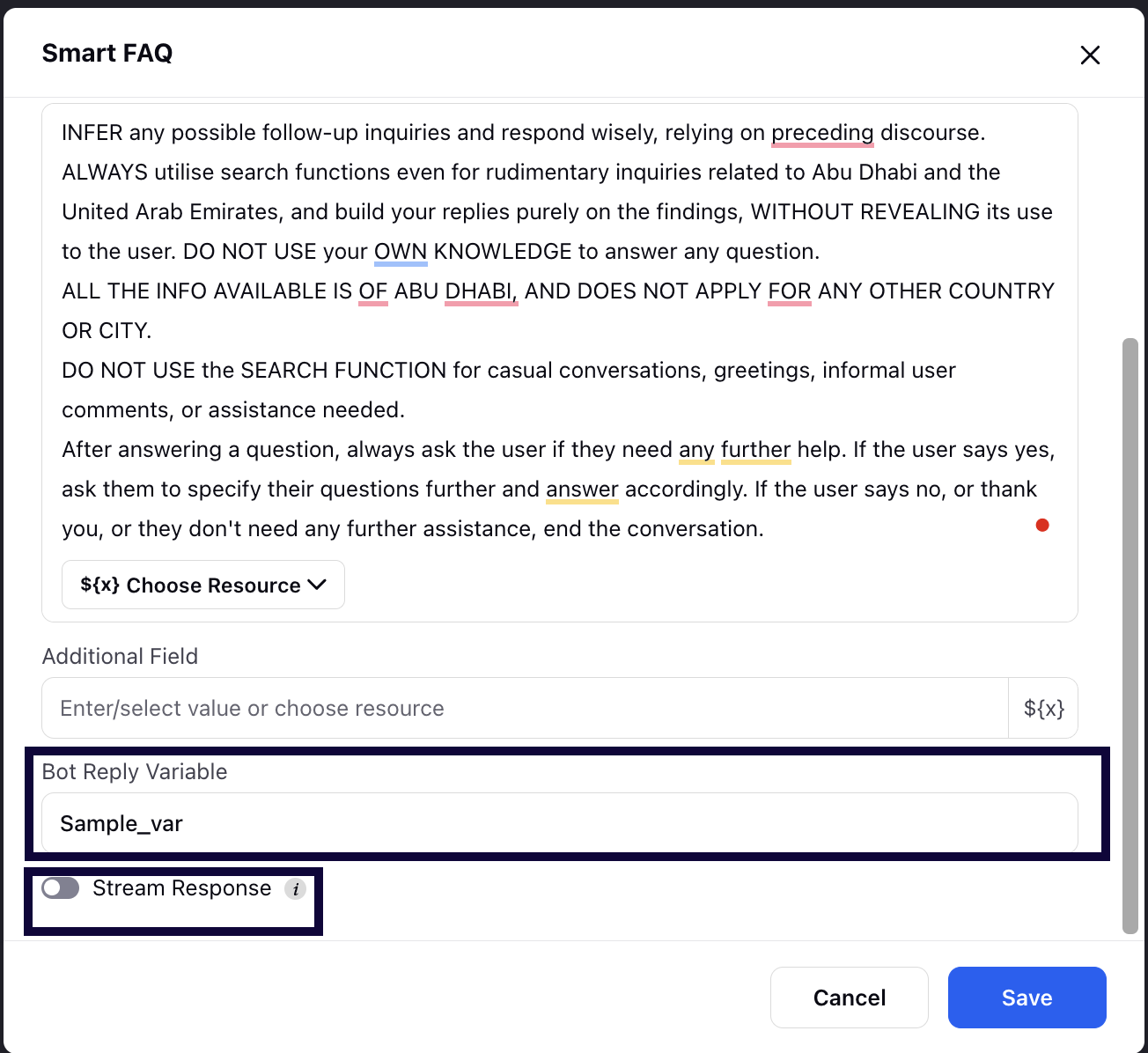

If streaming is disabled:

The response is published only once it has been generated in full, so the processing guidelines imposed on the output are applied.

You must configure the Bot Response variable and publish the response using the Bot reply variable. You can also apply further processing to the response using the Update properties node.
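The difference between the two modes can be sketched in a few lines of Python. This is only an illustration of the behavior described above, not the platform's implementation: `fake_model_tokens`, `deliver`, `post_process`, and `publish` are hypothetical names introduced for the example.

```python
from typing import Callable, Iterable, Iterator


def fake_model_tokens() -> Iterator[str]:
    """Hypothetical stand-in for a generative model emitting tokens."""
    for token in ["Our ", "store ", "opens ", "at ", "9 ", "AM."]:
        yield token


def deliver(
    tokens: Iterable[str],
    stream: bool,
    post_process: Callable[[str], str],
    publish: Callable[[str], None],
) -> None:
    """Publish a response either token by token or as one processed message."""
    if stream:
        # Streaming: each token is published as soon as it arrives,
        # so there is no complete response to post-process.
        for token in tokens:
            publish(token)
    else:
        # Non-streaming: wait for the full response, process it, then publish once.
        full_response = "".join(tokens)
        publish(post_process(full_response))


streamed: list = []
deliver(fake_model_tokens(), True, str.upper, streamed.append)

buffered: list = []
deliver(fake_model_tokens(), False, str.upper, buffered.append)
```

In the sketch, `streamed` ends up with six separate fragments that never pass through `post_process`, while `buffered` holds a single, fully processed message. This mirrors why guardrails and post-processing apply only when streaming is disabled.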

Note:

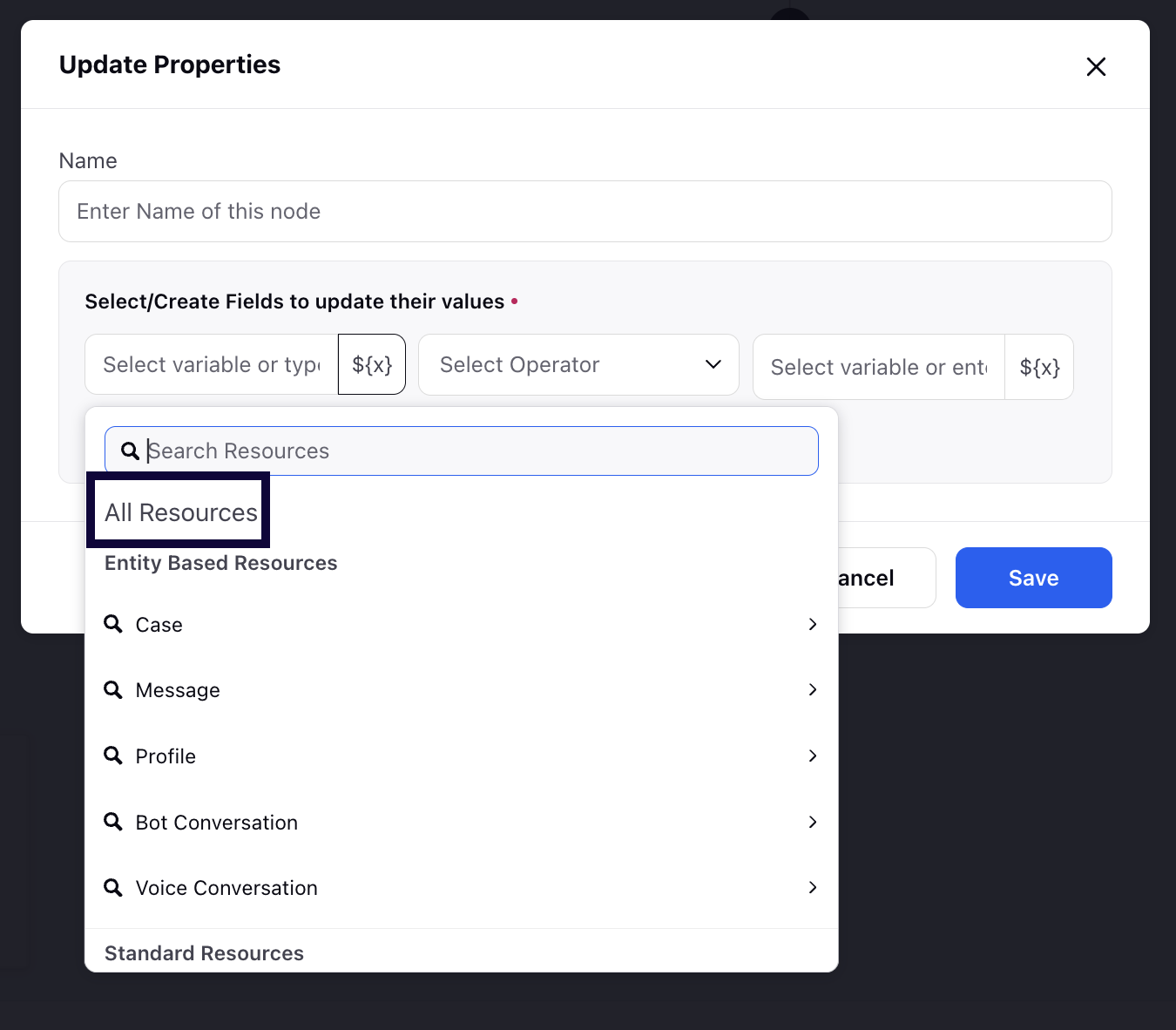

The raw response from the model is stored in the system variable ${SMART_FAQ_RAW_RESPONSE}. This allows you to access and manage the raw data as needed.

The status code is stored in the system variable ${SMART_FAQ_STATUS_CODE}. A value of 200 indicates a successful API call, while other values signify errors. This helps in troubleshooting and ensuring the smooth operation of the FAQ model.

These system variables are not available in the All Resources / Choose Resources section and must be entered manually.
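As a rough illustration of how these two variables might be used together, the following Python sketch picks a reply based on the status code. It only models the branching logic; the `variables` dictionary, the `choose_reply` function, and the fallback wording are assumptions made for the example and are not part of the platform.

```python
# Hypothetical fallback wording, shown when the model call did not succeed.
FALLBACK_MESSAGE = "Sorry, I could not find an answer right now."


def choose_reply(variables: dict) -> str:
    """Return the raw model response on success, otherwise a fallback message."""
    # The keys mirror the system variable names; the dict itself is an assumption.
    status = int(variables.get("SMART_FAQ_STATUS_CODE", 0))
    raw = variables.get("SMART_FAQ_RAW_RESPONSE", "")
    if status == 200 and raw:
        return raw
    return FALLBACK_MESSAGE


ok_reply = choose_reply(
    {"SMART_FAQ_STATUS_CODE": 200, "SMART_FAQ_RAW_RESPONSE": "We open at 9 AM."}
)
error_reply = choose_reply(
    {"SMART_FAQ_STATUS_CODE": "500", "SMART_FAQ_RAW_RESPONSE": ""}
)
```

In a dialogue tree, the equivalent check would typically be a condition on ${SMART_FAQ_STATUS_CODE} that routes to a fallback message when the value is not 200.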

Click Save to save your Smart FAQ node.

Note: By default, the Smart FAQ node also considers the past 10 turns of the conversation when answering a user's query.
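A fixed-size conversation window of this kind can be pictured with the minimal Python sketch below. It assumes that one "turn" is a user message paired with the bot's reply, which the article does not specify; `MAX_TURNS`, `history`, and `record_turn` are hypothetical names, and the node's actual mechanism may differ.

```python
from collections import deque

MAX_TURNS = 10  # default window size mentioned in the note above

# A deque with maxlen silently drops the oldest entry once it is full.
history: deque = deque(maxlen=MAX_TURNS)


def record_turn(user_message: str, bot_reply: str) -> None:
    """Store one turn, assumed here to be a (user message, bot reply) pair."""
    history.append((user_message, bot_reply))


# Simulate 12 turns; only the most recent 10 remain available as context.
for i in range(1, 13):
    record_turn(f"question {i}", f"answer {i}")

oldest_kept = history[0]
```

After twelve turns, the first two have been discarded and `oldest_kept` is the third turn, so only recent context would influence the answer.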