What is Peer-to-Peer Calibration?


You can create a Peer-to-Peer Calibration flow, bringing together a group of auditors to collectively evaluate a single case. These evaluations are conducted on new cases with no existing evaluations, aiming to calculate the variance among different P2P auditors.

Note: The Primary Evaluator is allowed to edit their evaluation a maximum of three times during an ongoing Peer-to-Peer (P2P) calibration process.

Variance Calculation Scenarios

Primary Evaluator

Variance is calculated in comparison to the assessment provided by a designated Primary Evaluator. This approach provides insights into individual auditor deviations from the primary reference.

Mean

Variance is calculated relative to the average (mean) of all P2P audits. This method helps gauge how each auditor's evaluation differs from the collective average.

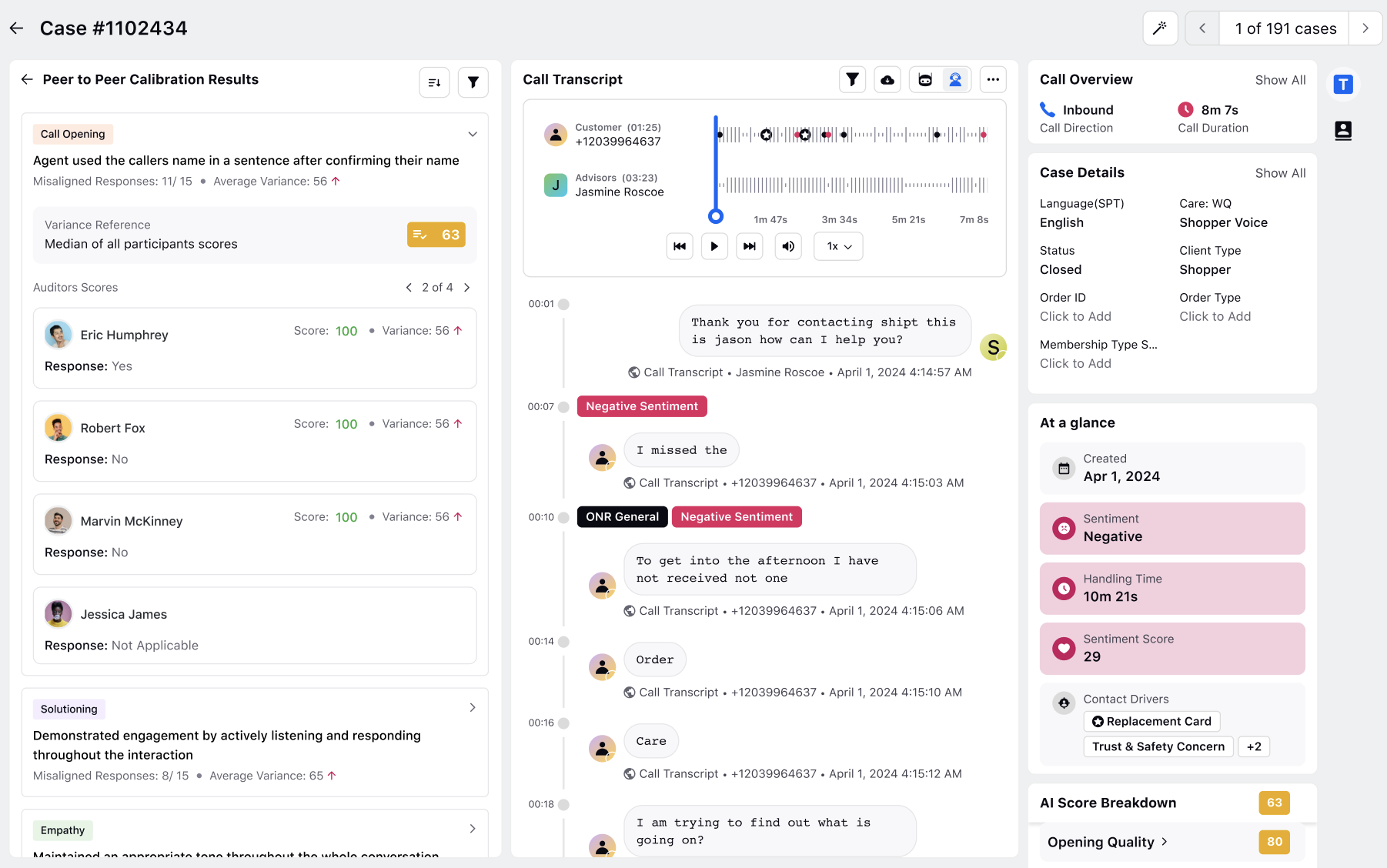

Median

Variance is computed in relation to the median of all P2P audits. The median is the middle value in a dataset. Utilizing the median as a reference point offers a robust measure, particularly in scenarios where extreme values might skew the mean.

By implementing these variance calculation scenarios, you can comprehensively assess and manage the consistency of P2P evaluations, facilitating a more accurate understanding of auditor performance.
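The three reference points described above can be compared with a small sketch. It is illustrative only: it assumes that "variance" means the absolute difference between an auditor's overall score and the chosen reference score, which the product may compute differently. The auditor names, the scores, and the helper functions `reference_score` and `deviations` are hypothetical.

```python
from statistics import mean, median

def reference_score(scores, mode, primary=None):
    """Return the reference score for the chosen scenario."""
    if mode == "primary":
        return scores[primary]
    if mode == "mean":
        return mean(scores.values())
    if mode == "median":
        return median(scores.values())
    raise ValueError(f"unknown mode: {mode}")

def deviations(scores, mode, primary=None):
    """Absolute difference of each auditor's score from the reference (assumed definition)."""
    ref = reference_score(scores, mode, primary)
    return {auditor: abs(score - ref) for auditor, score in scores.items()}

# Hypothetical overall scores for one case; "dana" is an outlier.
scores = {"asha": 80, "ben": 90, "chen": 85, "dana": 40}

vs_primary = deviations(scores, "primary", primary="asha")
vs_mean = deviations(scores, "mean")
vs_median = deviations(scores, "median")
```

With these sample scores, the outlier pulls the mean down to 73.75 while the median stays at 82.5, so the other three auditors appear further from the mean than from the median.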

Performing Peer-to-Peer Calibrations

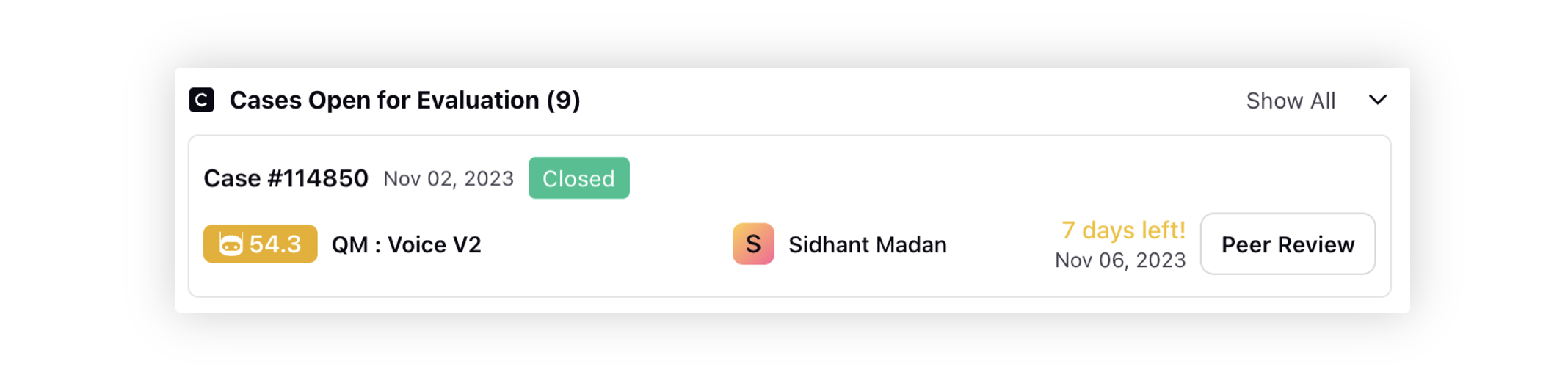

After a P2P calibration evaluation is assigned, the case appears on the auditor's home screen in the Cases Open for Evaluation widget, as shown below.

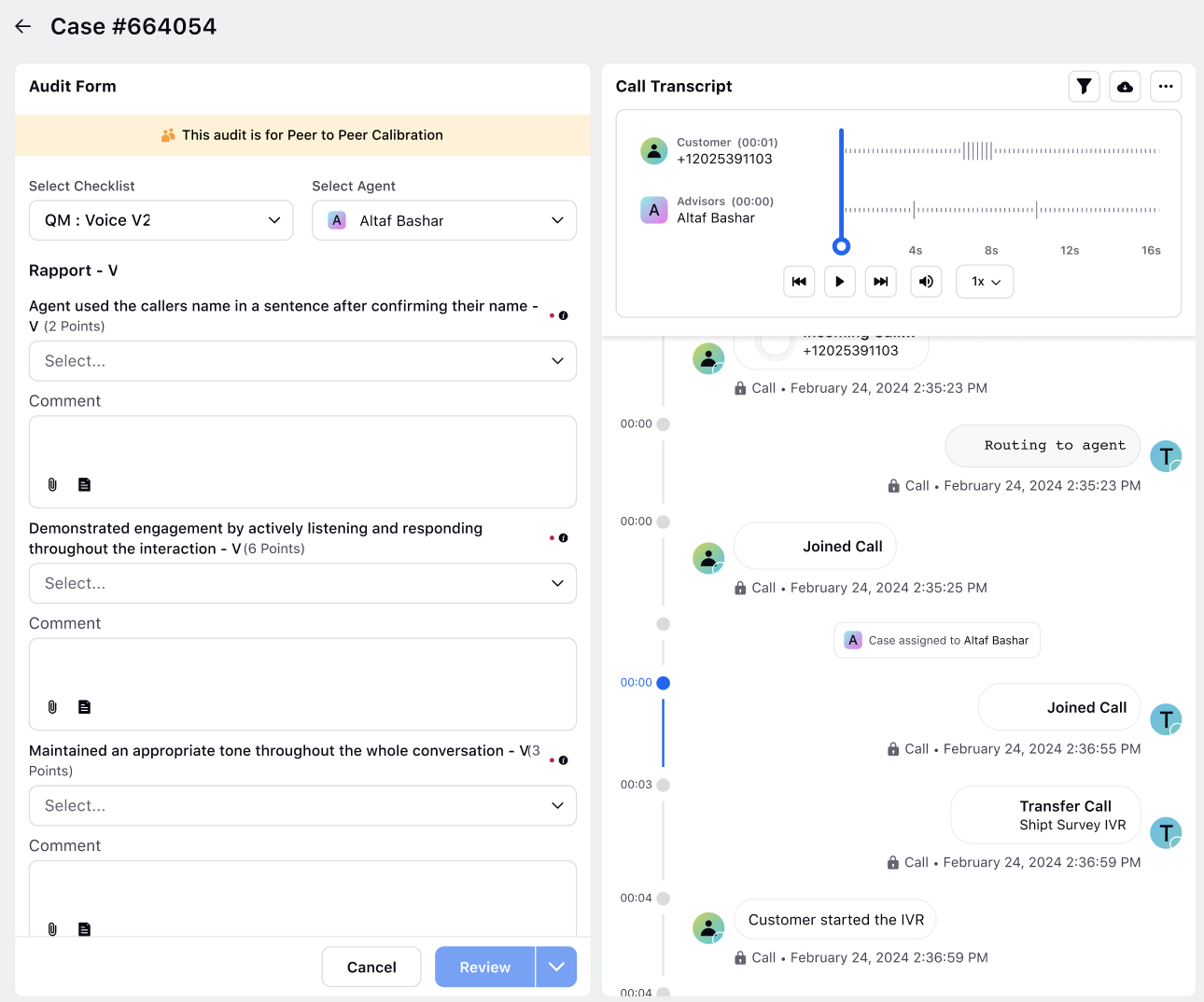

Clicking on Peer Review takes you to the Case Analytics page with the open evaluation form view. Upon landing on the new case interaction where the audit is performed, the user sees a notification at the top indicating whether the assigned audit is a P2P calibration audit.

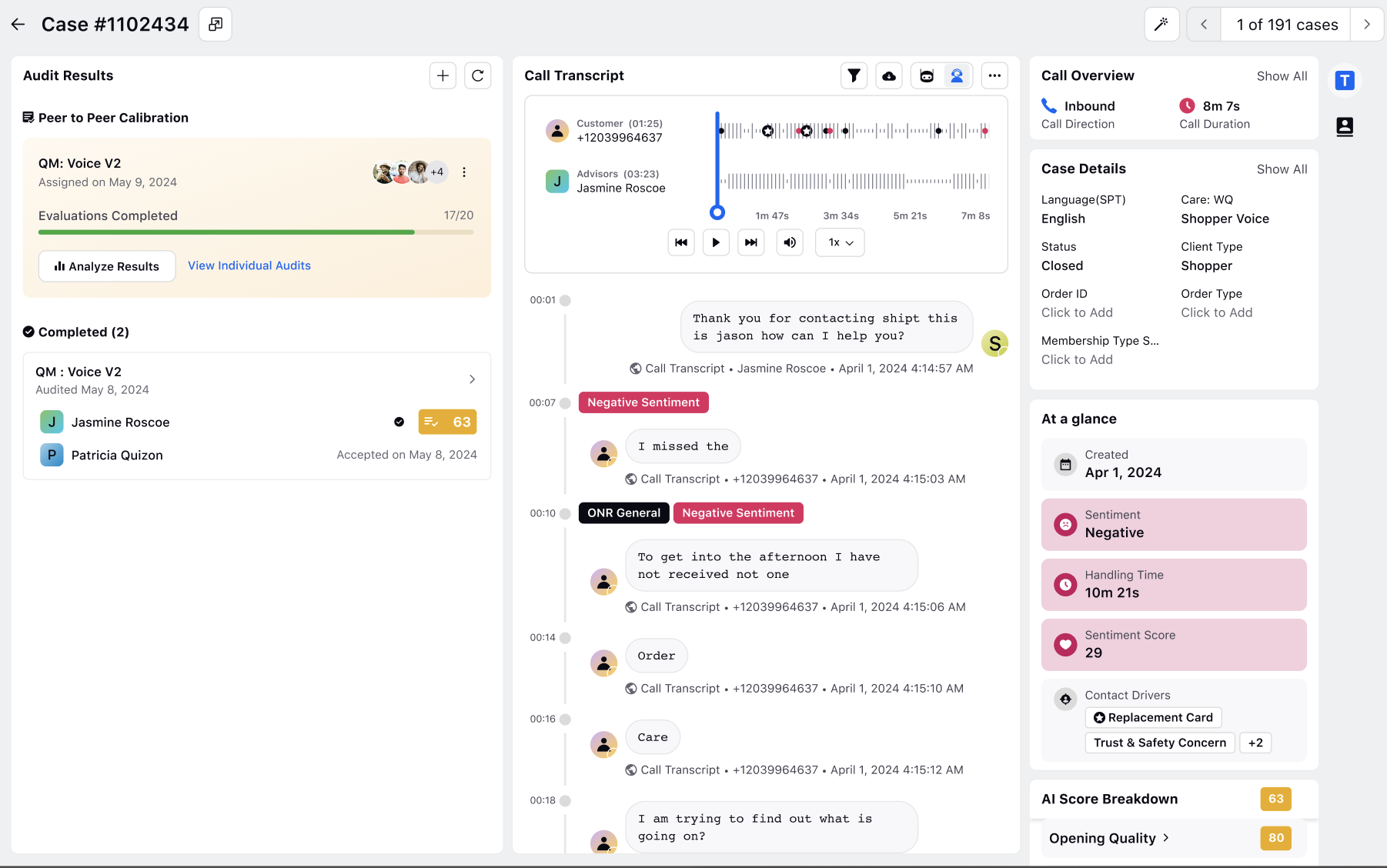

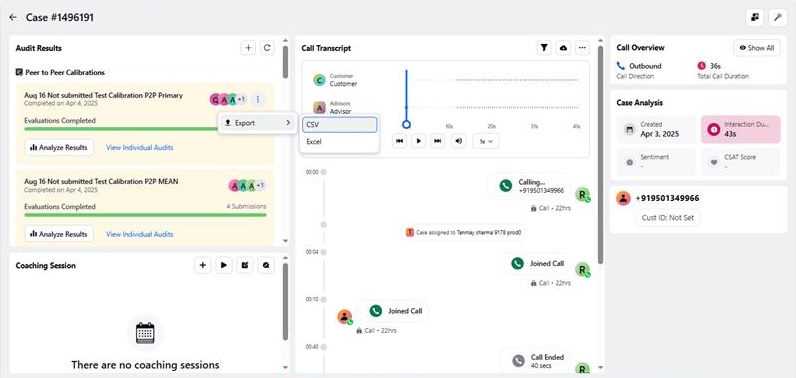

Peer-to-Peer Calibration Card

The Peer-to-Peer Calibration card provides detailed insights and allows for a deep dive into the calibration process. Here’s an in-depth explanation of its features and functionalities:

Note: When multiple Peer-to-Peer evaluation cards are displayed, they are sorted in two steps:

First, the cards are sorted by % Openness.

If two or more cards have the same % Openness, they are sorted by Assigned Time. The card that was assigned earlier is displayed before the one that was assigned later.
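The two-step ordering in the note above can be sketched as a sort on a compound key. The field names `openness_pct` and `assigned_at` are hypothetical, and the article does not say whether higher or lower % Openness comes first, so the direction is left as a parameter (assumed here to default to highest first).

```python
from datetime import datetime

# Hypothetical cards; the field names are assumptions for illustration.
cards = [
    {"name": "Case A", "openness_pct": 50, "assigned_at": datetime(2024, 1, 3, 9, 0)},
    {"name": "Case B", "openness_pct": 75, "assigned_at": datetime(2024, 1, 2, 9, 0)},
    {"name": "Case C", "openness_pct": 50, "assigned_at": datetime(2024, 1, 1, 9, 0)},
]

def sort_cards(cards, highest_openness_first=True):
    """Sort by % Openness, then by Assigned Time (earliest first) on ties."""
    sign = -1 if highest_openness_first else 1
    return sorted(cards, key=lambda c: (sign * c["openness_pct"], c["assigned_at"]))

ordered = [c["name"] for c in sort_cards(cards)]
```

Case A and Case C tie at 50, so Case C, which was assigned earlier, is placed ahead of Case A.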

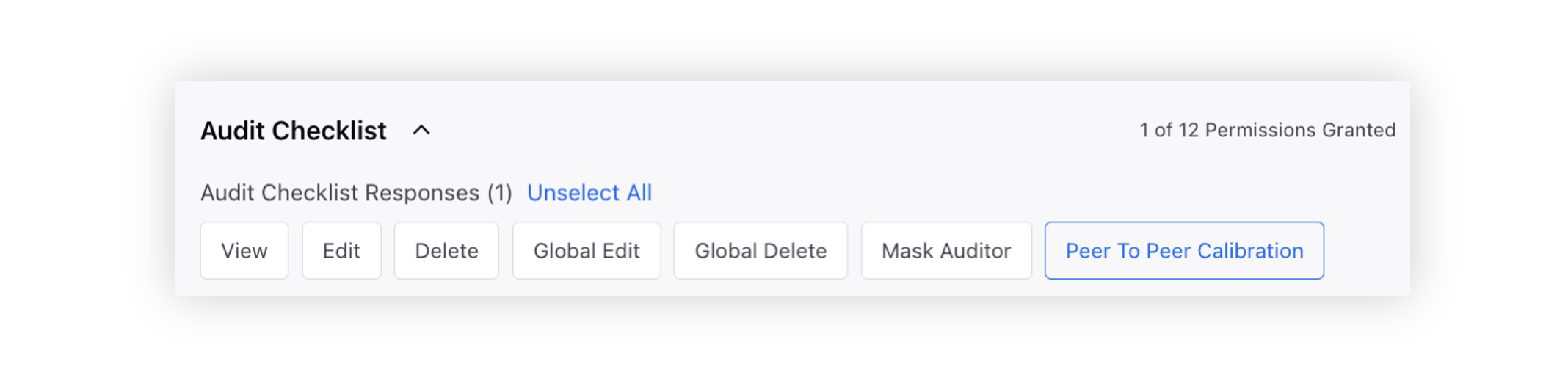

User Permissions

To access the scores given by peer calibrators, users must have the Peer to Peer Calibration permission under Audit Checklist. This ensures that only authorized users can view detailed calibration data.

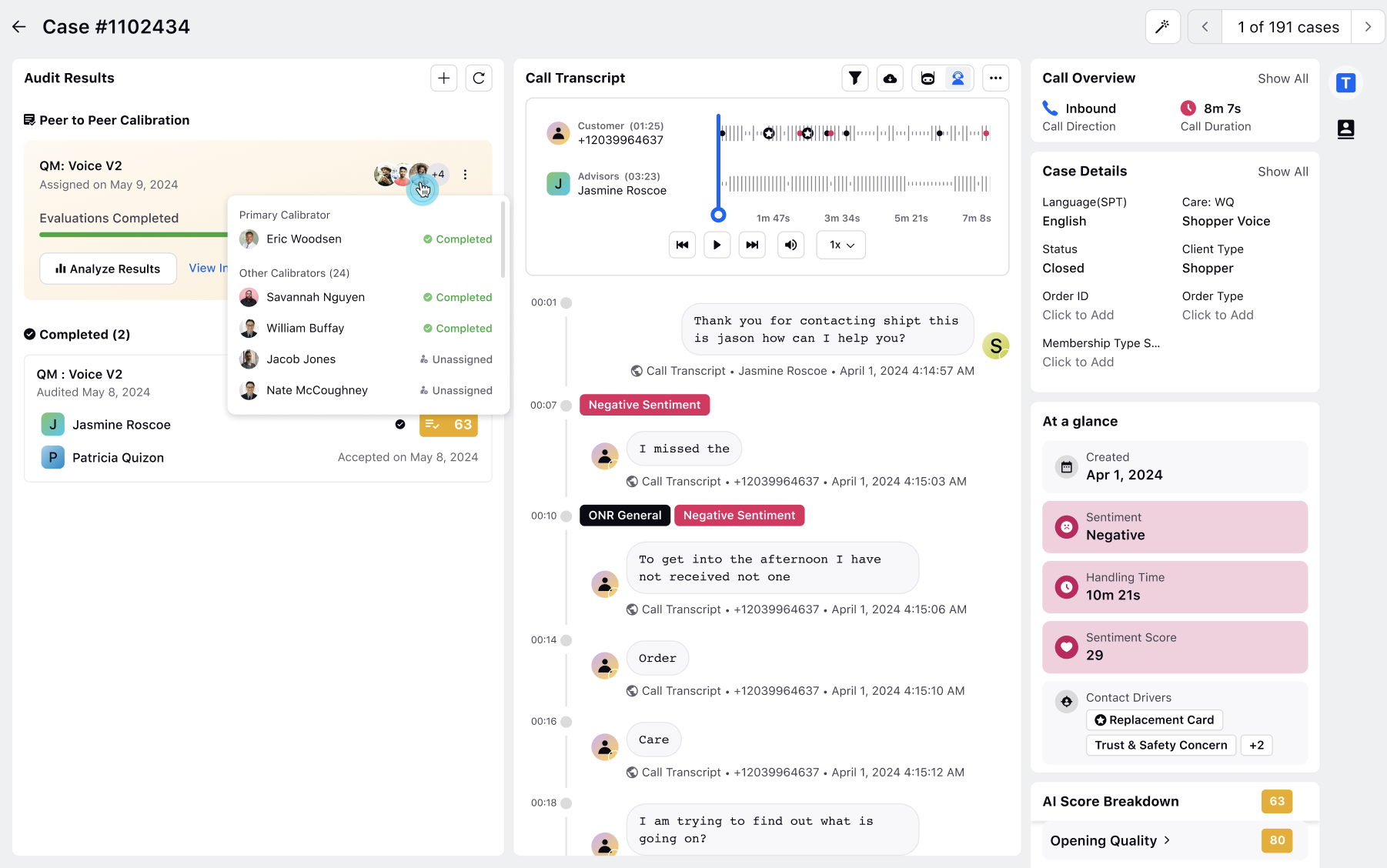

Calibrators Details

Hovering over user icons provides a list of primary calibrators and other calibrators, along with their completion status (completed, unassigned, etc.).

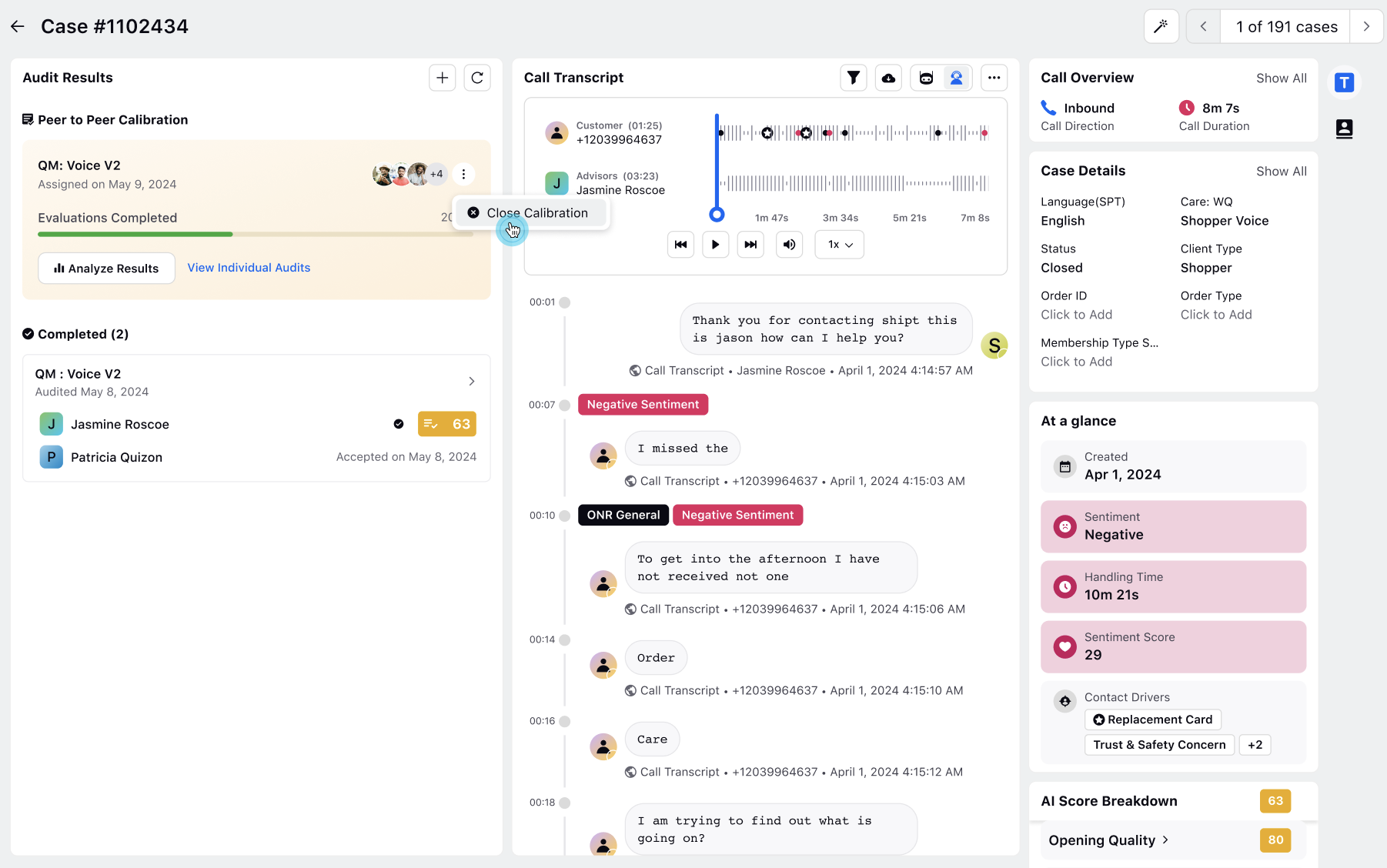

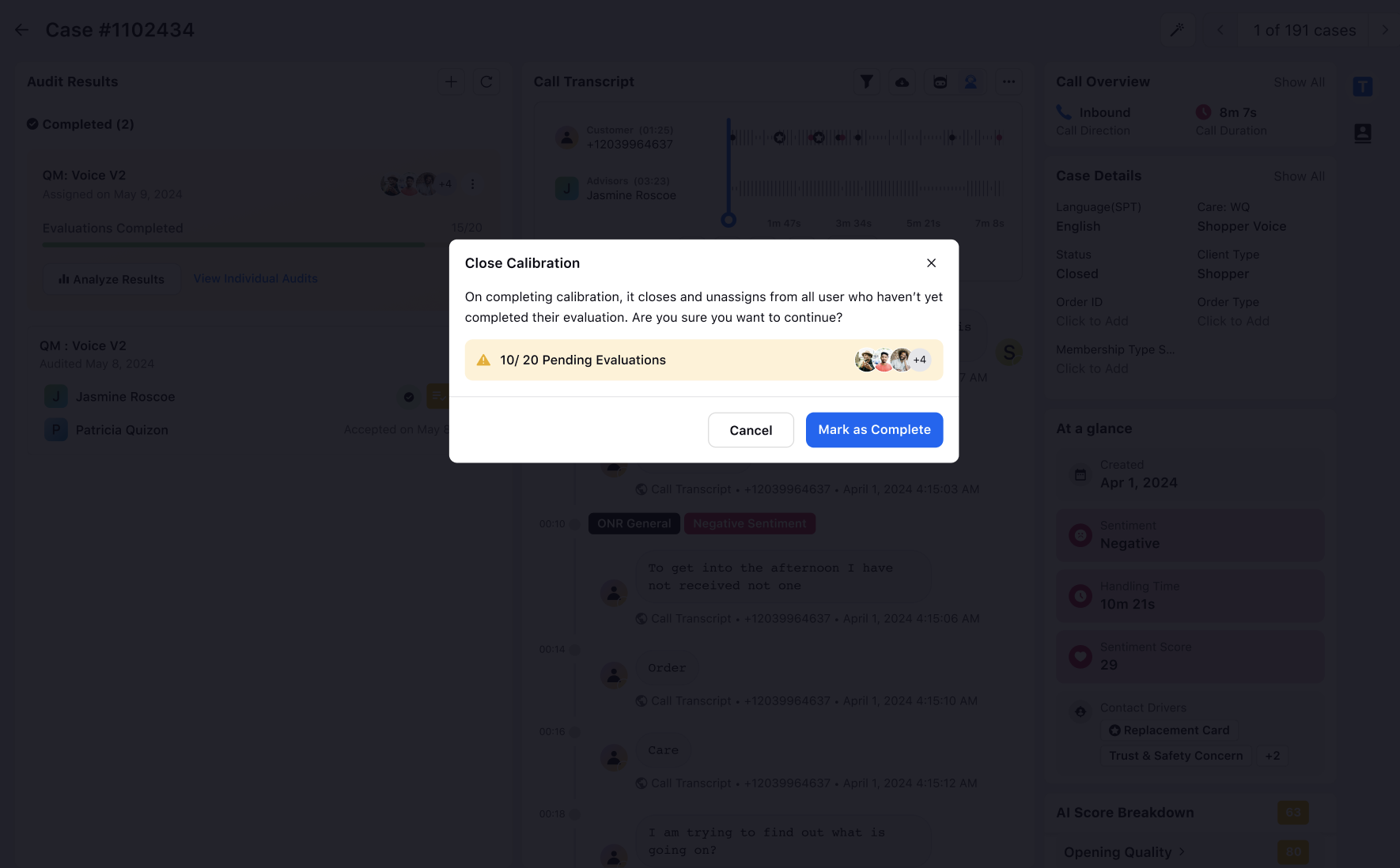

Close Calibrations

Users can close a calibration by hovering over the Options icon on the card and selecting "Close Calibration." This action unassigns all users who have not yet completed their evaluation.

Analyzing Results

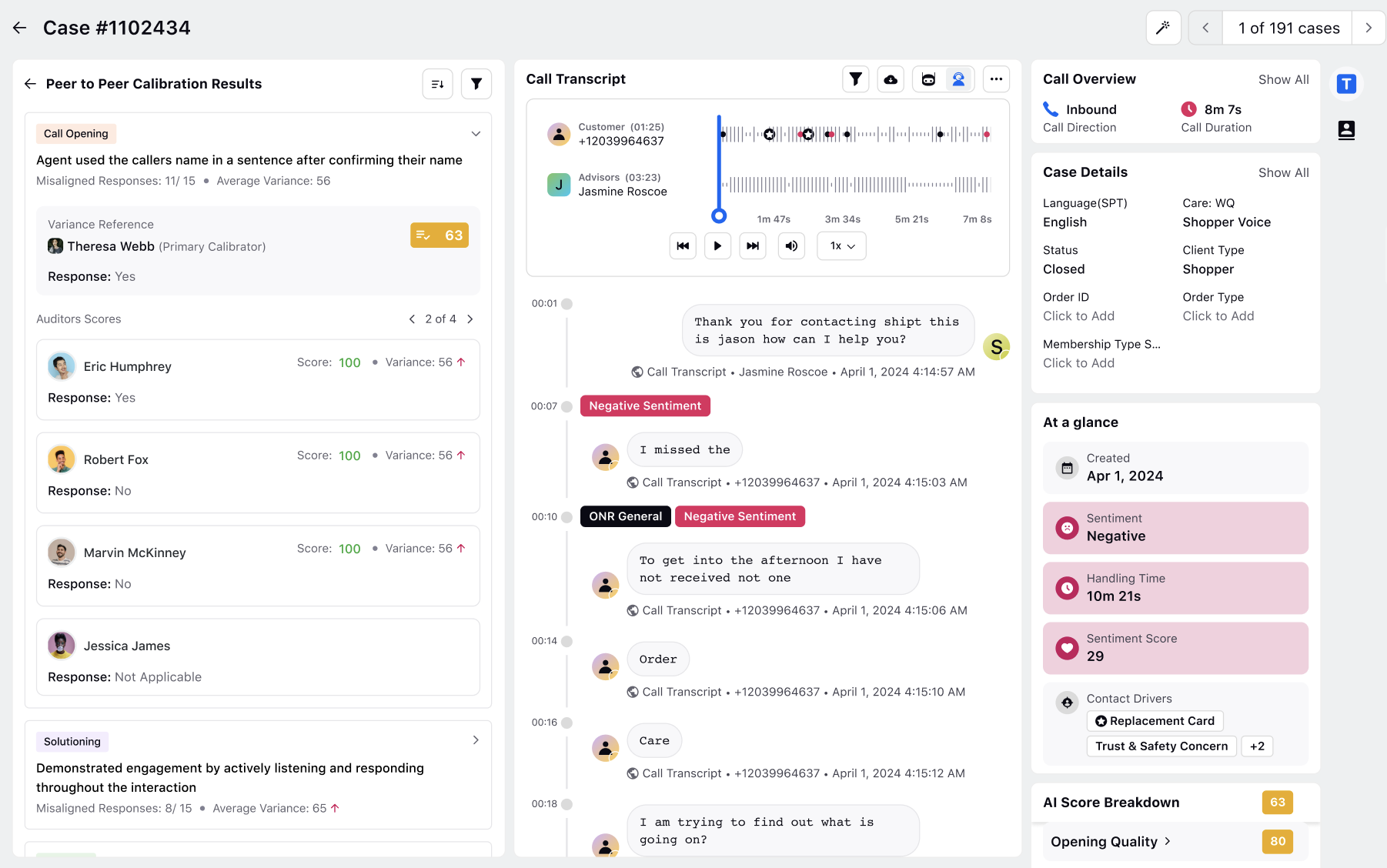

Click "Analyze Results" to view detailed calibration results, including misaligned responses and average variances for all questions. You can identify the categories, items, and auditors with the highest variances in scores, enabling a targeted approach to addressing inconsistencies. Click any question to view detailed calibrator-wise insights and scores. This lets you see how each calibrator evaluated the question, providing transparency and exposing any discrepancies in scoring.

Primary Calibrator: Results cannot be analyzed until the primary calibrator has completed their evaluation. Variances update in real time as calibrators submit their evaluations.

Mean and Median Scores: Analysis of the mean and median results cannot be conducted until all evaluations for assigned calibrators are completed.
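The availability rules above can be expressed as a small check. This is a sketch under assumptions: the status strings and the function `can_analyze` are hypothetical, and calibrators who were unassigned (for example, through Close Calibration) are assumed not to count as assigned.

```python
def can_analyze(mode, statuses, primary):
    """Return True when results for the given reference mode can be analyzed."""
    if mode == "primary":
        # Only the primary calibrator's evaluation is required.
        return statuses.get(primary) == "completed"
    if mode in ("mean", "median"):
        # Every calibrator who is still assigned must have completed.
        assigned = [s for s in statuses.values() if s != "unassigned"]
        return all(s == "completed" for s in assigned)
    raise ValueError(f"unknown mode: {mode}")

# Hypothetical calibration: one evaluation still pending, one user unassigned.
statuses = {"asha": "completed", "ben": "pending", "chen": "unassigned"}

primary_ready = can_analyze("primary", statuses, primary="asha")
mean_ready = can_analyze("mean", statuses, primary="asha")
```

In this example, primary-based results are available, while mean and median results wait for the remaining assigned calibrator.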

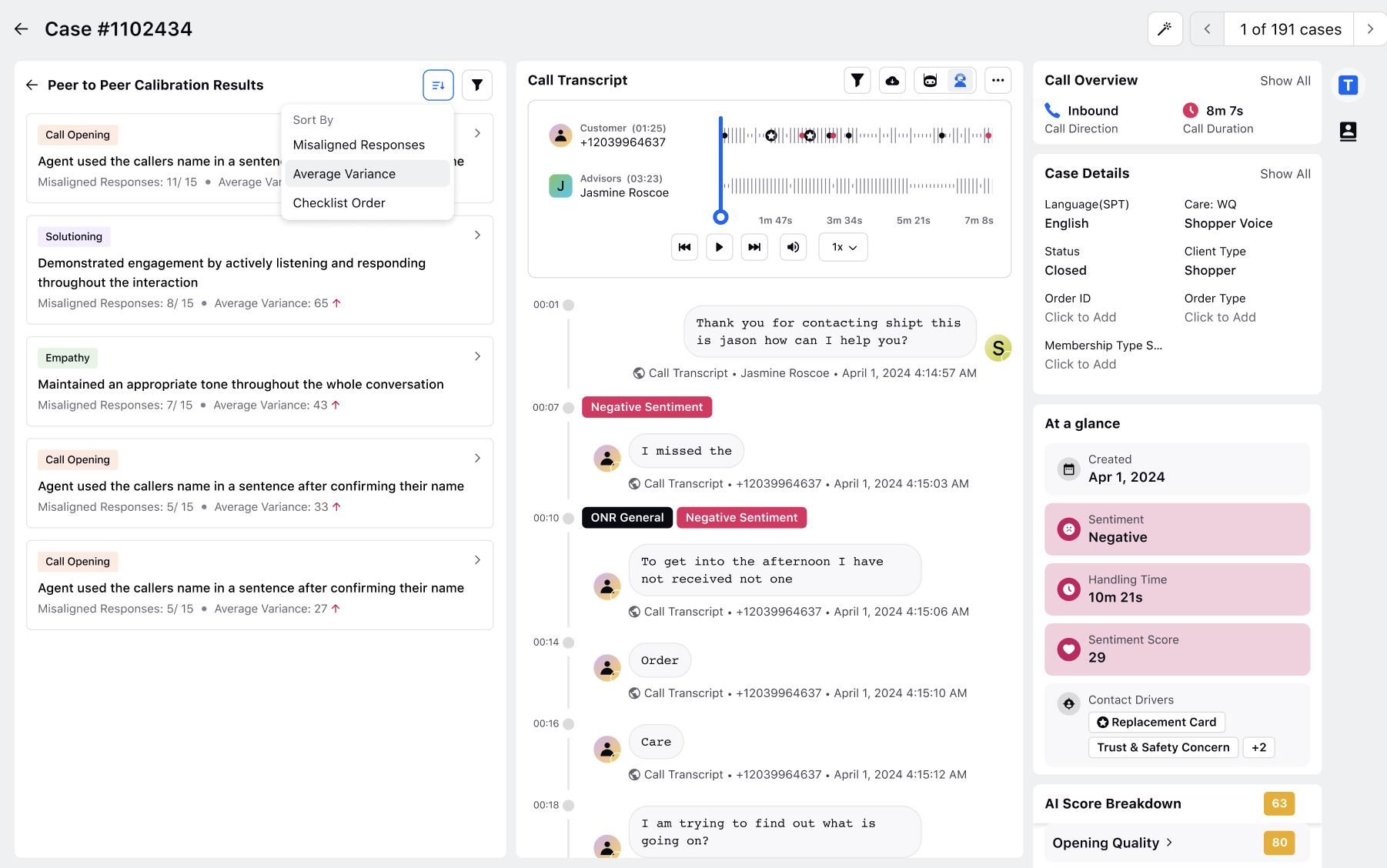

Sorting Options

Calibration results can be sorted by Misaligned Responses, Average Variance, and Checklist Order.
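As a rough illustration of the three sort options, the sketch below summarizes each question and orders the results. It assumes that a "misaligned response" is a calibrator score that differs from the reference score, that average variance is the mean absolute difference from the reference, and that the two metric sorts run from highest to lowest. None of these definitions is confirmed by the product, and the question data is hypothetical.

```python
# Hypothetical per-question data: a reference score and each calibrator's score.
questions = [
    {"order": 1, "text": "Greeting", "reference": 5, "scores": [5, 5, 3]},
    {"order": 2, "text": "Resolution", "reference": 4, "scores": [2, 5, 1]},
    {"order": 3, "text": "Closing", "reference": 3, "scores": [3, 3, 3]},
]

def summarize(question):
    """Compute misaligned responses and average variance (assumed definitions)."""
    diffs = [abs(score - question["reference"]) for score in question["scores"]]
    return {
        "order": question["order"],
        "text": question["text"],
        "misaligned": sum(1 for d in diffs if d > 0),
        "avg_variance": sum(diffs) / len(diffs),
    }

summary = [summarize(q) for q in questions]

by_misaligned = sorted(summary, key=lambda r: r["misaligned"], reverse=True)
by_variance = sorted(summary, key=lambda r: r["avg_variance"], reverse=True)
by_checklist = sorted(summary, key=lambda r: r["order"])
```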

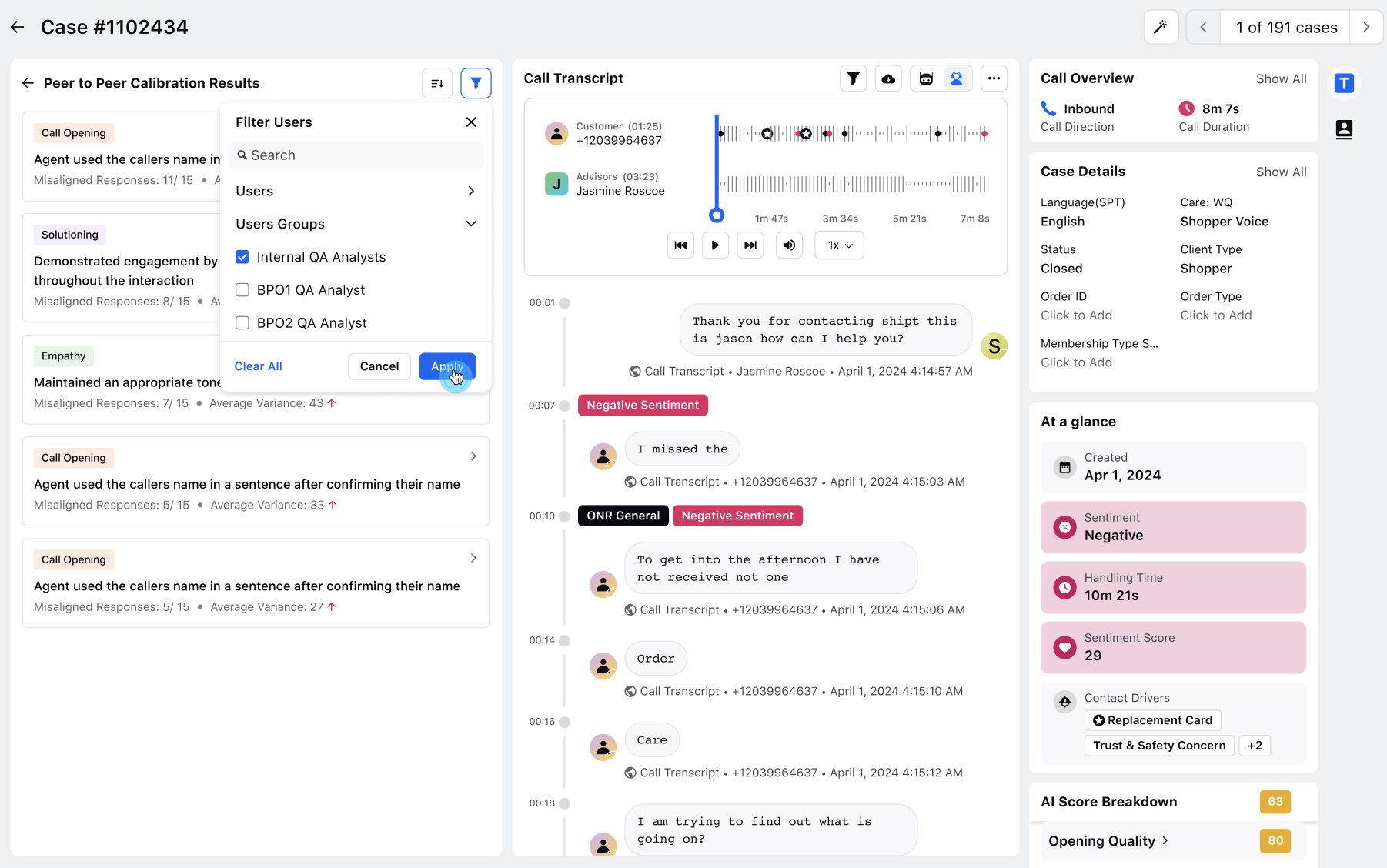

Filter Calibrators

Results can be filtered by calibrators and their groups, providing a customized view of the data.

Note: Users have an export option directly from the P2P analysis card, simplifying the process and reducing dependencies on manual reporting updates. The export functionality is available for audits created using Case checklists and allows users with appropriate export permissions to download results in both CSV and Excel formats.

The Export option appears under the More option (represented by three dots) only after the P2P calibration is completed. After clicking Export, users can choose between two file formats. The layout of the exported file is the same in both formats and cannot be changed: audit properties appear first, followed by custom fields.

CSV: The corresponding metrics and dimensions for the audit type are downloaded as a CSV file. The file is named Checklist.csv.

Excel: The corresponding metrics and dimensions for the audit type are downloaded as an Excel file. The file is named Checklist.xlsx.

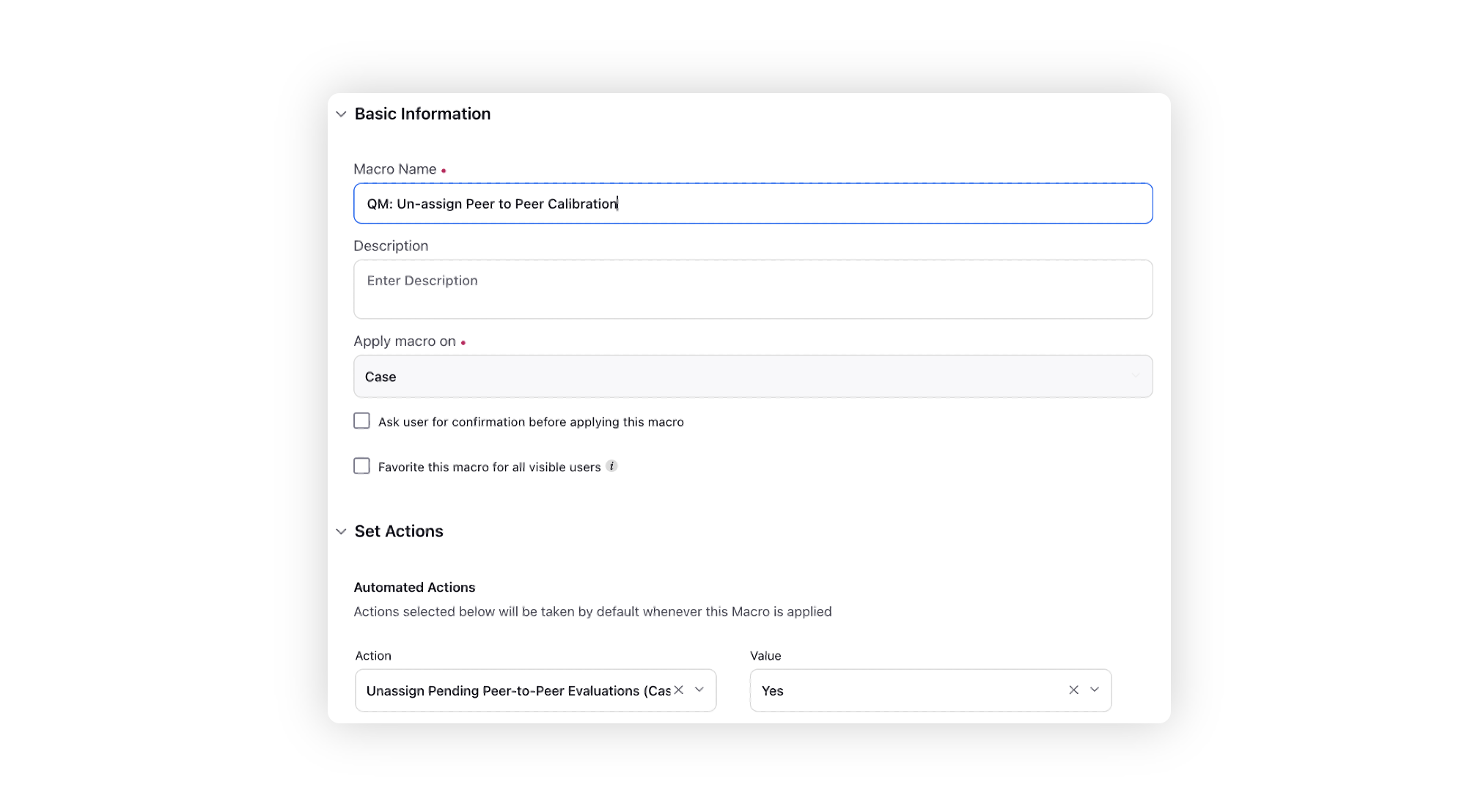

Unassigning Pending Peer-to-Peer Evaluations with Macros

Streamline your workflow by using the "Unassign Pending Peer-to-Peer Evaluations" macro, especially when some of the assigned auditors are on leave or unavailable. This ensures that P2P evaluations are completed efficiently, allowing you to check final scores with ease.

For instance, if 20 auditors have been assigned a case for peer-to-peer (P2P) evaluation, and only 10 have completed it while the remaining 10 are on leave, you can use the macro with the "Unassign Pending Peer-to-Peer Evaluations" action to unassign those remaining 10.
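The effect of the macro in this scenario can be modeled with a short sketch. It does not call any product API: the status strings, the auditor identifiers, and the function `unassign_pending` are hypothetical and only mirror the 20-auditor example.

```python
from collections import Counter

def unassign_pending(assignments):
    """Return a copy in which every pending evaluation is marked unassigned."""
    return {
        auditor: ("unassigned" if status == "pending" else status)
        for auditor, status in assignments.items()
    }

# 20 hypothetical auditors: the first 10 have completed, the other 10 are pending.
assignments = {
    f"auditor_{i:02d}": "completed" if i <= 10 else "pending"
    for i in range(1, 21)
}

after_macro = unassign_pending(assignments)
status_counts = Counter(after_macro.values())
```

After the macro runs, no evaluations remain pending, so the calibration contains only completed evaluations and final scores can be reviewed.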