Multiple Agent Scoring


In conversations where multiple agents participate, evaluating individual contributions accurately can be challenging. To address this, Sprinklr has introduced enhancements to its AI Scoring mechanism, allowing quality managers to evaluate each agent separately with greater flexibility and precision, ensuring an accurate assessment of individual contributions in multi-agent interactions.

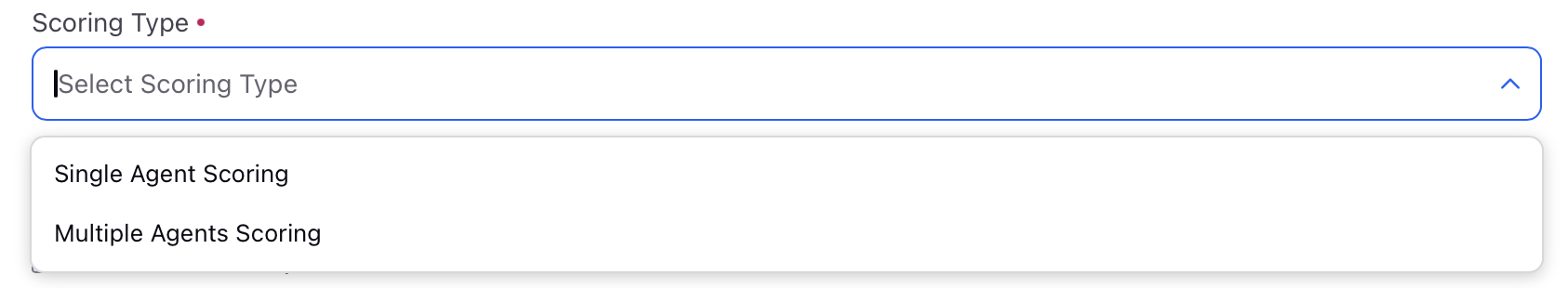

A new Scoring Type field has been added at the checklist level within the overview section, enabling users to define how scoring should be applied. This feature offers two options:

Single Agent Scoring, where scores are attributed to a specific agent (for example, the first engaged agent, most engaged agent, last engaged agent, or an agent chosen by User Level Case custom criteria).

Multi-Agent Scoring, which allows for the evaluation of each agent individually. For example, an organization can now assess whether all agents in a conversation demonstrated courtesy or limit the evaluation of greetings to only the first agent. These updates ensure that performance evaluations are more tailored and relevant, providing actionable insights into team dynamics and individual contributions in multi-agent interactions.

Note: Access to this feature is controlled by the AI_QM_MULTI_AGENT_SCORING_ENABLED dynamic property (DP). To enable this feature in your environment, reach out to your Success Manager. Alternatively, you can submit a request at tickets@sprinklr.com.

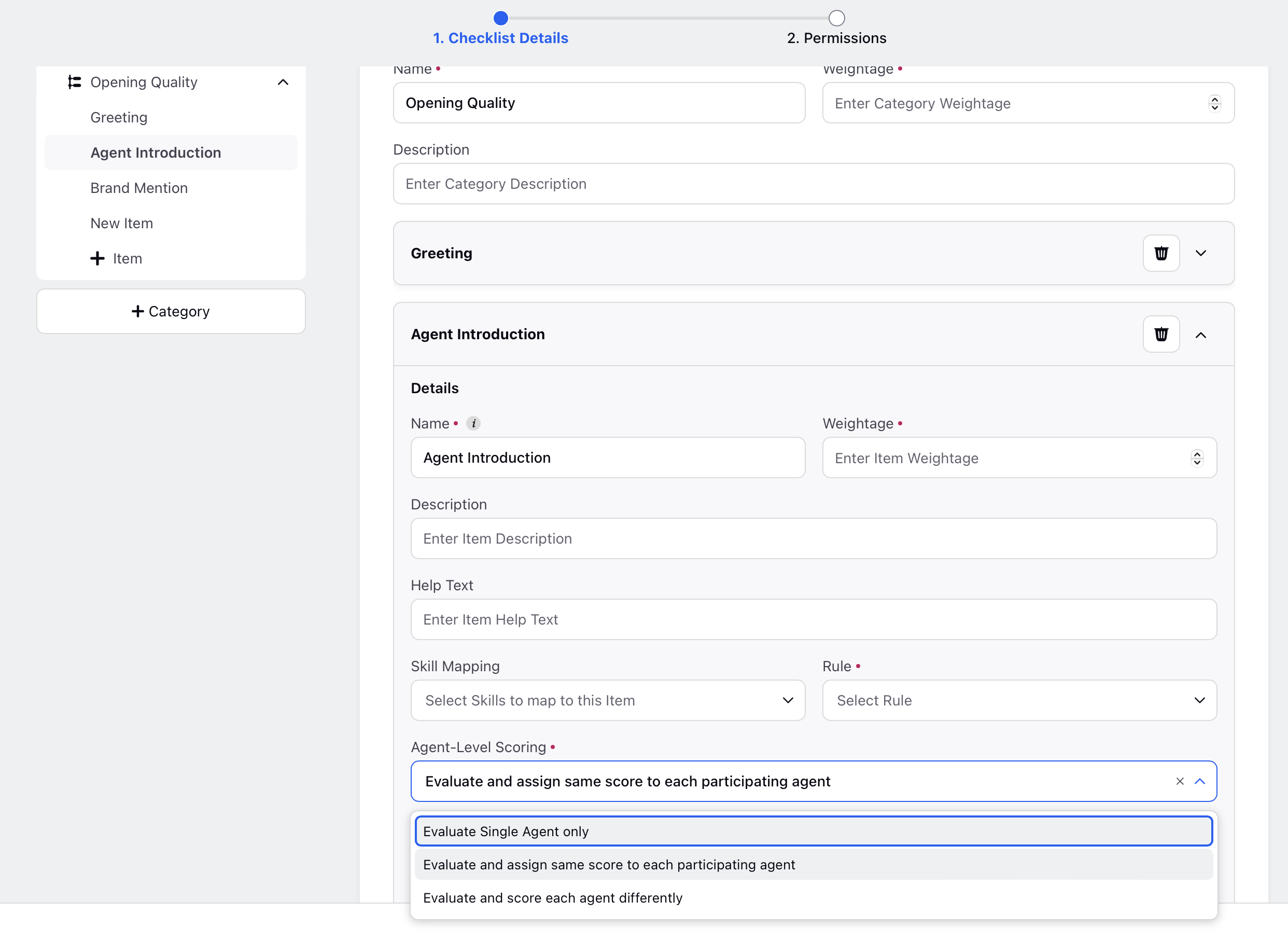

Adding Agent-Level Scoring in Cases with Multiple Agents

Click the New Tab icon. Under Platform Modules, click All Settings within Listen.

Search and select Audit Checklists.

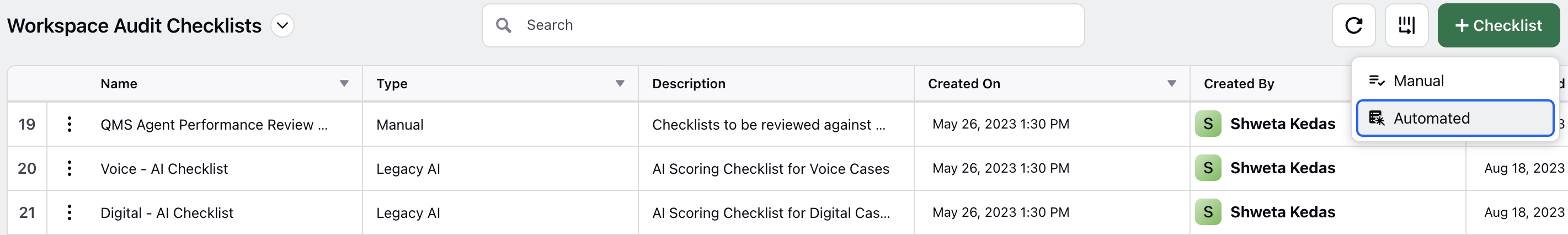

In the top right corner of the Audit Checklists window, click + Checklist and then select Automated.

You can also Edit an existing checklist.

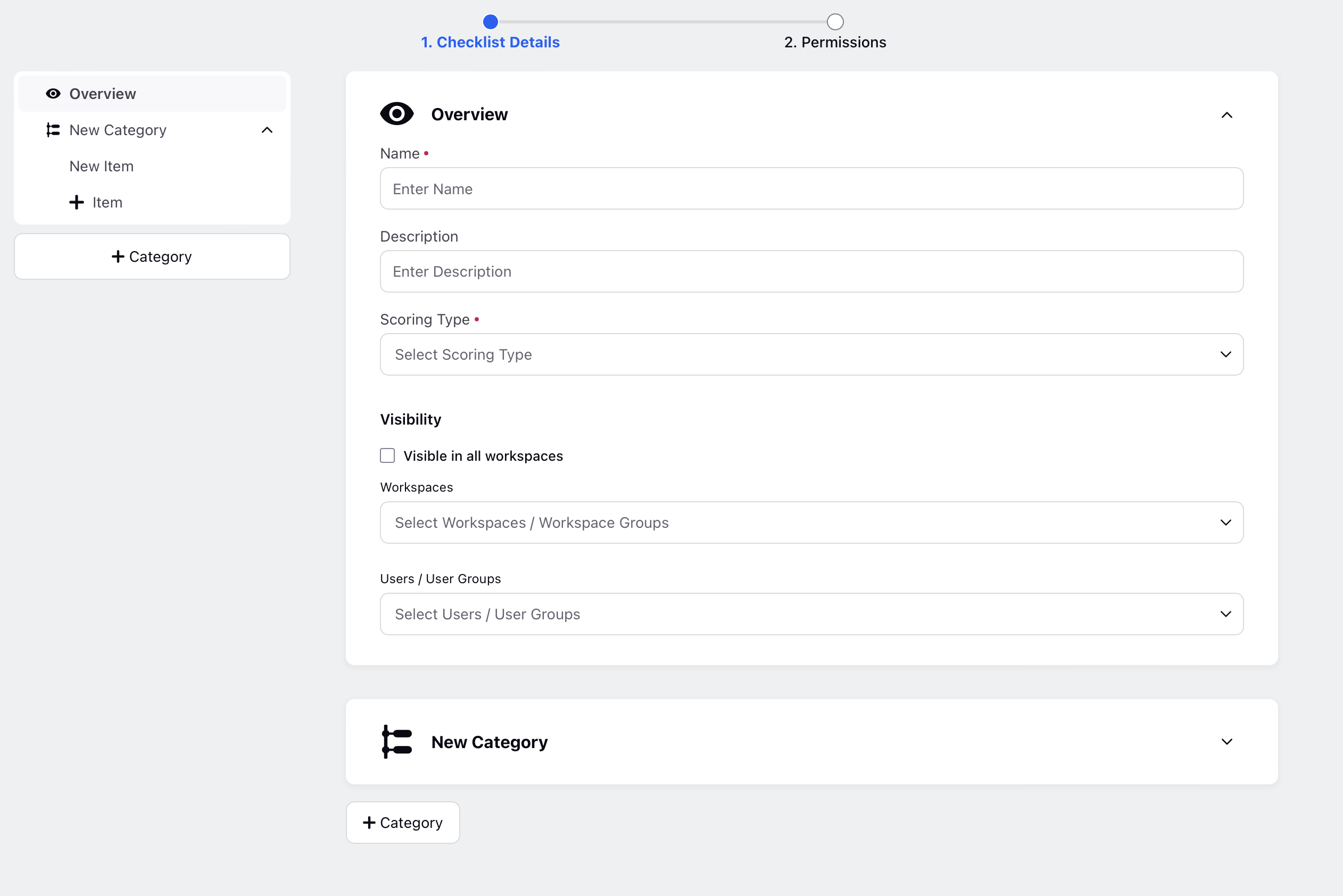

The checklist details screen will open. You can enter the Name and Description.

Scoring Type is a mandatory field with two possible values, Single Agent Scoring and Multi-Agent Scoring, both described below.

Note: For other fields, refer to Automated Checklist Configuration - Field Description.

Single Agent Scoring

If you choose this option, scoring is applied to only one agent within a multi-agent conversation.

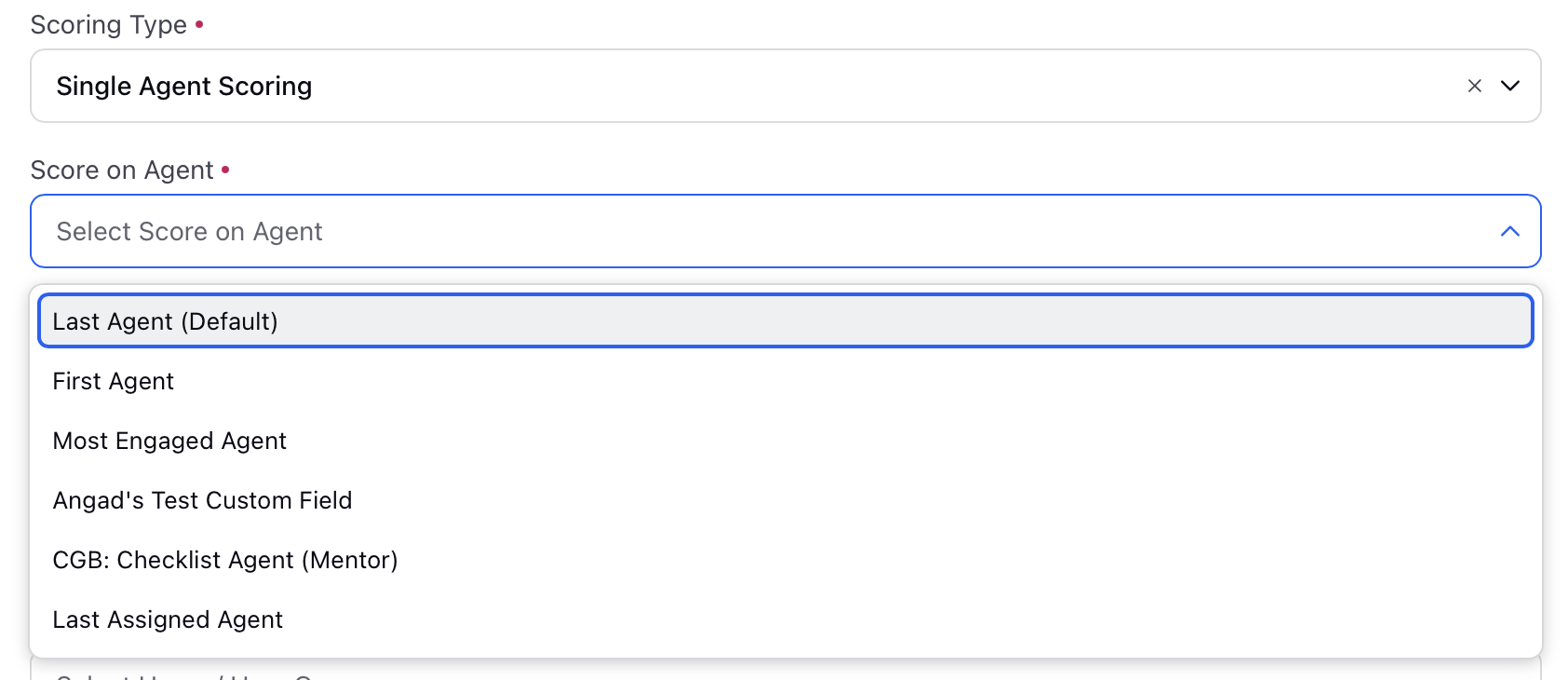

Additional Field: Score on Agent

If you select Single Agent Scoring, a new field, Score on Agent, becomes visible at the checklist level. It is a mandatory field.

This field allows you to specify which agent should be scored, with the following possible options:

First Engaged: Assign the score to the first agent who engaged within the interaction.

Last Engaged: Assign the score to the last agent who engaged within the interaction.

Most Engaged: Assign the score to the agent who sent the most messages within the interaction.

User Custom Field: Assign the score to the user specified in the selected custom field.

For example, if you select Most Engaged under Score on Agent, the score is automatically attributed to the agent with the highest overall engagement in the conversation. No changes are required at the item level: all items in the checklist are evaluated against the entire conversation, and the resulting score is assigned to the most engaged agent. This ensures that the agent who played the most significant role in the interaction, regardless of when they participated, is scored for their contributions.
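The Score on Agent options above can be sketched in a few lines of Python. This is only an illustration of the selection rules as described, not Sprinklr's implementation: the function name, the option constants, and the message tuple shape are all hypothetical, and the source does not say how ties for Most Engaged are resolved.

```python
from collections import Counter

def pick_scored_agent(messages, score_on_agent, custom_field_user=None):
    """Return the agent who receives the checklist score.

    messages: chronological list of (sender_id, role) tuples (hypothetical shape).
    score_on_agent: one of the hypothetical constants checked below.
    custom_field_user: user taken from a custom field, if that option is used.
    """
    # Only agent messages matter when deciding who engaged first, last, or most.
    agent_ids = [sender for sender, role in messages if role == "agent"]
    if score_on_agent == "USER_CUSTOM_FIELD":
        return custom_field_user
    if not agent_ids:
        return None
    if score_on_agent == "FIRST_ENGAGED":
        return agent_ids[0]
    if score_on_agent == "LAST_ENGAGED":
        return agent_ids[-1]
    if score_on_agent == "MOST_ENGAGED":
        # Agent with the highest message count (tie handling is an assumption).
        return Counter(agent_ids).most_common(1)[0][0]
    raise ValueError(f"unknown option: {score_on_agent}")

conversation = [
    ("agent_1", "agent"),
    ("customer", "customer"),
    ("agent_2", "agent"),
    ("agent_2", "agent"),
    ("agent_3", "agent"),
]
print(pick_scored_agent(conversation, "MOST_ENGAGED"))
```

In every case the checklist items are still evaluated against the whole conversation; only the recipient of the score changes.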

Multi-Agent Scoring

If you select Multi-Agent Scoring, the scoring process becomes more detailed and agent-specific, with scoring being introduced at the item level. Based on your selection, the item score will be assigned differently. Here are the available options for scoring:

Evaluate and Assign Same Score to Each Participating Agent: In this option, the item score will not be evaluated individually for each agent. Instead, the same score will be assigned to all agents who participated in the interaction.

Evaluate Single Agent Only:

When this option is selected, a mandatory field "Score on Agent" will appear at the checklist item level. The item score will be evaluated specifically for the selected agent only. The available choices for this field are:

First Engaged: Assign the score to the first agent who engaged within the interaction.

Last Engaged: Assign the score to the last agent who engaged within the interaction.

Most Engaged: Assign the score to the agent who sent the most messages within the interaction.

User Custom Field: Assign the score based on a custom field defined by the user.

Evaluate and Score Each Agent Differently:

In this option, the item score will be evaluated separately for each participating agent, allowing individual scoring based on each agent’s contributions during the conversation.

Note:

In the Single Agent Scoring scenario and in Multi-Agent Scoring with Evaluate and Assign Same Score to Each Participating Agent, scoring is always based on the entire interaction. The Consider messages corresponding to setting is disregarded in these cases.

In contrast, for Multi-Agent Scoring with Evaluate Single Agent Only or Evaluate and Score Each Agent Differently, scoring defaults to using only the messages from the evaluated agent. However, if the Consider messages corresponding to setting is explicitly set to Entire Interaction, all messages in the interaction are included in the evaluation.
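The two rules in this note reduce to one decision: evaluate the whole interaction, or only the evaluated agent's messages. The sketch below shows that decision under stated assumptions. The function name, the string constants, and the message dictionaries are hypothetical and do not correspond to any documented Sprinklr API.

```python
def messages_in_scope(messages, scoring_type, item_mode, consider, evaluated_agent):
    """Return the messages a checklist item is evaluated against.

    scoring_type: "SINGLE_AGENT" or "MULTI_AGENT" (hypothetical constants).
    item_mode: "SAME_SCORE_FOR_ALL", "SINGLE_AGENT_ONLY" or
               "EACH_AGENT_DIFFERENTLY"; ignored for single agent scoring.
    consider: value of "Consider messages corresponding to".
    """
    uses_entire_interaction = (
        scoring_type == "SINGLE_AGENT"           # setting is disregarded
        or item_mode == "SAME_SCORE_FOR_ALL"     # setting is disregarded
        or consider == "ENTIRE_INTERACTION"      # explicit override
    )
    if uses_entire_interaction:
        return list(messages)
    # Default for the agent-specific modes: only the evaluated agent's messages.
    return [m for m in messages if m["sender"] == evaluated_agent]
```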

Agent Attribution Logic in Scoring Actions

Agent attribution across scoring actions is determined based on the selected evaluation mode and the "Consider messages corresponding to" setting. Here's how messages are attributed in different scoring scenarios:

ML Output Attribution

AI Scoring has become more context-aware by leveraging full conversation analysis and attributing scores to agents based on proof messages identified by the ML model. When scoring is triggered, the entire interaction is processed by the model, which detects whether specific actions—such as greeting the customer—were performed by each agent involved.

The model then highlights relevant proof messages for each agent to justify the scoring decision. For instance, in a conversation involving three agents, if only Agent 1 and Agent 3 greeted the customer, they will receive full scores based on their proof messages. Agent 2, who did not perform the greeting, will receive a score of zero.

This proof-driven approach enables more precise and equitable scoring by directly tying agent performance to verifiable actions within the conversation.
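The three-agent greeting example can be expressed as a small sketch. It assumes the ML model's output is already available as a list of proof messages tagged with their sender. The function name and data shapes are hypothetical, and the model itself is not represented here.

```python
def scores_from_proofs(agents, proof_messages, full_score):
    """Give the full item score to agents with at least one proof message.

    agents: ids of all agents who took part in the conversation.
    proof_messages: messages the model flagged as evidence of the action,
                    each a dict with a "sender" key (hypothetical shape).
    """
    agents_with_proof = {m["sender"] for m in proof_messages}
    return {
        agent: (full_score if agent in agents_with_proof else 0)
        for agent in agents
    }

proofs = [
    {"sender": "agent_1", "text": "Hello, thanks for contacting us!"},
    {"sender": "agent_3", "text": "Hi, I'll take it from here."},
]
print(scores_from_proofs(["agent_1", "agent_2", "agent_3"], proofs, full_score=10))
# {'agent_1': 10, 'agent_2': 0, 'agent_3': 10}
```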

Fixed Score Adjustments (SET / UNSET / INC / DECR)

These operations follow the current evaluation flow and do not require special handling.

For example, in "Evaluate and Score Each Agent Differently", the score is applied to the agent currently being evaluated in the execution path.

Generate Score Based on Matched Messages

When Matched Message is from the Agent:

Evaluate Single Agent Only: Only the matched messages from the selected agent (as per the checklist item) are used unless "Consider messages corresponding to" is set to "Entire Interaction".

Evaluate and Score Each Agent Differently: For each agent, only their own matched messages are used unless "Entire Interaction" is explicitly selected.

When Matched Message is from the Customer:

Customer messages are attributed to an agent only if they are mapped to the agent currently being evaluated.

If "Entire Interaction" is selected, all matched customer messages will be considered regardless of mapping.
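As a rough illustration of these rules, the sketch below filters matched messages for the agent being evaluated. It assumes each customer message already carries the id of the agent it is mapped to. How that mapping is produced is not described here, and the function and field names are hypothetical.

```python
def attributable_matches(matched_messages, evaluated_agent, entire_interaction=False):
    """Return the matched messages that count toward the evaluated agent.

    matched_messages: dicts with "role" ("agent" or "customer"), "sender",
                      and, for customer messages, "mapped_agent".
    """
    if entire_interaction:
        # "Entire Interaction" bypasses per-agent attribution.
        return list(matched_messages)
    kept = []
    for message in matched_messages:
        if message["role"] == "agent":
            owner = message["sender"]
        else:
            # Customer messages belong to whichever agent they are mapped to.
            owner = message.get("mapped_agent")
        if owner == evaluated_agent:
            kept.append(message)
    return kept
```

For Evaluate and Score Each Agent Differently, the same filter would be applied once per participating agent.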

Generate Score Based on ML Message Insights

Follows the same attribution logic as Generate Score Based on Matched Messages.

Proportionate Scoring

For both numerator and denominator, only the messages associated with the agent being evaluated are considered.

This behavior can be overridden if "Consider messages corresponding to" is set to "Entire Interaction".
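A minimal sketch of this behavior follows. It assumes the proportion is the number of matched messages divided by the total number of messages in scope, which the text above does not spell out. The function name and message fields are hypothetical.

```python
def proportionate_score(messages, evaluated_agent, max_score, entire_interaction=False):
    """Scale max_score by the share of in-scope messages that matched.

    messages: dicts with "agent" (the associated agent id) and
              "matched" (bool), a hypothetical shape.
    """
    if entire_interaction:
        pool = list(messages)
    else:
        # Numerator and denominator both use only this agent's messages.
        pool = [m for m in messages if m["agent"] == evaluated_agent]
    if not pool:
        return 0.0
    matched = sum(1 for m in pool if m["matched"])
    return max_score * matched / len(pool)
```

With four messages associated with an agent, two of them matched, and a maximum score of 10, this yields 5.0. Including a fifth, unmatched message from another agent via Entire Interaction lowers it to 4.0.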

Linear Scoring Based on Interaction Fields

Scoring is purely based on interaction-level properties and does not depend on any agent-specific message attribution logic.

Scoring Based on Interruption

This is applicable for Evaluate Single Agent Only and Evaluate and Score Each Agent Differently.

Agent Interruptions: Considered only if the interrupting agent matches the one currently being scored.

Customer Interruptions: Considered only if the customer’s mapped agent matches the agent currently being scored.
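The interruption rules amount to assigning each interruption an owning agent and counting per owner. The sketch below illustrates this with hypothetical event records; the field names are assumptions, not a documented schema.

```python
from collections import Counter

def interruptions_per_agent(events):
    """Count interruptions attributed to each agent.

    events: dicts with "by" ("agent" or "customer"); agent interruptions carry
            "agent_id", customer interruptions carry "mapped_agent".
    """
    counts = Counter()
    for event in events:
        if event["by"] == "agent":
            owner = event.get("agent_id")
        else:
            owner = event.get("mapped_agent")
        if owner is not None:
            counts[owner] += 1
    return dict(counts)
```

When scoring a given agent, only that agent's entry in the result would be used.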

Scoring Based on Dead Air Instances

This is applicable for Evaluate Single Agent Only and Evaluate and Score Each Agent Differently.

In both cases, the second party in the conversation is evaluated:

If the agent is the second party: Dead air is counted only if that agent is the one being scored.

If the customer is the second party: Dead air is counted only if the customer's mapped agent matches the agent being evaluated.

Scoring Based on Hold Instances

Hold time is attributed to the agent who placed the customer on hold.

Grammar-Based Scoring Models

This includes scoring types such as:

Mistakes-to-Words Ratio

Mistakes by Error Category

Number of Mistakes

Mistakes-to-Words Ratio by Error Category

Attribution Logic

Single Agent Scoring, or Multi-Agent Scoring with Evaluate and Assign Same Score to Each Participating Agent: All messages are considered for grammar scoring.

Evaluate Single Agent Only or Evaluate and Score Each Agent Differently: Only messages from the agent being evaluated are considered, unless "Entire Interaction" is selected, in which case all messages are included.
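To make the attribution concrete, here is a hedged sketch of a mistakes-to-words ratio computed per agent. It assumes mistake counts per message come from an upstream grammar checker and that words are split on whitespace. Neither detail is specified above, and all names are hypothetical.

```python
def mistakes_to_words_ratio(messages, evaluated_agent=None):
    """Return total mistakes divided by total words for the messages in scope.

    messages: dicts with "sender", "text", and "mistakes" (int, supplied by
              an assumed grammar checker).
    evaluated_agent: restrict to this sender; None means the entire interaction.
    """
    pool = [
        m for m in messages
        if evaluated_agent is None or m["sender"] == evaluated_agent
    ]
    total_words = sum(len(m["text"].split()) for m in pool)
    total_mistakes = sum(m["mistakes"] for m in pool)
    return total_mistakes / total_words if total_words else 0.0
```

Passing `evaluated_agent=None` corresponds to the cases where all messages are considered.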

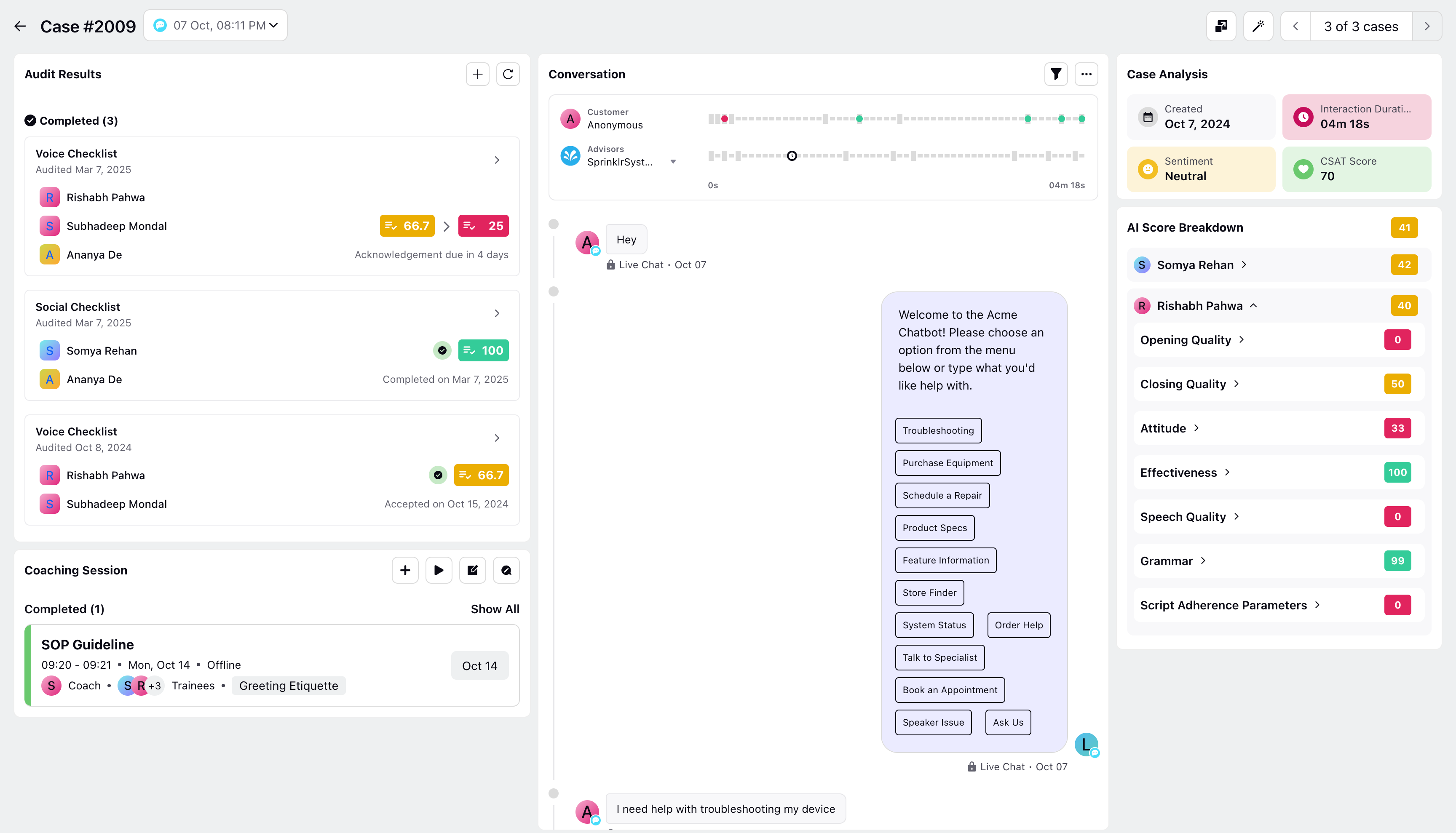

Interaction-Based Visibility in AI Scoring Widget

Visibility logic in the AI Scoring Widget ensures that users only access evaluation data relevant to their permissions.

Note: Access to this feature is controlled by the dynamic properties (DP) AI_QM_MULTI_AGENT_SCORING_ENABLED and VOICE_RECORDING_SEGMENTS_ENABLED. To enable this feature in your environment, reach out to your Success Manager. Alternatively, you can submit a request at tickets@sprinklr.com.

Access is granted if:

The agent is the logged-in user,

The agent is part of the user's Interaction Visibility (individually or via group), or

The user has the Segmentation View All permission (this overrides Interaction Visibility).

As a result, logged-in users see only the names and scores of agents who fall within their visibility.
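The access conditions listed above can be sketched as a simple check. This is an illustrative model only: the user dictionary, its keys, and the assumption that group-based Interaction Visibility has already been expanded into a set of agent ids are all hypothetical.

```python
def can_view_agent_score(user, agent_id):
    """Return True if the user may see this agent's name and score.

    user: dict with "id", optional "segmentation_view_all" (bool), and optional
          "visible_agents" (set of agent ids reachable individually or via
          group, assumed to be resolved beforehand).
    """
    if user["id"] == agent_id:
        return True   # agents can always see their own evaluation
    if user.get("segmentation_view_all", False):
        return True   # permission overrides Interaction Visibility
    return agent_id in user.get("visible_agents", set())

def visible_scores(user, scores_by_agent):
    """Filter a {agent_id: score} mapping down to what the user may see."""
    return {
        agent: score
        for agent, score in scores_by_agent.items()
        if can_view_agent_score(user, agent)
    }
```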