Measuring Performance via Golden Test Set


Before you start, see "How to Setup Golden Test Set?".

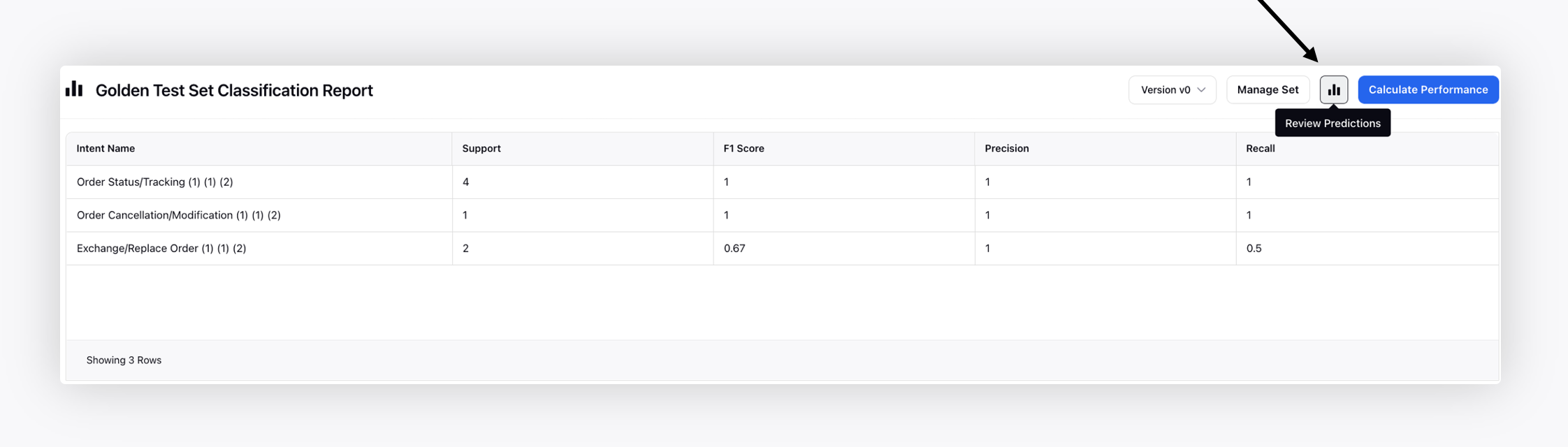

Steps to view performance

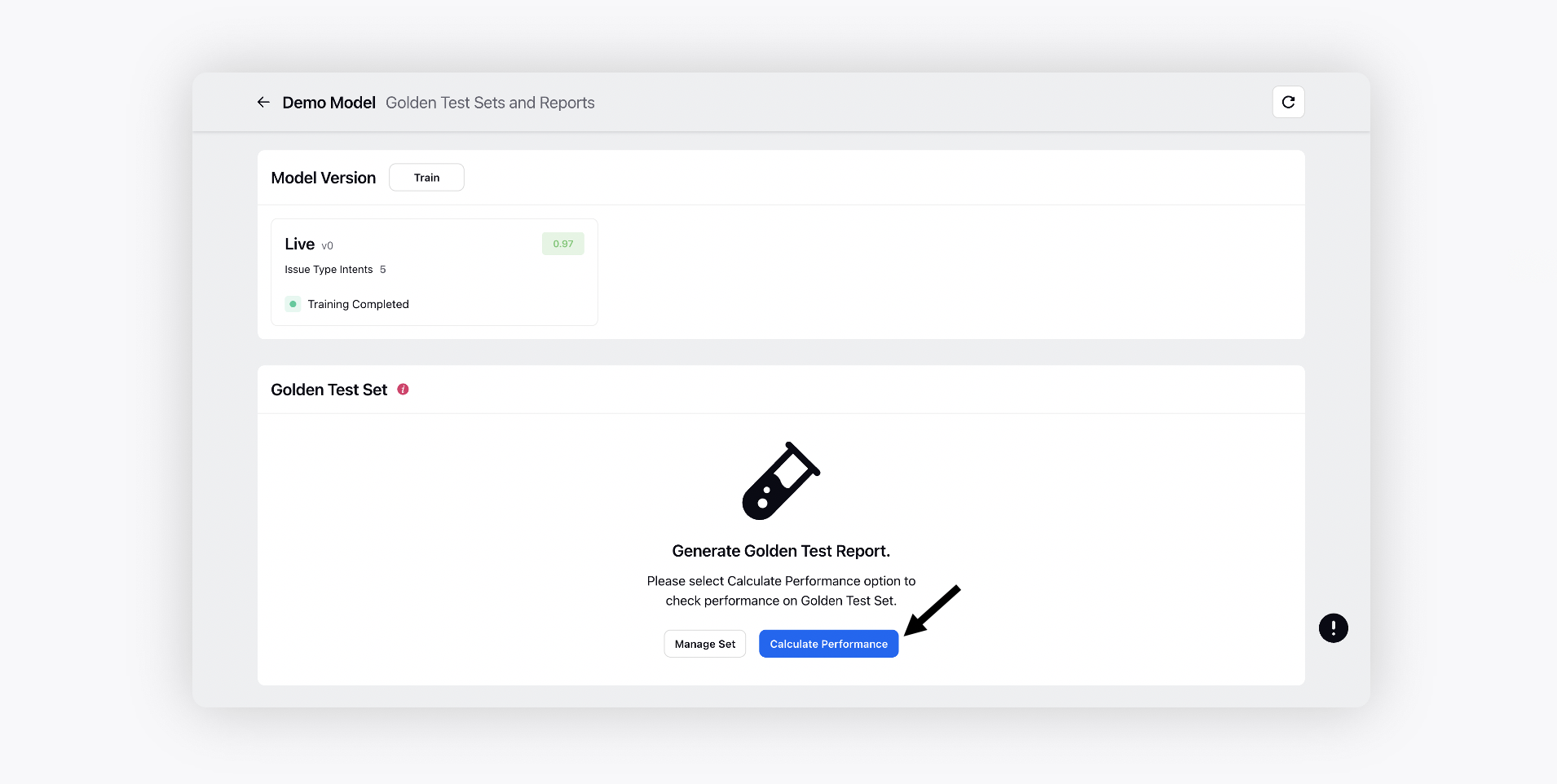

Make sure that the Performance Calculation for the golden test set has been processed.

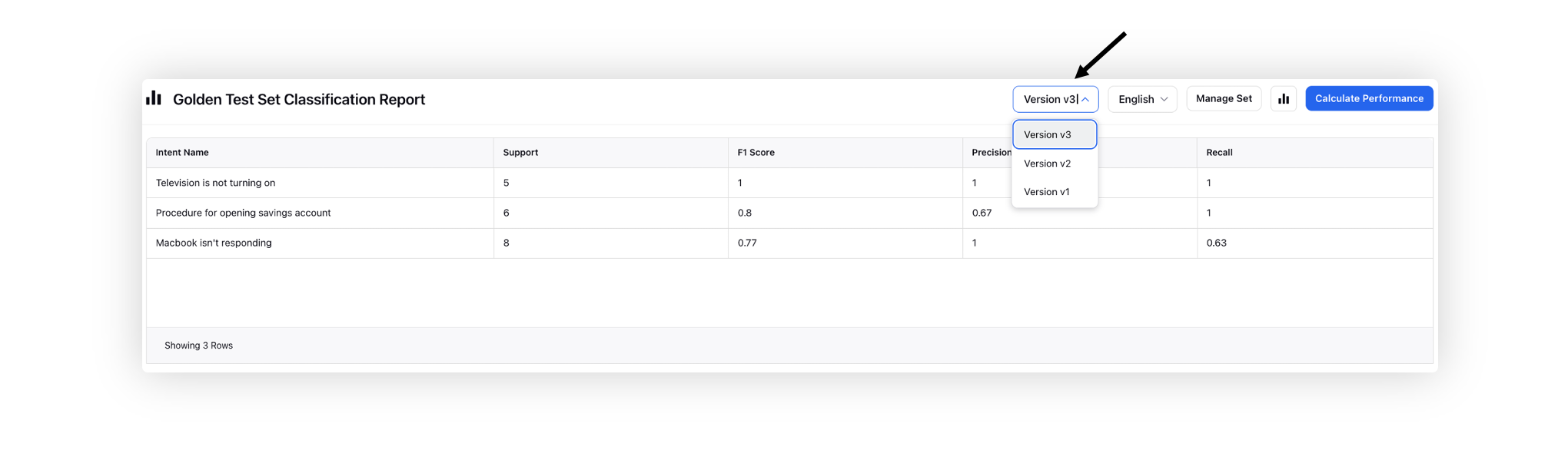

Switch between versions to view and compare golden test set reports. Each version's report shows the following columns:

Support - Number of expressions that belong to the intent according to human validation.

F1 Score - Harmonic mean of precision and recall for each intent, combining both into a single overall accuracy measure.

Precision - Fraction of AI model predictions for a particular intent that actually belong to that intent (according to human validation).

Formula - (# of correct AI model predictions for the intent) / (total # of expressions the AI model predicted as that intent)

Recall - Fraction of expressions belonging to an intent (according to human validation) that the AI model predicts correctly.

Formula - (# of correct AI model predictions for the intent) / (total # of expressions belonging to the intent according to human validation)
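The column definitions above can be sketched in Python to show how the numbers relate. This is a minimal illustration, not the product's own calculation: it assumes you have, for every golden test set expression, the intent assigned by human validation and the intent predicted by the AI model as two parallel lists. The function name `intent_metrics` is hypothetical, and reporting 0.0 when a denominator is zero is an assumed convention.

```python
from collections import Counter


def intent_metrics(human_labels, predictions):
    """Hypothetical helper: per-intent support, precision, recall and F1.

    human_labels[i] is the intent from human validation for expression i;
    predictions[i] is the intent the AI model predicted for the same expression.
    """
    if len(human_labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")

    support = Counter(human_labels)    # expressions per intent (human validation)
    predicted = Counter(predictions)   # expressions per intent (AI model)
    correct = Counter(h for h, p in zip(human_labels, predictions) if h == p)

    report = {}
    for intent in sorted(set(support) | set(predicted)):
        # Precision: correct predictions / all predictions for this intent.
        precision = correct[intent] / predicted[intent] if predicted[intent] else 0.0
        # Recall: correct predictions / all expressions that truly belong here.
        recall = correct[intent] / support[intent] if support[intent] else 0.0
        # F1: harmonic mean of precision and recall (assumed 0.0 if both are 0).
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[intent] = {"support": support[intent], "precision": precision,
                          "recall": recall, "f1": f1}
    return report


human = ["billing", "billing", "billing", "refund", "refund"]
model = ["billing", "billing", "refund", "refund", "billing"]
for intent, m in intent_metrics(human, model).items():
    print(f"{intent}: support={m['support']} precision={m['precision']:.2f} "
          f"recall={m['recall']:.2f} f1={m['f1']:.2f}")
```

With this sample data the model predicts "billing" three times and gets two right, so both precision and recall for "billing" are 2/3; "refund" gets one of its two predictions right, giving 0.50 for both.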

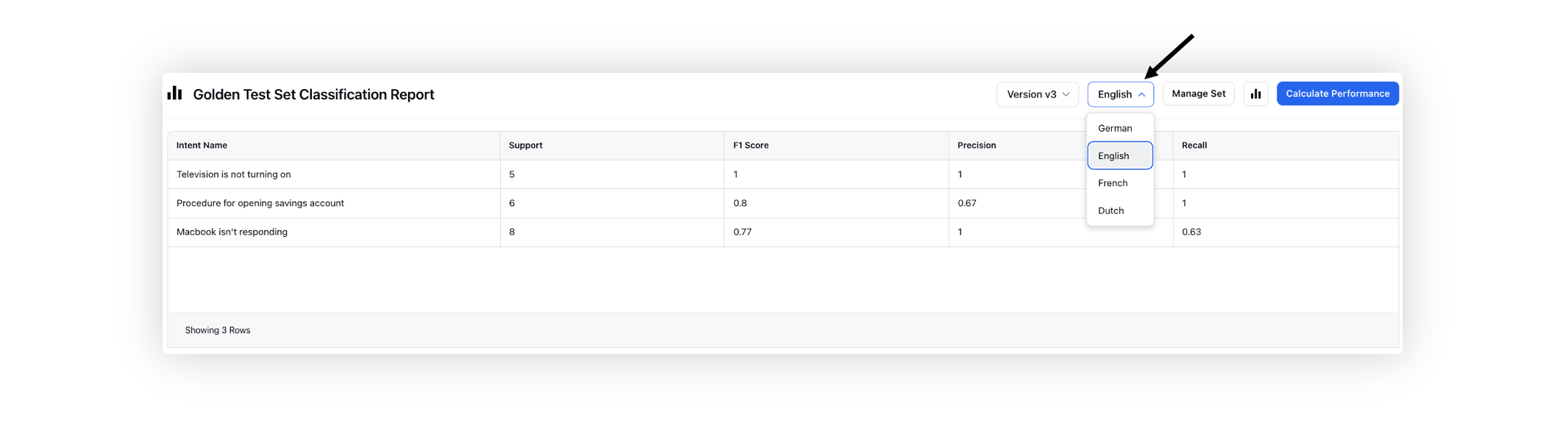

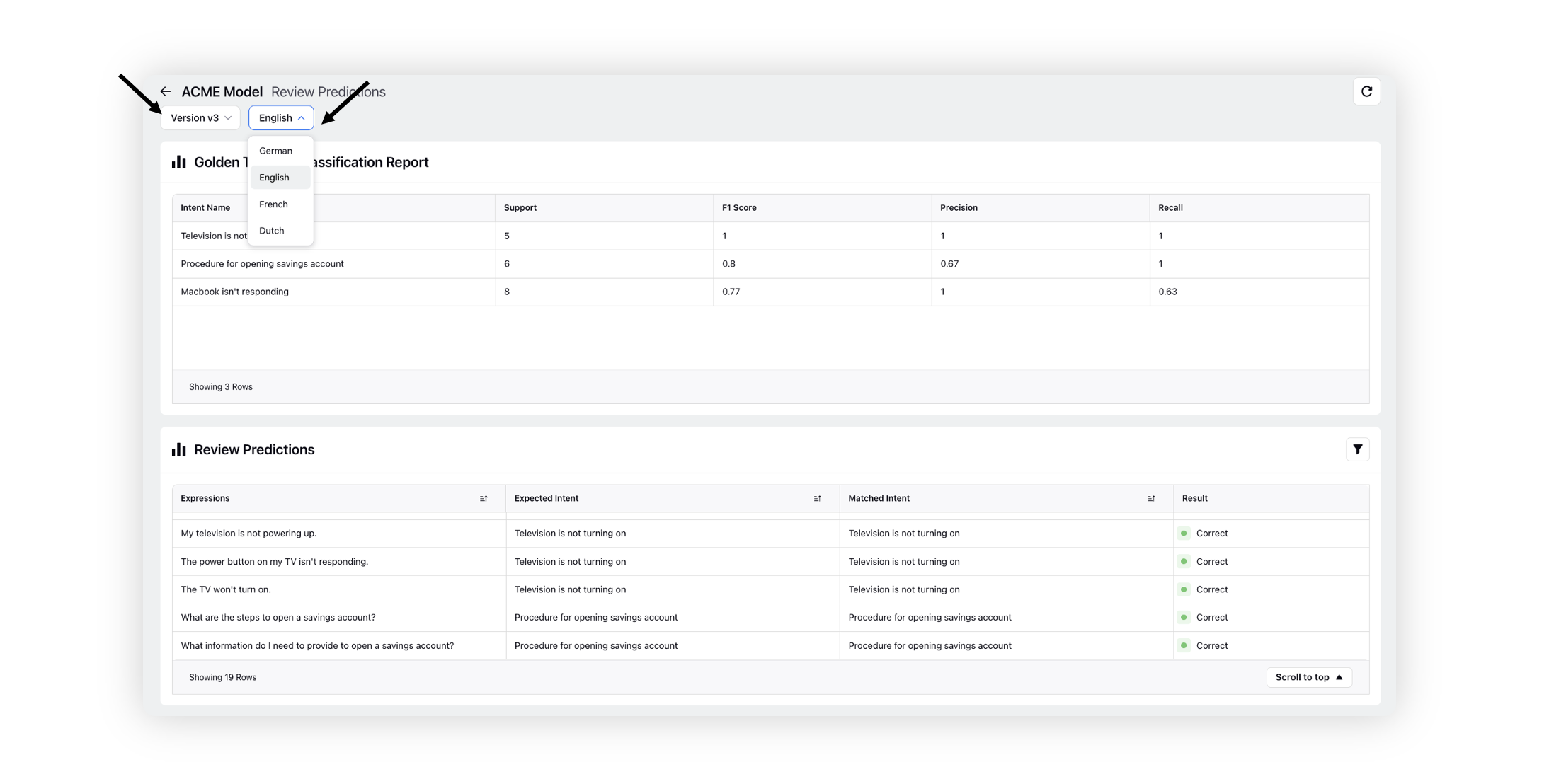

Switch between languages to view the golden test set report for each language.

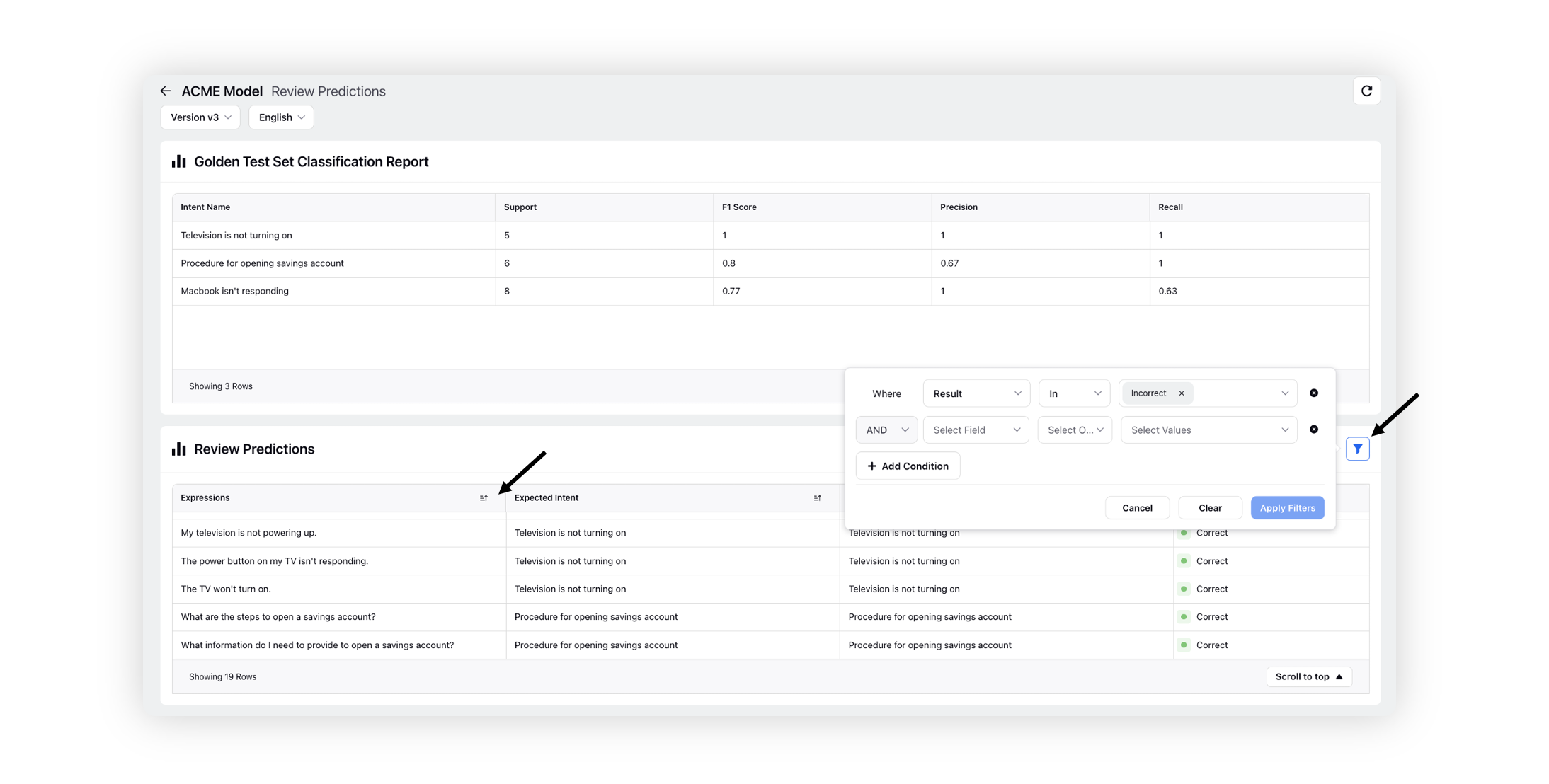

Click Review Predictions to review the intent model's predictions on individual expressions. Based on this review, you can decide which patterns of expressions should be added to or removed from intents to improve model performance.
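When reviewing predictions, it can help to group the mismatches by which intent the model confused with which. The sketch below is an offline illustration only, not the Review Predictions feature itself: it assumes you already have the expressions, their human-validated intents and the model's predicted intents as parallel lists (how you obtain them is outside the scope of this article). The function name `misclassified_by_pair` is hypothetical.

```python
from collections import defaultdict


def misclassified_by_pair(expressions, human_labels, predictions):
    """Hypothetical helper: group expressions where the model disagrees with
    human validation, keyed by (human-validated intent, predicted intent)."""
    groups = defaultdict(list)
    for text, human, predicted in zip(expressions, human_labels, predictions):
        if human != predicted:
            groups[(human, predicted)].append(text)
    # Largest confusion groups first: these point to the most common patterns
    # that may need to be added to or removed from an intent.
    return sorted(groups.items(), key=lambda item: len(item[1]), reverse=True)


expressions = ["I want my money back", "charge me again?",
               "refund please", "why was I billed twice"]
human = ["refund", "billing", "refund", "billing"]
model = ["billing", "refund", "refund", "refund"]
for (human_intent, predicted_intent), texts in misclassified_by_pair(expressions, human, model):
    print(f"{human_intent} -> {predicted_intent}: {texts}")
```

Here two "billing" expressions were predicted as "refund", so that pair is listed first; it suggests the "billing" intent may need more training expressions about duplicate or repeated charges.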

You can switch to different versions and languages from the top left corner.

You can also sort columns alphabetically and use filters to narrow down the information displayed.