What is a Golden Test Set?


A golden test set, also known as a gold standard or benchmark test set, is a predetermined collection of test expressions (or other data points) carefully curated by human experts. The expressions cover a diverse range of inputs and scenarios, so the set can be used to evaluate the performance and effectiveness of different versions of an AI model.
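To make the idea concrete, the sketch below represents a golden test set for an intent model as fixed pairs of test expression and expected intent. It is a minimal illustration that assumes this format. The intent names and the `coverage_report` helper are hypothetical and are not part of any product API. The helper shows one quick way to check that the set covers each intent and contains no duplicate expressions.

```python
from collections import Counter

# Hypothetical golden test set: each entry pairs a curated test expression
# with the intent a human expert expects the model to return.
# A tuple (not a list) keeps the set fixed once it has been curated.
GOLDEN_SET = (
    ("I want to check my balance", "check_balance"),
    ("how much money do I have", "check_balance"),
    ("send $20 to Alex", "transfer_money"),
    ("move money to savings", "transfer_money"),
    ("talk to a human", "agent_handoff"),
)


def coverage_report(golden_set):
    """Count expressions per expected intent and list duplicate expressions."""
    per_intent = Counter(intent for _, intent in golden_set)
    # Compare case-insensitively so trivially repeated phrasings are caught.
    expression_counts = Counter(expression.lower() for expression, _ in golden_set)
    duplicates = sorted(e for e, n in expression_counts.items() if n > 1)
    return per_intent, duplicates


per_intent, duplicates = coverage_report(GOLDEN_SET)
print(dict(per_intent))
print("duplicate expressions:", duplicates)
```

An intent with very few expressions is a hint that the set may not yet cover enough scenarios for that intent.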

The purpose of a golden test set is to provide a standardized, objective measure of performance across iterations or versions of an AI model. Because every version is tested against the same set of test expressions, their results can be compared fairly and meaningfully.

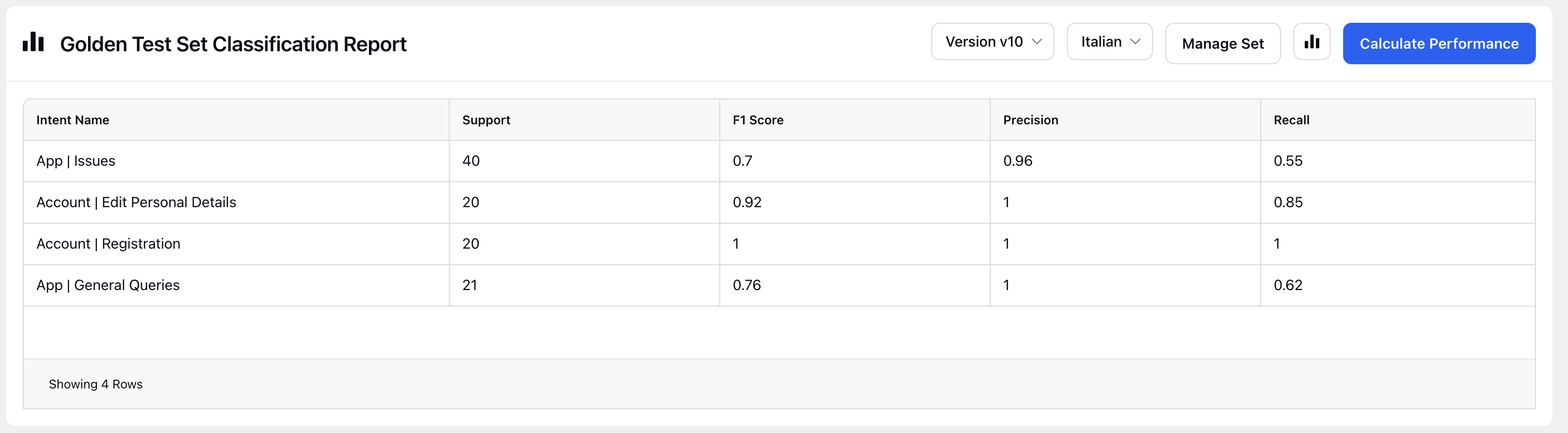

With a golden test set, users can run different versions of a model against the same test expressions and compare the results. By comparing metrics such as accuracy, precision, recall, and F1 score, or other relevant evaluation measures, developers can make informed decisions about which version performs better and should be considered for deployment.
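The comparison described above can be sketched in Python. This is a minimal illustration that assumes a golden set of (test expression, expected intent) pairs and treats each model version as a plain function returning an intent name. `model_v1`, `model_v2`, and `evaluate` are hypothetical stand-ins, not a product API. The sketch scores both versions on the identical test set using accuracy and macro-averaged F1, where the average is taken over the intents that appear in the golden set.

```python
from collections import defaultdict

# Hypothetical golden set: (test expression, expected intent).
GOLDEN_SET = [
    ("I want to check my balance", "check_balance"),
    ("how much money do I have", "check_balance"),
    ("send $20 to Alex", "transfer_money"),
    ("move money to savings", "transfer_money"),
    ("talk to a human", "agent_handoff"),
]


def evaluate(predict, golden_set):
    """Run one model version over the golden set; return accuracy and macro-F1."""
    tp, fp, fn = defaultdict(int), defaultdict(int), defaultdict(int)
    correct = 0
    for expression, expected in golden_set:
        predicted = predict(expression)
        if predicted == expected:
            correct += 1
            tp[expected] += 1
        else:
            fp[predicted] += 1  # wrong label was predicted
            fn[expected] += 1   # right label was missed
    f1_scores = []
    for label in {expected for _, expected in golden_set}:
        p_den, r_den = tp[label] + fp[label], tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        pr_sum = precision + recall
        f1_scores.append(2 * precision * recall / pr_sum if pr_sum else 0.0)
    return {
        "accuracy": correct / len(golden_set),
        "macro_f1": sum(f1_scores) / len(f1_scores),
    }


# Two hypothetical keyword-based "model versions" used only for illustration.
def model_v1(expression):
    text = expression.lower()
    if "balance" in text:
        return "check_balance"
    if "send" in text or "move" in text:
        return "transfer_money"
    return "fallback"


def model_v2(expression):
    text = expression.lower()
    if "balance" in text or "how much" in text:
        return "check_balance"
    if "send" in text or "move" in text:
        return "transfer_money"
    if "human" in text or "agent" in text:
        return "agent_handoff"
    return "fallback"


results = {name: evaluate(model, GOLDEN_SET)
           for name, model in [("v1", model_v1), ("v2", model_v2)]}
best = max(results, key=lambda name: results[name]["macro_f1"])
for name, scores in results.items():
    print(name, scores)
print("better on this golden set:", best)
```

Because both versions are scored on the same expressions, a difference in scores reflects the models rather than the test data. In practice, a library such as scikit-learn (`sklearn.metrics.f1_score` with `average="macro"`) can compute these metrics. Note that its macro average also includes labels that appear only in the predictions, so its numbers can differ from this sketch.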

Note: When a Conv AI app is cloned, its intent golden test set (GTS) is duplicated as well. This keeps intent behavior consistent between the original and the clone and reduces the need for manual reconfiguration.