Golden Test Sets

The Golden Test Set feature makes it simple to evaluate how well your Digital Twin is performing.

With this tool, you can compare your Twin's responses to a set of ideal responses for different user queries. This helps you see how accurate your Twin is, especially when it comes to the information stored in your content sources.

Because the test runs before your Twin is deployed, you can find and fix weak answers in advance, so your Twin is as effective as possible when it goes live.

To set up the Golden Test Set

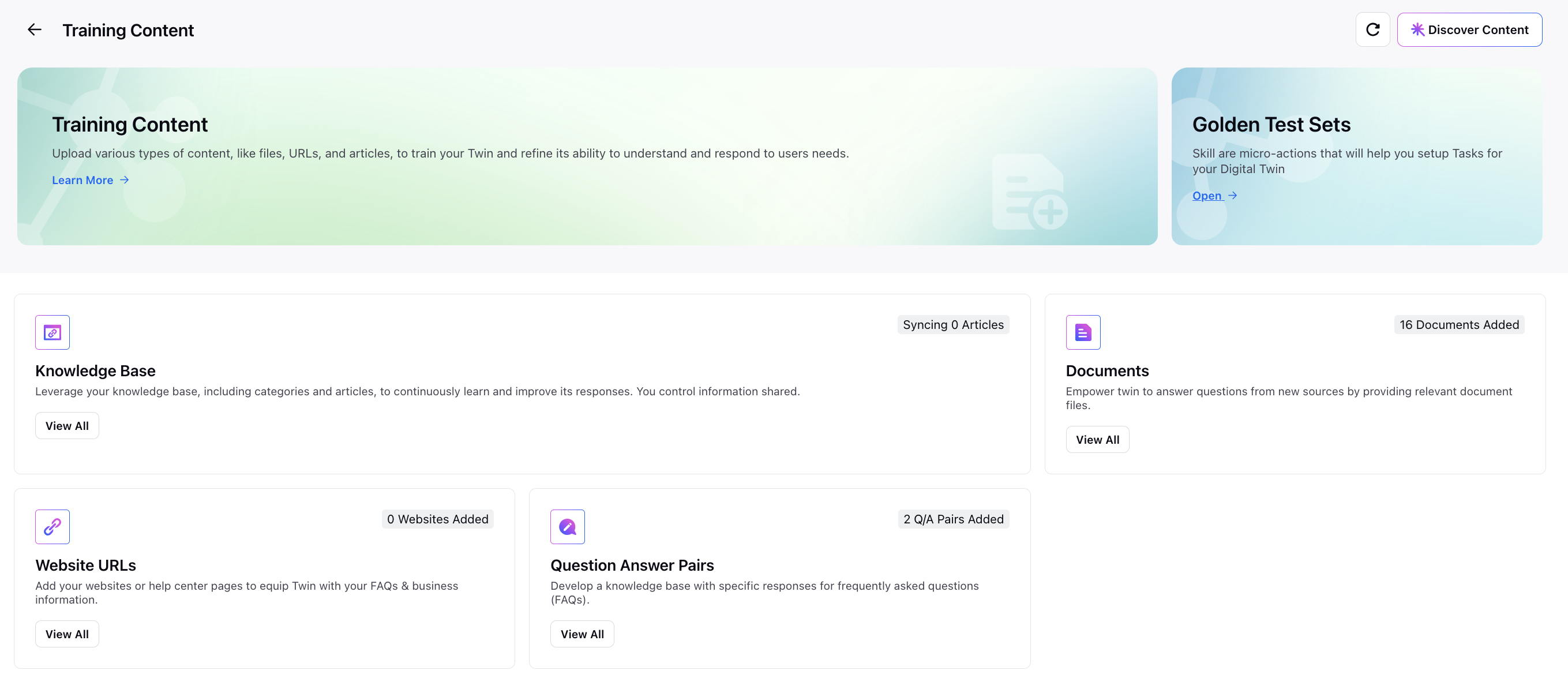

On the Training Content window, click Open within the Golden Test Sets box at the top right to navigate to the Golden Test Set Classification Report window.

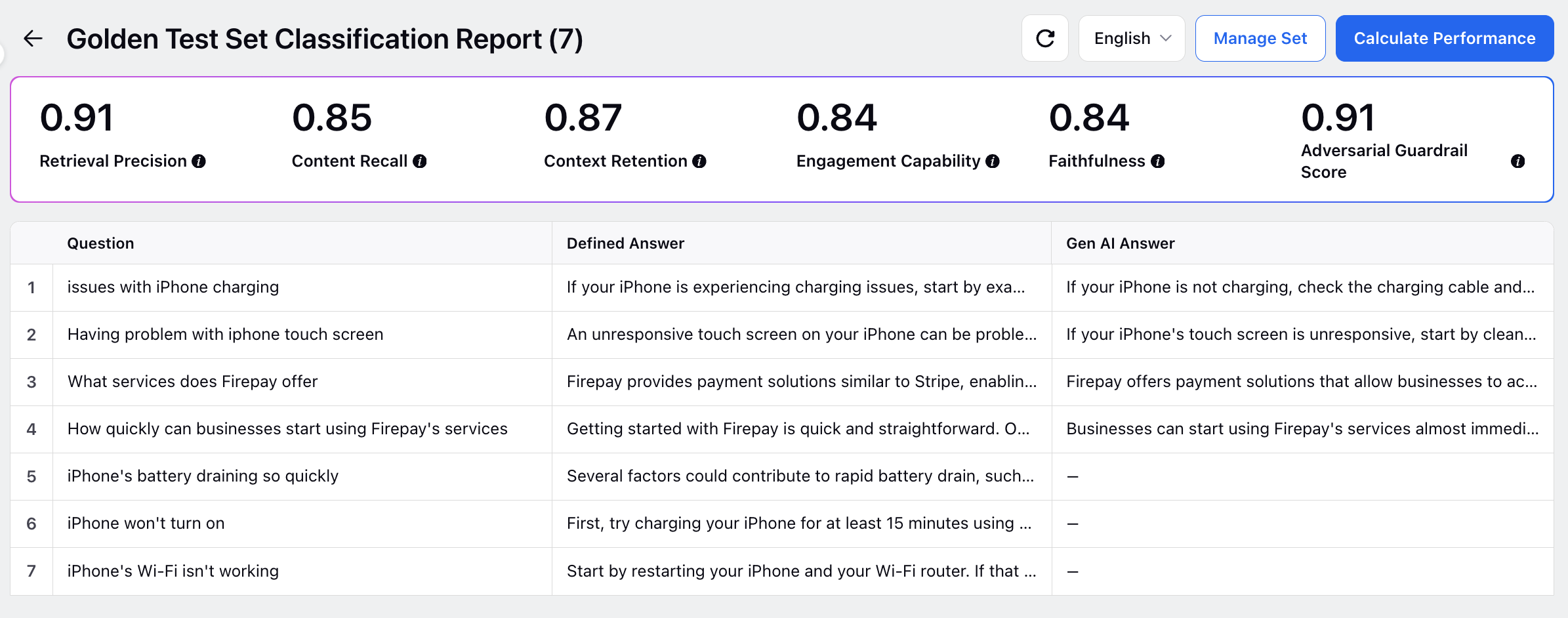

On the Golden Test Set Classification Report window, click Manage Set at the top.

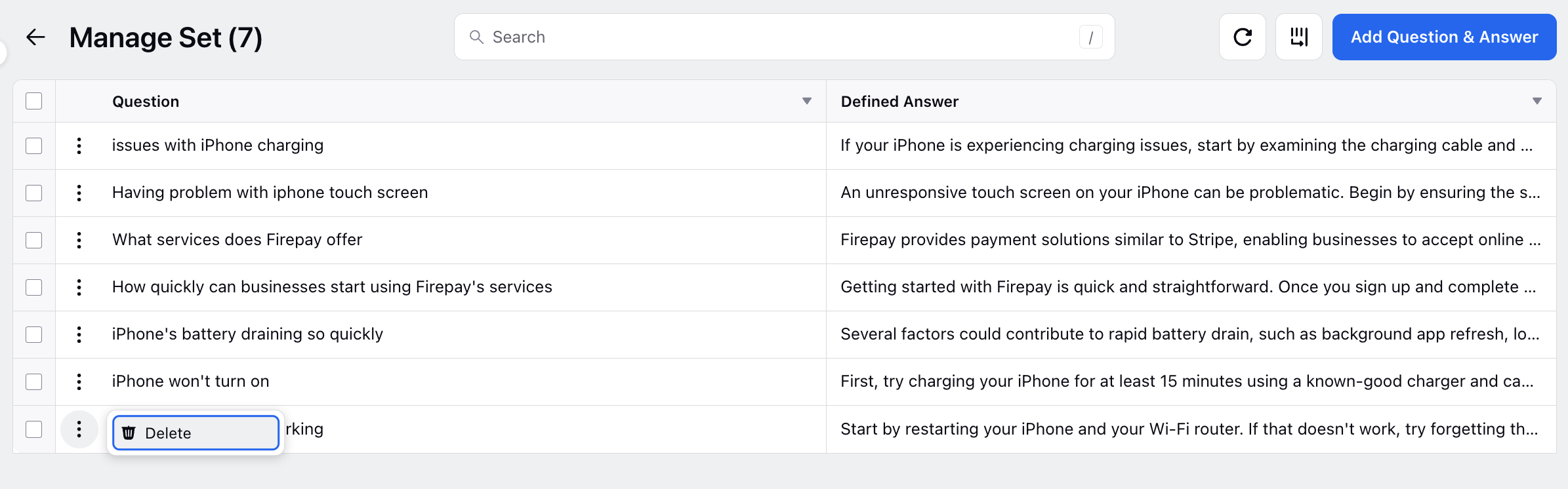

On the Manage Set window, click Add Question & Answer in the top right corner.

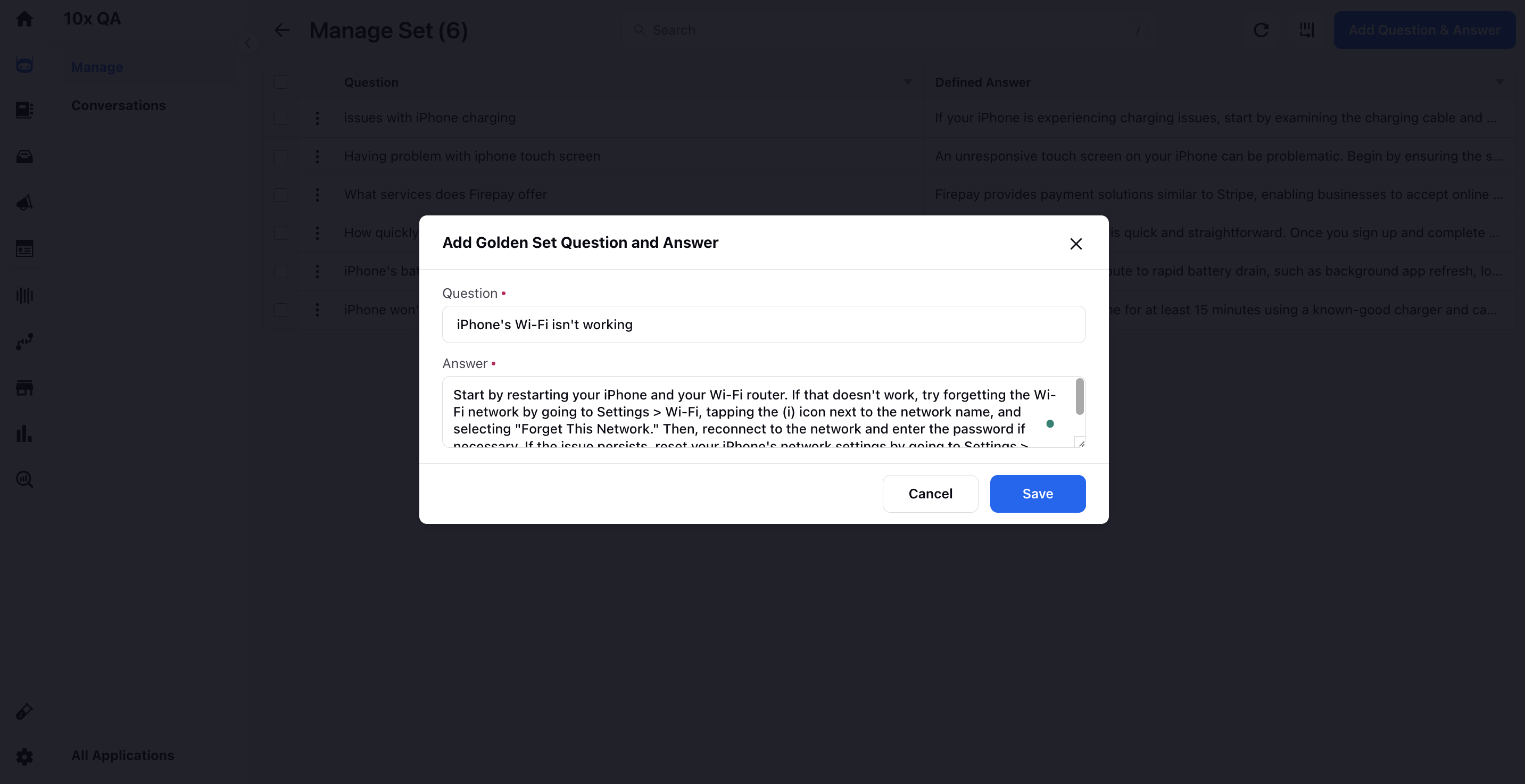

On the Add Golden Set Question & Answer window, input the question and its corresponding ideal answer. Click Save to store the information. You can add as many questions and answers as needed.
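If you plan to add many pairs, it can help to draft and check them outside the product first. The sketch below is one possible way to do that in Python. The `GoldenPair` class, its field names, the validation rules, and the sample questions are all illustrative assumptions and not part of the product; entries are still added through the Add Golden Set Question & Answer window.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GoldenPair:
    """One draft entry: a user query and its ideal answer (hypothetical structure)."""
    question: str
    ideal_answer: str


def validate_pairs(pairs):
    """Return a list of problems: empty fields or case-insensitive duplicate questions."""
    problems = []
    seen = set()
    for i, pair in enumerate(pairs, start=1):
        if not pair.question.strip():
            problems.append(f"entry {i}: empty question")
        if not pair.ideal_answer.strip():
            problems.append(f"entry {i}: empty ideal answer")
        key = pair.question.strip().lower()
        if key and key in seen:
            problems.append(f"entry {i}: duplicate question")
        seen.add(key)
    return problems


# Made-up sample data, used only to exercise the checks.
draft = [
    GoldenPair("What are your support hours?", "Support is available 9am to 5pm on weekdays."),
    GoldenPair("what are your support hours?", "Weekdays, 9 to 5."),
    GoldenPair("How do I reset my password?", ""),
]
issues = validate_pairs(draft)
for issue in issues:
    print(issue)
```

Catching duplicates and blank answers before entry keeps the set small and makes each score easier to interpret.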

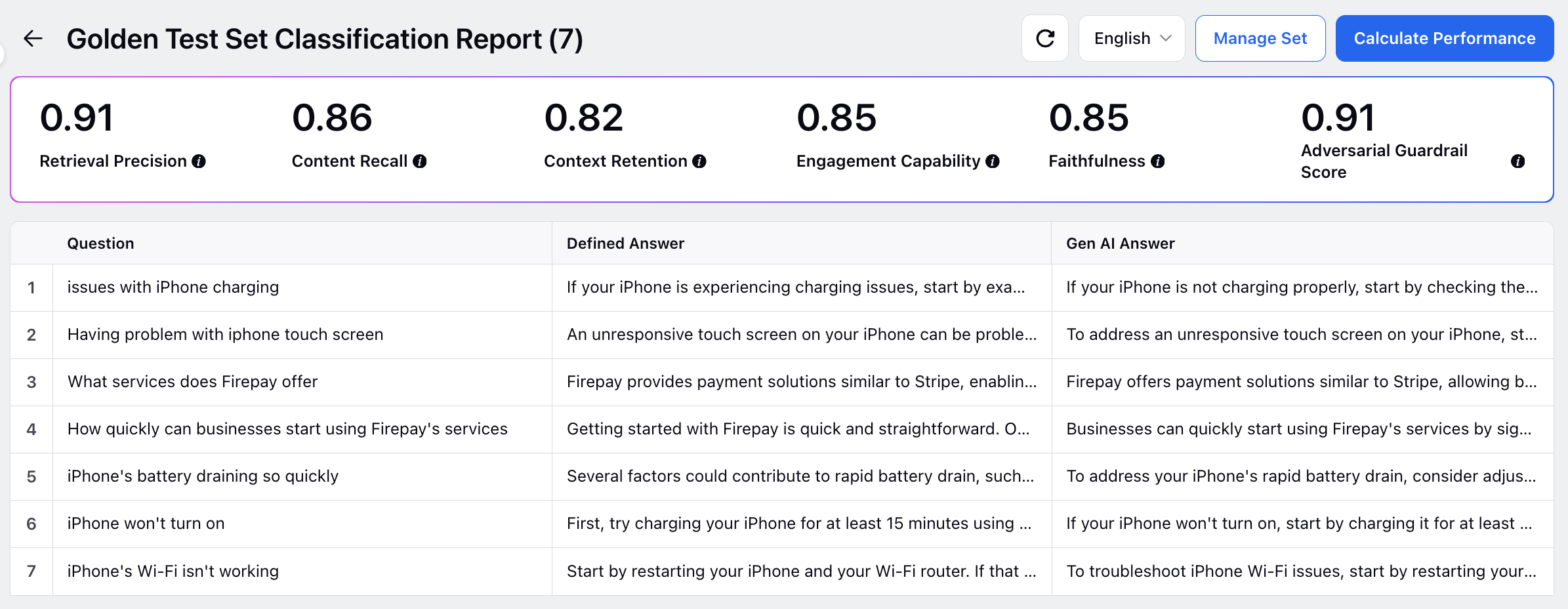

Navigate back to the Golden Test Set Classification Report window and click Calculate Performance at the top. The Gen AI Answer column is then populated with the responses the AI generates from your imported content. Hover over a response to read it in full, then judge its quality and relevance by comparing it with the ideal response you provided.
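If you copy a generated response and its ideal response out of the report, a rough word-overlap check can help you decide which pairs to read closely first. The sketch below computes a token-level F1 score in Python. It is an assumption-laden illustration, not the method the product uses for its scores; `tokenize` and `token_f1` are hypothetical helper names, and lexical overlap says nothing about whether two differently worded answers mean the same thing.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(generated, ideal):
    """Token-overlap F1 between a generated answer and the ideal answer.

    Returns a value between 0.0 (no shared words) and 1.0 (same bag of words).
    """
    gen = Counter(tokenize(generated))
    ref = Counter(tokenize(ideal))
    overlap = sum((gen & ref).values())  # shared tokens, counted with multiplicity
    if overlap == 0:
        return 0.0
    precision = overlap / sum(gen.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Made-up example strings.
score = token_f1(
    "Support is open on weekdays from 9am to 5pm.",
    "Support is available 9am to 5pm on weekdays.",
)
print(round(score, 2))
```

A low score is a prompt to read the pair carefully, not a verdict: a correct answer phrased differently from the ideal answer can still score poorly.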

You can also access various scores at the top of the window:

Retrieval Precision: This metric evaluates the precision of the information retrieved by the AI model.

Content Recall: This metric measures how much of the relevant information the AI model correctly recalls and returns.

Context Retention: This metric assesses the AI model's ability to maintain a consistent flow and depth in a conversation.

Engagement Capability: This score considers factors such as grammar, NLP metrics, and explainability to evaluate the AI model's ability to engage users effectively.

Faithfulness: This metric measures how accurately the AI model's responses reflect correct information, and the extent to which the model generates incorrect or "hallucinated" responses.

Adversarial Guardrail Score: This metric evaluates the AI model's robustness against jailbreak attempts, assessing how effectively it upholds its guardrails.
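The descriptions above do not state how each score is calculated. For orientation only, the names Retrieval Precision and Content Recall echo the standard information-retrieval definitions of precision and recall, sketched below in Python. Treating retrieved content as a set of passage identifiers is an assumption, `precision_recall` is a hypothetical helper, and the product's own scores may be computed differently.

```python
def precision_recall(retrieved, relevant):
    """Textbook precision and recall over two collections of passage IDs.

    precision = relevant items retrieved / all items retrieved
    recall    = relevant items retrieved / all relevant items
    """
    retrieved_set = set(retrieved)
    relevant_set = set(relevant)
    hits = retrieved_set & relevant_set
    precision = len(hits) / len(retrieved_set) if retrieved_set else 0.0
    recall = len(hits) / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Made-up IDs: 4 passages retrieved, 3 passages actually relevant, 2 in common.
p, r = precision_recall(
    ["doc1", "doc2", "doc3", "doc4"],
    ["doc2", "doc4", "doc7"],
)
print(f"precision={p:.2f} recall={r:.2f}")
```

Under these definitions, high precision with low recall would mean the retrieved content is on topic but incomplete, while the reverse would mean the relevant content is found but mixed with unrelated material.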